OpenStack in Practice: 2023 Edition

References

Physical Infrastructure Layer

Basic Hardware

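| Server hardware requirement | Ensures high availability and reliability |
|---|---|
| Physical switch stacking (IRF) | Prevents a switch from being a single point of failure |
| Switch port aggregation (LACP) | Mutual redundancy and higher throughput |
| Multi-NIC bonding on servers | bond mode=2/4/6; mutual redundancy and higher throughput |
| Identical CPUs across compute nodes | Allows smooth VM migration |
| Identical memory across compute nodes | Keeps VM creation and scheduling balanced; avoid overcommit where possible |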
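The bond modes in the table are plain numbers, which are easy to mix up. As a small reference sketch, the function below maps a Linux bonding mode number to the kernel's name for it; the function name `bond_mode_name` is only illustrative.

```shell
#!/bin/sh
# Map a Linux bonding mode number to the kernel's mode name.
bond_mode_name() {
    case "$1" in
        0) echo "balance-rr" ;;
        1) echo "active-backup" ;;
        2) echo "balance-xor" ;;
        3) echo "broadcast" ;;
        4) echo "802.3ad" ;;
        5) echo "balance-tlb" ;;
        6) echo "balance-alb" ;;
        *) echo "unknown"; return 1 ;;
    esac
}

# The three modes named in the table above.
for mode in 2 4 6; do
    echo "mode=$mode -> $(bond_mode_name "$mode")"
done
```

Mode 4 (802.3ad) is the one that pairs with LACP on the switch side; modes 2 and 6 do not need LACP negotiation.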

System Installation and Requirements

Hardware requirements:

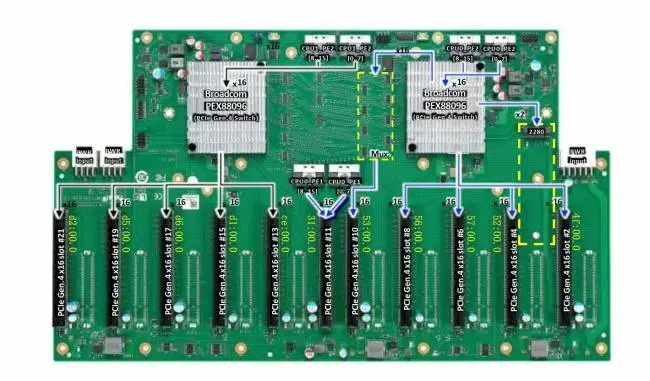

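- Usage: dedicated, bare metal
- Server: liquid-cooled all-in-one AI server
- CPU: 2 × Intel Platinum 8352V (3rd Gen Intel® Xeon® Scalable), 36 cores, Hyper-Threading, 3.50 GHz
- Memory: 16 × 64 GB Samsung DDR4 ECC, 1024 GB in total
- System disk: 1 × Samsung PM983 U.2 SSD (Gen3), 1.92 TB
- Data disks: 3 × Samsung PM9A3 NVMe® U.2 7.68 TB PCIe 4.0 SSD
- NIC: Mellanox CX5 25Gb, single port, with module
- Power: CRPS 2400 W hot-swappable power modules (2+2 redundant)
- GPU: 8 × RTX 4090 24 GB
- Half-precision performance: 8 × 165.2 TFLOPS
- Single-precision performance: 8 × 82.58 TFLOPS
- Egress: two 10GbE firewalls harden network security and keep the uplink stable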

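Operating system:

Network configuration:

- Each server uses two network ports, 10GbE or faster.
- One public port is assigned a public IP directly.
- One internal port per server, all placed in the same VLAN.

Disk partitioning and minimal install:

- / (root partition): 40G
- swap: 16G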

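    # Isolate the remaining cores (kernel command line, /etc/default/grub)
    GRUB_CMDLINE_LINUX_DEFAULT="isolcpus=4,5,6,7,8,9,10,11,12"

    # nova.conf: the recommended value reserves the first few physical CPUs for the host
    vcpu_pin_set = 4-31
    # CPU overcommit ratio; the default is 16, and each hyper-thread counts as one CPU
    cpu_allocation_ratio = 8
    # Allow resizing on the same host, which is the fastest option
    allow_resize_to_same_host = true

    ### Memory ###
    # Disable KSM (kernel same-page merging); pages_shared and pages_sharing
    # are read-only counters, the switch is the "run" file
    echo 0 > /sys/kernel/mm/ksm/run
    # Enable transparent huge pages
    echo always > /sys/kernel/mm/transparent_hugepage/enabled
    echo never > /sys/kernel/mm/transparent_hugepage/defrag
    echo 0 > /sys/kernel/mm/transparent_hugepage/khugepaged/defrag

    # nova.conf: memory overcommit ratio; the default is 1.5, and overcommit
    # is not recommended in production
    ram_allocation_ratio = 1
    # Memory reserved for the host; VMs cannot use it, so the system keeps running normally
    reserved_host_memory_mb = 10240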
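To see what these settings mean for capacity, the sketch below computes the schedulable vCPUs and RAM of one node from the values above. It assumes the usual capacity rule, (total − reserved) × allocation ratio, an integer ratio, and a single `first-last` pin range; the function names are made up for illustration.

```shell
#!/bin/sh
# Count the CPUs in a simple "first-last" range such as vcpu_pin_set = 4-31.
range_size() {
    first=${1%-*}
    last=${1#*-}
    echo $(( last - first + 1 ))
}

# Schedulable vCPUs = pinned host CPUs * cpu_allocation_ratio (integer ratio assumed).
schedulable_vcpus() {
    echo $(( $(range_size "$1") * $2 ))
}

# Schedulable RAM in MB = (total - reserved_host_memory_mb) * ram_allocation_ratio.
schedulable_ram_mb() {
    echo $(( ($1 - $2) * $3 ))
}

# Values from the configuration above; 1024 GB of RAM is 1048576 MB.
echo "vCPUs:  $(schedulable_vcpus 4-31 8)"
echo "RAM MB: $(schedulable_ram_mb 1048576 10240 1)"
```

With `vcpu_pin_set = 4-31` and a ratio of 8, the node offers 224 vCPUs; with no memory overcommit it offers the full RAM minus the 10 GB reserve.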

OpenStack Cloud Platform Layer

Network Nodes

- neutron-dhcp-agent: deploy DHCP agents on several network nodes for HA

- neutron-l3-agent: the two mature, mainstream options are VRRP and DVR

    # The DHCP scheduler starts X DHCP agents for each network
    dhcp_agents_per_network = X
    # All routers use HA mode by default
    l3_ha = True
    # Set the maximum and minimum according to the number of network nodes
    max_l3_agents_per_router = 2
    min_l3_agents_per_router = 2

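Compute Nodes

- nova-compute: monitor for failures, fence the node, recover the node (nova service-force-down)

- neutron-openvswitch-agent: the OVS agent runs on every compute node and is distributed by design

- neutron-linuxbridge-agent: the bridge agent runs on every compute node and is distributed by design

- cinder-volume (if the backend is shared storage, deploying it on the controller nodes is recommended)

- VM instances: use the distributed health-check service Consul and enable hw:watchdog_action=reset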

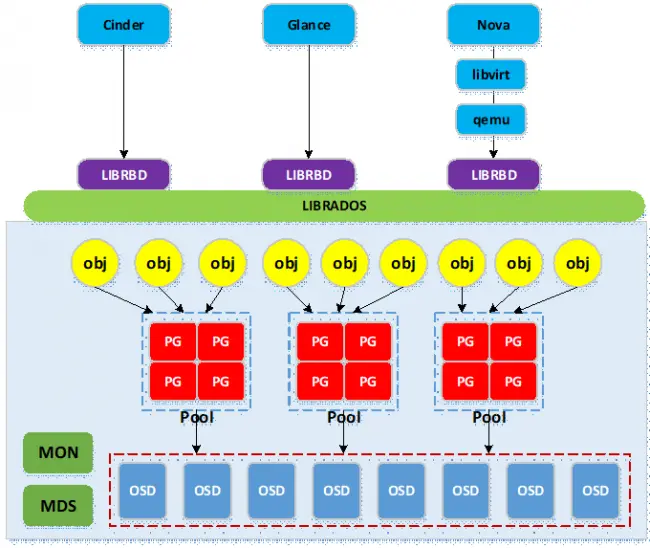

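Storage Nodes

- cinder-volume: single-node deployment, for testing only

- Ceph: large-scale distributed storage with good performance and easy maintenance; needs a large number of OSDs

- GlusterFS

- Ceph's public network and cluster network should each be on a separate network.

- Multi-NIC bonding is recommended for the NICs on Ceph storage nodes.

- Use SSDs for a relatively small but fast cache pool (cache tier).

- Use SATA disks for a large but slower storage pool (storage tier).

- In production, create four separate pools, one for each of the four OpenStack services that use storage.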

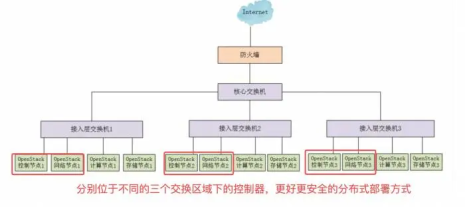

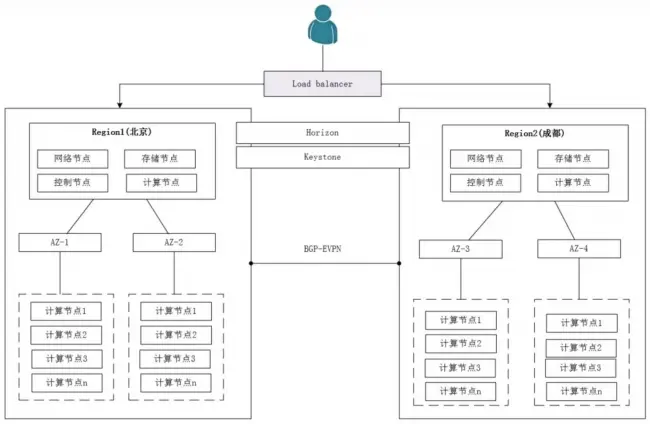

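Multiple Regions, AZs, and Host Aggregates

- Region

- Availability Zone (AZ)

- Host Aggregate

One geographic Region contains several availability zones (AZs), and the compute nodes inside one AZ can in turn be grouped logically, by whatever rule you choose, into host aggregates.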
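The grouping described above can be sketched with the `openstack aggregate` commands. The function below only prints the commands (a dry run) so it can be reviewed before anything touches the cloud; the aggregate name `gpu-4090-agg` and the host names are hypothetical, and the zone name `GPU-4090` is borrowed from the instance-creation script later in this document.

```shell
#!/bin/sh
# Print (not run) the commands that create a host aggregate bound to an AZ
# and add hosts to it. Pipe the output to `sh` to execute it for real.
plan_aggregate() {
    zone=$1
    aggregate=$2
    shift 2
    echo "openstack aggregate create --zone $zone $aggregate"
    for host in "$@"; do
        echo "openstack aggregate add host $aggregate $host"
    done
}

# Hypothetical hosts gpu-01 and gpu-02 grouped into one aggregate in zone GPU-4090.
plan_aggregate GPU-4090 gpu-4090-agg gpu-01 gpu-02
```

Printing the plan first keeps the example safe to run anywhere; on a controller with admin credentials loaded, piping the output into `sh` would apply it.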

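OpenStack Networking Concepts

What ML2 and L3 do

In OpenStack, ML2 (Modular Layer 2) is the Neutron plugin that manages the connection between virtual networks and the physical network. It combines type drivers (VLAN, GRE, VXLAN, and so on) with mechanism drivers (Open vSwitch, Linux bridge, OVN, and so on), so the same plugin covers different deployment scenarios.

L3 routing is a network service that lets VMs talk to each other and to external networks. It is normally provided by a router, which forwards packets from one subnet to another.

In OpenStack, Neutron provides the L3 routing service. With the ML2 plugin in place, the L3 service connects VMs to external networks, and it is configured and managed through the Neutron API and the command-line tools.

In short, ML2 handles layer-2 connectivity and the L3 service handles routing; together they are what you use to build and manage virtual networks.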

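Neutron: OVN or Linux bridge

Neutron can manage virtual networks through several backends. OVN and Linux bridge are two common choices.

OVN is built on OVS (Open vSwitch). It supports VMs, containers, L2/L3 networking, and security groups, and it provides distributed logical routers and logical switches that spread the virtual network workload across compute nodes, which improves performance and reliability.

Linux bridge is a simpler backend that implements virtual networks with the Linux kernel's bridging support. It suits small OpenStack deployments and covers the basics: VLAN isolation, DHCP, routing, and security groups.

Factors to weigh when choosing between them:

- Performance: for high-performance virtual networking choose OVN, because its distributed logical routers and switches improve performance and reliability.

- Features: for more complex topologies and services choose OVN, because it covers VMs, containers, L2/L3 networking, and security groups.

- Operational complexity: for simple topologies and services choose Linux bridge, because it relies only on kernel bridging and is easier to run.

- Community support and documentation: check their quality and volume for each option, so that help is available when you need it.

The choice depends on your requirements: OVN for advanced features and performance, Linux bridge for simple topologies and services.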

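Choosing between VLAN and VXLAN

VLAN is a large layer-2 technology that needs no virtual routing, so it performs somewhat better than VXLAN or GRE. It supports 4094 networks and is simple to design and operate. VXLAN is an overlay tunneling technology that encapsulates layer-2 frames in layer-3 UDP packets. It needs routing plus encapsulation and decapsulation, so it performs somewhat worse than VLAN and the architecture is more complex; in return it supports more than 16 million networks and scales well.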
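The network counts quoted above follow directly from the width of the ID fields, which the short sketch below works out. The MTU helper additionally assumes an IPv4 underlay without an outer VLAN tag, where VXLAN adds 50 bytes of headers; that figure is an assumption of this example, not something the text above states.

```shell
#!/bin/sh
# Usable VLAN IDs: a 12-bit field, with IDs 0 and 4095 reserved.
vlan_ids() { echo $(( (1 << 12) - 2 )); }

# VXLAN network identifiers (VNI): a 24-bit field.
vxlan_vnis() { echo $(( 1 << 24 )); }

# Inner MTU left for tenant traffic after VXLAN encapsulation
# (assumes 50 bytes of overhead: outer Ethernet 14 + IPv4 20 + UDP 8 + VXLAN 8).
vxlan_inner_mtu() { echo $(( $1 - 50 )); }

echo "VLAN networks:  $(vlan_ids)"
echo "VXLAN networks: $(vxlan_vnis)"
echo "Inner MTU on a 1500-byte underlay: $(vxlan_inner_mtu 1500)"
```

The encapsulation overhead is one concrete reason VXLAN costs more than VLAN: either the tenant MTU shrinks or the underlay MTU has to be raised.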

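When tenant private networks (VXLAN or VLAN) are routed to the Floating IP external network, OpenStack's default centralized routing sends all north-south traffic and all cross-network east-west traffic through the network nodes. As the number of compute nodes grows, the network nodes quickly become the bottleneck of the whole system. Distributed Virtual Router (DVR) was introduced to solve this. With DVR, routing is distributed to the compute nodes: north-south traffic and cross-subnet east-west traffic are routed by the virtual router on the compute node that hosts the VM, which improves stability and performance.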

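Why Keystone listens on two ports

With Keystone v2, the two ports (5000 and 35357) serve different APIs: one is the Identity Admin API, the other the Identity API.

With Keystone v3 there is essentially no difference between the two ports; the API is the same.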

    openstack endpoint create --region RegionOne identity admin http://controller:35357/v3

Batch-modify endpoints

    openstack endpoint list | awk '/db.service/{gsub("db.service","cc.service",$(NF-1)); print "openstack endpoint set --url",$(NF-1),$2}'
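To check what the one-liner above will generate before running it, the same rewrite can be tried on a sample row. This is a sketch: the endpoint ID `0a1b2c` is made up, the row follows the table layout the CLI prints, and the replacement here is literal rather than a regular expression.

```shell
#!/bin/sh
# Turn `openstack endpoint list` table rows into `endpoint set` commands,
# replacing one host name with another in the URL column.
rewrite_endpoints() {
    awk -v old="$1" -v new="$2" '
        NF > 1 && index($(NF-1), old) {
            url = $(NF-1)
            # Literal (non-regex) replacement of the first occurrence.
            i = index(url, old)
            url = substr(url, 1, i - 1) new substr(url, i + length(old))
            print "openstack endpoint set --url", url, $2
        }'
}

# Sample row with a made-up ID; the URL is the last column before the closing "|".
sample='| 0a1b2c | RegionOne | keystone | identity | True | admin | http://db.service.iqn:35357/v3 |'
echo "$sample" | rewrite_endpoints db.service cc.service
```

The function only prints commands. Once the output looks right, `openstack endpoint list | rewrite_endpoints db.service cc.service | sh` would apply it.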

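Ironic bare metal versus Nova virtual machines

Ironic manages bare metal and can be compared to an enterprise IT asset management system, while Nova provides the bare-metal service, that is, it allocates physical servers to users. In implementation terms, Ironic is one of Nova's compute drivers, alongside libvirt, and each bare-metal node corresponds to one hypervisor in Nova.

To create a VM, the compute node downloads the image from Glance to a local directory, defines the VM's XML template, maps the image file to a virtual block device, and calls libvirt to start the VM.

Bare-metal deployment is more involved. The conductor node first starts a TFTP service that holds the operating system's bootloader files; Neutron provides DHCP and hands out the TFTP server address. Ironic downloads the initramfs image from Glance into the conductor's TFTP path, powers the server on over IPMI, and sets it to boot from PXE (PXE must be enabled on the NIC beforehand). After PXE boot, the server downloads the bootloader from TFTP, and the bootloader tells it to load the deploy initramfs and deploy kernel, which start the deploy operating system. The deploy OS is only temporary and is not installed to disk; think of it as a minimal in-memory OS that, besides the necessary drivers and tools, also contains ironic-python-agent.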

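How VMs get metadata through cloud-init

Check the libvirtd log; if necessary, also restart the main neutron service.

Advanced OpenStack Configuration

VT-x: enable Advanced ⇒ CPU Configuration ⇒ Intel Virtualization Technology

VT-d must be enabled for I/O virtualization. On AMD platforms the setting is IOMMU; some OEM boards label it SR-IOV.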

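Configure GPU passthrough for VMs

✅ vfio-pci: probe failed with error -22

✅ vfio X: group X is not viable

    lspci -nnv -s 02:00 | grep -i "Kernel driver"

Every device in the group must be bound to the vfio-pci driver, in particular the GPU's built-in audio and USB controllers. Change the driver with:

    driverctl set-override 0000:0c:00.4 vfio-pci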

Set up the IOMMU

    intel_iommu=on iommu=pt

Isolate PCIe devices with the vfio driver

Isolating the GPU with VFIO

    vfio-pci.ids=10de:1b81,10de:10f0 vfio_iommu_type1.allow_unsafe_interrupts=1 modprobe.blacklist=nvidiafb,nouveau

Map PCIe IDs to the VM instance that holds the card

    nvidia-smi -a | grep -i 'bus id'
    lspci | grep -i vga | grep -i nvidia
    sed -r -n '/hostdev/, /\/hostdev/{/address/p}' /etc/libvirt/qemu/instance-00000e37.xml

Get the hardware IDs

    01:00.0 VGA compatible controller [0300]: NVIDIA Corporation GP104 [GeForce GTX 1070] [10de:1b81] (rev a1)
    01:00.1 Audio device [0403]: NVIDIA Corporation GP104 High Definition Audio Controller [10de:10f0] (rev a1)

    # Check the GPU link bandwidth
    lspci -vv | awk '/VGA.+NVIDIA/{print $1}' | xargs -i sh -c 'echo {}; lspci -s {} -vvv | grep -i LnkSta:'

    # Print bus address, vendor ID, device ID, and model
    lspci -nn | sed -r -n '/VGA.*NVIDIA/s@(.*) VGA.*\[(.*)\].*\[(.*):(.*)\].*@\1 \3 \4 #\2@gp'
    02:00.0 10de 1e89 #GeForce RTX 2060
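The `vfio-pci.ids=` kernel argument shown earlier is just the `[vendor:device]` pairs from the `lspci -nn` listing joined with commas. As a sketch, the function below builds it from `lspci -nn` lines on stdin; the function name `vfio_ids_arg` is illustrative.

```shell
#!/bin/sh
# Read `lspci -nn` lines on stdin and print a vfio-pci.ids= kernel argument
# built from the trailing [vendor:device] pair of each line, in input order
# and without duplicates.
vfio_ids_arg() {
    ids=$(sed -n 's/.*\[\([0-9a-f]\{4\}:[0-9a-f]\{4\}\)\].*/\1/p' \
        | awk '!seen[$0]++' | paste -sd, -)
    echo "vfio-pci.ids=$ids"
}

# The two functions (VGA and audio) of the GTX 1070 listed above.
vfio_ids_arg <<'EOF'
01:00.0 VGA compatible controller [0300]: NVIDIA Corporation GP104 [GeForce GTX 1070] [10de:1b81] (rev a1)
01:00.1 Audio device [0403]: NVIDIA Corporation GP104 High Definition Audio Controller [10de:10f0] (rev a1)
EOF
```

On a real host, something like `lspci -nn | grep -i nvidia | vfio_ids_arg` would feed it; the class code in brackets (`[0300]`) is skipped because it has no colon.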

    GRUB_CMDLINE_LINUX="modprobe.blacklist=xhci_hcd"

Regenerate the initramfs with dracut

    echo force_drivers+=\" vfio vfio_iommu_type1 vfio_pci vfio_virqfd\" > /etc/dracut.conf.d/10-vfio.conf
    #dracut --force --add-drivers "ses hpsa megaraid megaraid_sas mpt3sas mpt2sas aacraid smartpqi" -f --kver
    dracut -f --kver `uname -r`
    #dracut -f /boot/initramfs-$(uname -r).img $(uname -r)

So when building images, set this kernel module parameter:

    GRUB_CMDLINE_LINUX="ixgbe.allow_unsupported_sfp=1"

OpenStack API Usage Examples

Create flavors with a GPU property

The alias in `pci_passthrough:alias` must match an alias name defined in the `[pci]` section of nova.conf.

    openstack flavor create c2m4d10g1 \
      --vcpus 2 --ram 4096 --disk 10 \
      --property "pci_passthrough:alias"="geforce_4090:1"

    openstack flavor create c100m800d500g8-rtx_4090 \
      --vcpus 100 --ram 819200 --disk 500 \
      --property "pci_passthrough:alias"="geforce_4090:8,audio_4090:1"

    [pci]
    passthrough_whitelist = [{"vendor_id":"10de", "product_id":"22ba"}, {"vendor_id":"10de", "product_id":"2684"}]
    alias = {"name": "geforce_4090","vendor_id":"10de", "product_id":"2684", "device_type":"type-PCI"}
    alias = {"name": "audio_4090","vendor_id":"10de", "product_id":"22ba", "device_type":"type-PCI"}

Create a VM instance from the command line

    #!/bin/sh
    readonly domain="zhy2"
    readonly uuid=$(uuidgen | cut -d- -f1)

    # A host name must be given
    host=$1
    [ -z "$host" ] && echo "$0 cpu-xx" && exit 0
    host=$(echo $host|awk -F. '{print $1}')

    cat > clouduser.txt<<EOF
    #cloud-config
    chpasswd:
      list: |
        root:upyunxxxx
      expire: False
    users:
      - name: root
        ssh_authorized_keys:
          - ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAACAQC+DMdQ+IKj1TWVjp0qe+R9cQ5HhSIinr+oJx8FoInVWsNParq4WxqXdRk4jJH/d3gFFXBgQwh2Ie6gF23KjS0O6nfNFhaN5AzcJaXPF+H9RIs6fsRmmK0Wdr6sq9W8//WTdD69MIS04u582XyUzayRy0WjCj/HoeHZFRHu8FtlcUfVvqosC0teUvEY9qRK0uehWU84lxONeJYJPq5JMU1NwJ/lnJrMkqCDBlTLcpCeNfcha4atxXvCk8QjYvRDDUc6dQlnnvWRoXF6xtOpWbcK/k1wyzQVRWP011KfBGdQ/gm8x4R9tfEwZMHUdtsgNdSot2HXIQjFqR2MtTWVkTQ1Tc8qbmTVG5CFZRT2YNPtlAsi/YQ4/9FmvBAespRBZjKI0rQX+zk8Z1oAWYvZQIxa7htqTOe0dcwXxu226ji5Ku2gD1BvCtSCGnfv6c/GpY6umo0//Vfd8IwQc54sbuAK1ms6rA7RcN1yLksfxlZry7wCuZJichNnJc+WbFxQtRGTYPi+QWG2iuj40Mh2+A323dK2G0B9bonvbmQjhPCfNOyTnwh8YfS+9PsfgRXc4hFDlVu+VaecnQXk+IxZMU4MITjF8PJa3F4/Vptaon/cZpdjSUXr7rylRRleVBOEWCtA2sBQxrjFC7z16nc6YqTPRLzmOQRuj6CGAAQqGsKriQ== shaohaiyang@gmail.com-yoga
    EOF

    #image="hashcat_gpu_hold"
    #image="debian12_nocloud"
    image="ubuntu2204"
    gpu_flavor="c2m4d20"
    gpu_zone="nova" # default: nova
    #gpu_zone="GPU-4090" # default: nova
    gpu_host=$(echo $host|awk -F. '{print $1".service."}')"$domain" # default: *
    #gpu_test_image=$(openstack image list | awk -F'|' '/'"$image"'/{print $2}')

    # Check whether the instance exists; create it if it does not
    [ ! -s /tmp/.server_list ] && openstack server list > /tmp/.server_list
    grep "$domain-$host" /tmp/.server_list
    if [ $? != 0 ];then
        openstack server create $domain-$host-$uuid --flavor $gpu_flavor \
          --image $image --availability-zone $gpu_zone:$gpu_host \
          --network provider --user-data clouduser.txt
        rm -rf clouduser.txt
    fi

    # Export the volume list if it is missing
    if [ ! -s /tmp/.volume_list ];then
        openstack volume list > /tmp/.volume_list
    fi

    openstack server list > /tmp/.server_list
    instance_id=$(grep "$domain-$host" /tmp/.server_list | awk '{print $2}')
    echo $instance_id
    openstack server list | awk '/ACTIVE/{split($8,a,"=");print $4,"ansible_ssh_host="a[2],"ansible_ssh_port=22"}' > list_vms

    # Attach every available volume that belongs to this host
    for volume_id in $(awk '/'"$host"'-vdisk.*available/{print $2}' /tmp/.volume_list);do
        echo " ----------->> mount $volume_id << -------------"
        openstack server add volume $instance_id $volume_id
    done

    [Unit]
    After=network-online.target

    [Service]
    Environment="SELINUX=disabled"
    ExecStart=/usr/local/bin/hashcat -m 1400 -a 3 1EE9146B48AE09BF64A572BF48D727B01D024EEEA614272E90DC9D480B59BC3E --force

    [Install]
    WantedBy=multi-user.target

Disable a compute node's service from the command line

    openstack compute service list
    openstack network agent list
    openstack hypervisor list
    openstack compute service set --disable ops-yoga-c2 nova-compute
    # VMs on the node must be live-migrated or cold-migrated away before the compute node can be deleted
    openstack compute service delete 1e0b442c-d138-420....

    # Example
    # curl -g -i -X PUT http://{service_host_ip}:8774/v2.1/{tenant_id}/os-services/force-down \
    #   -H "Content-Type: application/json" -H "Accept: application/json" \
    #   -H "X-OpenStack-Nova-API-Version: 2.11" -H "X-Auth-Token: {token}" \
    #   -d '{"binary": "nova-compute", "host": "compute1", "forced_down": true}'

Rediscover and register compute nodes

    # Run on a control node
    su -s /bin/sh -c "nova-manage cell_v2 discover_hosts --verbose" nova

OpenStack network configuration and port forwarding

    #!/bin/bash
    # -h
    if [ -z "$1" ]; then
        echo -e "show: show floating ip list \nport: show vm port list\nfip [fip]: show fip port forwarding list\npfa [internal ip] [vm port] [vm ip port] [external port] [fip]\npfd [fip] [fip port forwarding id]"
        exit 1
    fi

    case "$1" in
        "show")
            openstack floating ip list
            ;;
        "port")
            openstack port list
            ;;
        "fip")
            openstack floating ip port forwarding list $2
            ;;
        "pfa")
            openstack floating ip port forwarding create --internal-ip-address $2 --port $3 --protocol tcp --internal-protocol-port $4 --external-protocol-port $5 $6
            ;;
        "pfd")
            openstack floating ip port forwarding delete $2 $3
            ;;
        *)
            echo "unknown command"
            ;;
    esac

OpenStack database backup

✅ Back up the databases and keep N days of backups

    #!/bin/bash
    daytime=$(date +%Y%m%d%H)
    keepday=30
    dirname=/var/log/yoga_sqlbackup_$daytime
    dbs="mysql keystone nova nova_api nova_cell0 placement cinder neutron"
    passwd="upyunxxxx"

    mkdir -p $dirname
    for db in $dbs; do
        mysqldump --skip-extended-insert -uroot -p$passwd $db > $dirname/$db.sql
    done
    tar zcvf $dirname.tgz $dirname
    rm -rf $dirname
    # Quote the pattern so the shell does not expand it before find sees it
    find /var/log/ -name 'yoga_sqlbackup*.tgz' -a -ctime +$keepday -exec rm -rf {} \;

The nova_cell0 database has the same schema as nova. When scheduling an instance fails, the instance belongs to no cell, so its record is stored in nova_cell0; cell0 is where the data of failed scheduling attempts is kept for central management.

✅ Get the IPs of all VMs

    mysql -uroot -p$passwd nova -e "select network_info from instance_info_caches where network_info!='[]'" > .instance.sql
    while read line;do
        echo $line | jq '.[0].network.subnets[0].ips[0].address'
    done < .instance.sql
    exit

    for i in $(grep geforce-rtx nova.sql | awk -F, '{print $6}');do
        echo $i
        mysql -uroot -p$passwd nova -e "delete from instance_extra where instance_uuid like $i;"
    done

Smooth live migration of running VMs

✅ Configure libvirt on the compute nodes

    vim /etc/libvirt/libvirtd.conf

    listen_tls = 0
    listen_tcp = 1
    unix_sock_group = "root"
    unix_sock_rw_perms = "0777"
    auth_unix_ro = "none"
    auth_unix_rw = "none"
    log_filters="2:qemu_monitor_json 2:qemu_driver"
    log_outputs="2:file:/var/log/libvirt/libvirtd.log"
    tcp_port = "16509"
    listen_addr = "0.0.0.0"
    auth_tcp = "none"

    vim /etc/sysconfig/libvirtd

    LIBVIRTD_ARGS="--listen"

If migration fails, the usual causes are:

- The nova instance directory must be the same on every compute node.

- Every compute node and control node must have the same /etc/hosts.

Restart the libvirtd service and retry the migration.

🏆 OpenStack Automation in Practice

    #!/bin/sh
    repo="yoga_repo.tgz"

    # Make sure a resolver is configured
    grep -q 114.114.114 /etc/resolv.conf || echo "nameserver 114.114.114.114" > /etc/resolv.conf

    # Fetch the repo bundle and switch the yum repos if they do not point at aliyun yet
    [ -s /root/$repo ] || curl devops.upyun.com/$repo -o /root/$repo
    grep -q aliyun /etc/yum.repos.d/ -r -l || ( rm -rf /etc/yum.repos.d/* ; tar zxvf /root/$repo -C /)

    dnf makecache --refresh
    dnf install -y epel-release
    dnf install -y python3 sshpass wget nc lldpd vim-enhanced rsyslog supervisor pciutils chrony tar screen bind-utils --enablerepo=epel

    cat >> /root/.ssh/authorized_keys<<EOF
    ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAABAQC3K0pbD055f0dU/z2tQTG9RfnExjC9eKoBemxZCkQaiCHfPC/6XUEKReykZEzRLNEhI/0cOUshZjIHC9zjxHGfnJBPktjUvL4QvsA4qETk3T5uuy6PJx6P1ta4XaZy1mtEKh+Anqx8Q/w4ClrYiaUFEc62Gef+f4JUCCwlRqCvFcc5IoxNngFfUh2Wxp7hINyljVFNRcGBHs4cNSsxmE37ye+usJT98iafFi1A9SOwzUfhuB4zemNxTWoZpKNRf75gyHU+fOJ9FOuGveIPz7aoh2JLjltD1WrFnEZXsKnoftaiGaovrvO0ks++p73Q9y5o/9/9o59vcPcy7w16XgLT libo.huang@localhost.localdomain
    EOF

    dnf update -y

Configure the jump host

Install Python and speed up pip

    dnf install -y python3 python3-pip python3-libvirt sshpass libvirt --enablerepo=epel

    cat > /etc/pip.conf <<EOF
    [global]
    target = /usr/lib/python3.6/site-packages
    index-url = https://mirrors.aliyun.com/pypi/simple
    trusted-host = aliyun.com
    timeout = 120
    EOF

    python3 -m pip install -U pip
    pip3 install -U pyOpenSSL pyyaml
    pip3 install ansible -t /usr/lib/python3.6/site-packages/

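libvirt must be upgraded to a version of at least 7.5 but below 8.0 (8.0 requires a CA); otherwise volume attachment is buggy. Remove the installed libvirt packages first:

    dnf list --installed | awk '/libvirt/{print $1}' | xargs -i dnf remove -y {}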
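The version window above (at least 7.5, below 8.0) can be checked with a few lines of shell before and after the upgrade. This is a sketch that assumes a plain `major.minor[.patch]` version string and treats 7.5 itself as acceptable; the function name is illustrative.

```shell
#!/bin/sh
# Succeed when a libvirt version string is >= 7.5 and < 8.0.
libvirt_version_ok() {
    major=${1%%.*}
    rest=${1#*.}
    minor=${rest%%.*}
    [ "$major" -eq 7 ] && [ "$minor" -ge 5 ]
}

for v in 6.0.0 7.5.0 7.10.0 8.0.0; do
    if libvirt_version_ok "$v"; then
        echo "$v ok"
    else
        echo "$v not supported"
    fi
done
```

Comparing the minor number numerically matters here: a plain string comparison would rank 7.10 below 7.5.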

A concise ansible configuration file

    mkdir -p /etc/ansible; cat >> /etc/ansible/ansible.cfg <<EOF
    [defaults]
    inventory = /root/list_hosts
    remote_tmp = $HOME/.ansible/tmp
    pattern = *
    forks = 5
    poll_interval = 15
    sudo_user = root
    transport = smart
    remote_port = 22
    module_lang = C
    gathering = implicit
    host_key_checking = False
    sudo_exe = sudo
    timeout = 30
    module_name = shell
    deprecation_warnings = False
    fact_caching = memory

    [ssh_connection]
    ssh_args = -o ControlMaster=auto -o ControlPersist=60s -o UserKnownHostsFile=/dev/null -o StrictHostKeyChecking=no

    [accelerate]
    accelerate_port = 5099
    accelerate_timeout = 30
    accelerate_connect_timeout = 5.0
    accelerate_daemon_timeout = 30
    EOF

The ansible host inventory

    [control]
    ccm-01.service.iqn ansible_host=194.101.0.1
    ccm-02.service.iqn ansible_host=194.101.0.2
    ccm-03.service.iqn ansible_host=194.101.0.3

    [network]
    ccn-01.service.iqn ansible_host=194.101.0.4
    ccn-02.service.iqn ansible_host=194.101.0.5

    [compute]

Operating system preparation

Enable persistence mode on the RTX 4090 GPUs

    sudo nvidia-smi -pm 1

To enable persistence at boot:

    cat > /lib/systemd/system/nvidia-persistenced.service <<EOF
    [Unit]
    Description=NVIDIA Persistence Daemon
    After=syslog.target

    [Service]
    Type=forking
    PIDFile=/var/run/nvidia-persistenced/nvidia-persistenced.pid
    Restart=always
    ExecStart=/usr/bin/nvidia-persistenced --verbose
    ExecStopPost=/bin/rm -rf /var/run/nvidia-persistenced/*
    TimeoutSec=300

    [Install]
    WantedBy=multi-user.target
    EOF

    systemctl enable --now nvidia-persistenced

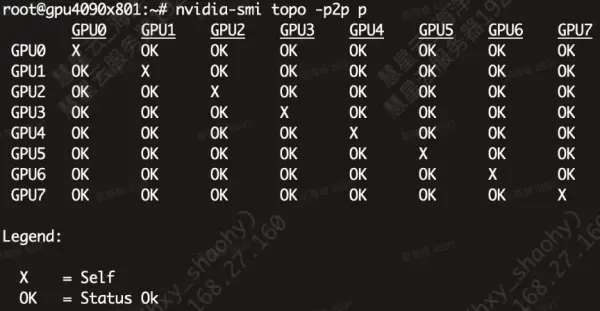

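Enable P2P on the RTX 4090 GPUs 🚀

In a traditional multi-GPU system, data moves between GPUs over the PCIe bus and is relayed by the CPU, which limits the design in two ways.

In typical models such as ResNet-152, parameter synchronization can take more than 40% of total training time. The weakness is most damaging in workloads that exchange data frequently, such as multi-node feature passing in graph neural networks (GNNs).

P2P breaks through the traditional memory hierarchy by letting GPUs access each other's memory directly over NVLink or a PCIe switch.

This point-to-point communication model brings three advantages.

Remove the existing drivers

    sudo apt-get --purge remove "*nvidia*"
    sudo apt-get --purge remove "*cuda*" "*cudnn*" "*cublas*" "*cufft*" "*cufile*" "*curand*" "*cusolver*" "*cusparse*" "*gds-tools*" "*npp*" "*nvjpeg*" "nsight*" "*nvvm*" "*libnccl*"
    sudo apt install git cmake gcc g++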

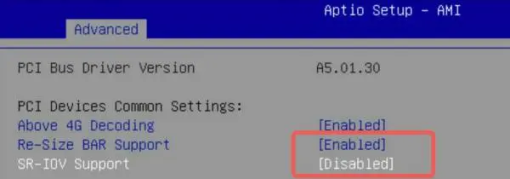

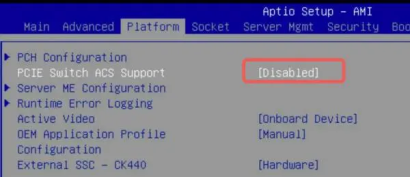

BIOS settings

- Enable Resizable BAR

- Disable Intel VT-d or AMD IOMMU

- Disable PCI ACS

Disable the Nouveau driver

    echo blacklist nouveau > /etc/modprobe.d/blacklist-nouveau.conf
    echo options nouveau modeset=0 >> /etc/modprobe.d/blacklist-nouveau.conf
    sudo update-initramfs -u
    sudo reboot

Install the open-source driver

    wget -c https://us.download.nvidia.com/XFree86/Linux-x86_64/565.57.01/NVIDIA-Linux-x86_64-565.57.01.run
    # Install without kernel modules (the P2P kernel modules are built separately afterwards)
    sudo sh ./NVIDIA-Linux-x86_64-565.57.01.run --no-kernel-modules

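Build the patched kernel modules

Download the open kernel module version that matches the driver from GitHub (for a different version, clone and switch branches, or download the ZIP directly).

    git clone git@github.com:tinygrad/open-gpu-kernel-modules.git
    cd open-gpu-kernel-modules
    sudo ./install.sh

Test P2P with the driver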

Switch the package mirror

    # Switch to the Aliyun mirror
    sed -r -i -e 's|^mirrorlist=|#mirrorlist=|g' \
      -e '/^baseurl/s|(.*releasever)/(.*)|baseurl=https://mirrors.aliyun.com/centos-vault/8.5.2111/\2|g' \
      /etc/yum.repos.d/CentOS-*.repo
    dnf -y install epel-release
    dnf install -y wget nc lldpd vim-enhanced rsyslog supervisor pciutils chrony tar screen bind-utils --enablerepo=epel

Preconfigure a qcow image with virt-customize

    yum install libguestfs-tools
    virt-customize -a centos8.qcow2 --root-password password:passw0rd \
      --run-command "sed -i 's/^mirrorlist/#mirrorlist/g' /etc/yum.repos.d/*.repo" \
      --run-command "sed -i 's|^#baseurl=http://mirror.centos.org|baseurl=http://vault.centos.org|' /etc/yum.repos.d/*.repo" \
      --install python3,python3-pip,epel-release,nc,wget,lldpd,vim-enhanced,rsyslog,chrony,tar,screen,bind-utils \
      --delete /etc/yum.repos.d/epel-test*.repo \
      --copy-in /etc/pip.conf:/etc/ \
      --update --selinux-relabel

Install all OpenStack packages in one step

    #!/bin/sh
    RDO_URL="https://repos.fedorapeople.org/repos/openstack/archived/openstack-yoga/rdo-release-yoga-1.el8.noarch.rpm"
    POWERTOOLS=$(grep -i '\[powertools\]' /etc/yum.repos.d/*.repo | sed -r -n 's@.*\[(.*)\].*@\1@gp'|sort -u|head -1)
    VERSION="yoga"
    # Colors used by the progress messages below
    YELLOW_COL="\033[1;33m"
    NORMAL_COL="\033[0m"

    dnf config-manager --set-enabled $POWERTOOLS
    #dnf --enablerepo=$POWERTOOLS --enablerepo=openstack-$VERSION install -y

    # Install the RDO repository
    # Switch to the Aliyun mirror
    sed -r -i -e 's|^mirrorlist=|#mirrorlist=|g' \
      -e '/^baseurl/s|(.*releasever)/(.*)|baseurl=https://mirrors.aliyun.com/centos-vault/8.5.2111/\2|g' \
      /etc/yum.repos.d/CentOS-*.repo
    dnf install -y epel-release.noarch
    dnf install -y $RDO_URL
    pip3 install osc-placement

    for svc in httpd mod_ssl mariadb-server mariadb-server-galera rabbitmq-server memcached \
      driverctl tar wget nc nmap lvm2 lldpd rsyslog pciutils chrony screen vim-enhanced \
      bind-utils libvirt ceph-common ebtables supervisor bridge-utils ipset iperf3 htop \
      haproxy device-mapper targetcli qemu-img python3-libvirt python3-rbd sysstat \
      python3-keystone python3-mod_wsgi python3-openstackclient nmon ;do
        echo -e "${YELLOW_COL}-> Installing $svc ... ${NORMAL_COL}"
        dnf list installed | grep -iq $svc
        [ $? != 0 ] && dnf install -y $svc --enablerepo=epel
        systemctl disable --now $svc
    done

    for svc in keystone dashboard glance cinder placement-api neutron neutron-ml2 neutron-linuxbridge \
      nova-api nova-metadata-api nova-conductor nova-novncproxy nova-scheduler nova-compute ;do
        svc="openstack-$svc"
        echo -e "${YELLOW_COL}-> Installing $svc ... ${NORMAL_COL}"
        dnf list installed | grep -iq $svc
        [ $? != 0 ] && dnf install -y $svc --enablerepo=epel
        systemctl disable --now $svc
    done

    yum update -y --nobest

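Replace the network service

When deploying OpenStack, its network services conflict with NetworkManager and the two cannot work together, so the legacy network service (network-scripts) is used instead.

    dnf install -y network-scripts --enablerepo=epel
    # Stop NetworkManager and disable it at boot
    systemctl unmask NetworkManager
    systemctl stop NetworkManager && systemctl disable NetworkManager
    # Start the network service and enable it at boot
    systemctl start network && systemctl enable network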

Manual network time synchronization

    [root@ccm-01 ~]# timedatectl
                   Local time: Fri 2024-01-26 18:14:39 CST
               Universal time: Fri 2024-01-26 10:14:39 UTC
                     RTC time: Fri 2024-01-26 10:14:39
                    Time zone: Asia/Shanghai (CST, +0800)
    System clock synchronized: yes
                  NTP service: active
              RTC in local TZ: no

    [root@ccm-01 ~]# chronyc sources -v
      .-- Source mode  '^' = server, '=' = peer, '#' = local clock.
     / .- Source state '*' = current best, '+' = combined, '-' = not combined,
    | /             'x' = may be in error, '~' = too variable, '?' = unusable.
    ||                                                 .- xxxx [ yyyy ] +/- zzzz
    ||      Reachability register (octal) -.           |  xxxx = adjusted offset,
    ||      Log2(Polling interval) --.      |          |  yyyy = measured offset,
    ||                                \     |          |  zzzz = estimated error.
    ||                                 |    |           \
    MS Name/IP address         Stratum Poll Reach LastRx Last sample
    ===============================================================================
    ^- tick.ntp.infomaniak.ch        1  10   377   462  -7859us[-8245us] +/-  115ms
    ^+ time.neu.edu.cn               1  10   177   132    -22ms[  -22ms] +/-   28ms
    ^* time.neu.edu.cn               1  10   177   360    -26ms[  -26ms] +/-   34ms
    ^- ntp6.flashdance.cx            2  10   377   431    -29ms[  -30ms] +/-   91ms

If timedatectl fails with the errors below, reinstall mozjs60:

    Failed to set ntp: Failed to activate service 'org.freedesktop.timedate1': timed out (service_start_timeout=25000ms)
    Failed to set local RTC: Connection timed out

    dnf reinstall -y mozjs60-60.9.0-4.el8.x86_64.rpm

Enable IP forwarding in the kernel

Before creating bridges, enable IP routing by setting the kernel parameters with sysctl.

    tee /etc/sysctl.d/iprouting.conf<<EOF
    net.ipv4.ip_forward=1
    net.ipv6.conf.all.forwarding=1
    net.bridge.bridge-nf-call-iptables=1
    net.bridge.bridge-nf-call-ip6tables=1
    net.ipv4.ip_nonlocal_bind=1
    EOF

Disk checks and logical volumes

    mkdir -p /disk/nvme-disk
    pvcreate -ff -y /dev/nvme0n1 /dev/nvme1n1
    vgcreate -y nvme-disk /dev/nvme0n1 /dev/nvme1n1
    lvcreate -n nova-volume -L 2000G -y nvme-disk

    # Optional: only needed if the data volumes should also live on NVMe
    #lvcreate -n cinder-volume --type raid0 --stripes 2 -l 100%free -I 128k nvme-disk

    # Verify that the LVM volumes were created
    lvs -a -o +devices,segtype nvme-disk

    # Format the volume, add it to /etc/fstab, and mount it at /disk/nvme-disk
    mkfs.ext4 -L /disk/nvme-disk /dev/nvme-disk/nova-volume
    sed -r -i '/nvme-disk/d' /etc/fstab
    blkid | awk '/disk-nova/{print $3"\t/disk/nvme-disk\t\text4\tdefaults\t0 0"}' >> /etc/fstab
    mount -a

    # raid0 made formatting far too slow, possibly a bug, so the default layout is used instead
    #lvcreate -n nova-volume --type raid0 --stripes 2 -L 2000G -I 128k nvme-disk

Build kernel support for vfio

On compute nodes, check whether vfio is enabled for the PCIe devices.

    ls -adl /sys/kernel/iommu_groups/*
    lspci -nn | sed -r -n '/VGA.*NVIDIA/s@.*\[(.*)\].*\[.*@\1@gp' | tr ' ' '_' | sort -u
    lspci -nn | awk '/NVIDIA/{split($1,a,".");print a[1]}' | sort -u
    lspci -nnv -s $gpuid | grep -iE "$id|Kernel driver"

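Set up host names and service discovery

- CoreDNS + Consul for automatic discovery, registration, health checking, and load balancing of internal and external domain names

Set up automatic registration and discovery based on Consul and CoreDNS, then check Consul's health.

    # List the members
    consul members -http-addr 127.0.0.1:8500
    # Show the state of each node
    consul operator raft list-peers -http-addr=127.0.0.1:8500
    # Show the leader
    curl 127.0.0.1:8500/v1/status/leader
    # Host-name discovery through Consul, tested working
    dig ccm-01.service.iqn @127.0.0.1 -p8600
    # Name resolution through CoreDNS, tested working
    dig ccm-01.service.iqn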

CoreDNS configuration

    .:5353 {
        # Added line: the template plugin intercepts AAAA queries and returns an empty answer (NODATA)
        template IN AAAA .
        bind 192.168.0.1 100.100.8.1
        acl {
            allow net 172.16.0.0/12 100.100.0.0/16
            block
        }
        errors
        log stdout
        health localhost:8080
        prometheus 127.0.0.1:9253
        cache {
            success 30000 300
            denial 1024 5
            prefetch 1 1m
        }
        loadbalance round_robin
        rewrite continue {
            ttl regex (.*) 30
        }
        rewrite name regex (.*).ngb1s3.dc.huixingyun.com ngb1i.dc.huixingyun.com
        rewrite name ngb1.dc.huixingyun.com ngb1i.dc.huixingyun.com
        forward service.ngb1 100.100.8.1:8600 100.100.8.2:8600
        forward . 119.29.29.29 180.76.76.76 114.114.114.114 {
            except service.ngb1
        }
    }

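Set up the distributed database

Set up a distributed Galera database and initialize it (initializing one database first and letting it replicate to the others also works).

    ./easyStack_yoga.sh control_init

    UPDATE user SET Password=PASSWORD("xxx") WHERE User="root";
    grant all privileges on *.* to root@'100.100.%' identified by 'xxx' with grant option;
    SHOW STATUS LIKE 'wsrep%';
    SHOW GLOBAL STATUS LIKE '%aborted_connects%';

For a normal first start of the cluster, use the command: galera_new_cluster

For other versions, consult their own documentation.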

Tuning MySQL + Galera cluster parameters

    ### shy_begin
    default-storage-engine = innodb
    innodb_file_per_table = on
    back_log = 10240
    max_connections = 10240
    thread_cache_size = 10240
    max_connect_errors = 10240
    thread_pool_idle_timeout = 7200
    connect_timeout = 7200
    net_read_timeout = 7200
    net_write_timeout = 7200
    interactive_timeout = 7200
    wait_timeout = 7200
    host_cache_size = 0
    thread_pool_size = 1024
    query_cache_size = 512M
    max_allowed_packet = 512M
    collation-server = utf8_general_ci
    character-set-server = utf8
    bind-address = 10.33.66.1
    port = 3308
    ### shy_end

    # Enable wsrep
    wsrep_on=1
    wsrep_cluster_name="yoga_wsrep_db"
    wsrep_provider=/usr/lib64/galera/libgalera_smm.so
    bind-address="10.33.66.1"
    wsrep_node_address="10.33.66.1"
    wsrep_cluster_address="gcomm://10.33.66.1,10.33.66.2,10.33.66.3"
    wsrep_provider_options="gmcast.listen_addr=tcp://10.33.66.1:4567; gcs.fc_limit = 2048; gcs.fc_factor = 0.99; gcs.fc_master_slave = yes"
    wsrep_retry_autocommit = 50
    wsrep_slave_threads=300
    wsrep_max_ws_rows = 0
    wsrep_max_ws_size = 2147483647

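- After the whole cluster has been shut down, restart it from any one host. On that host, edit the state file:

    vim /var/lib/mysql/grastate.dat

    # GALERA saved state
    version: 2.1
    uuid:    <your own cluster id>
    seqno:   -1
    safe_to_bootstrap: 0

  Change it to:

    seqno:   1
    safe_to_bootstrap: 1

Command to restart the cluster: galera_new_cluster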
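The steps above restart from any host. A common precaution, not part of the original procedure, is to bootstrap from the node whose state is most advanced, that is, the one with the highest `seqno` in its `grastate.dat`. The sketch below picks that node from `host seqno` pairs; how the pairs are collected (for example with ansible across the control nodes) is left out, and the sample numbers are made up.

```shell
#!/bin/sh
# Print the seqno recorded in a grastate.dat file.
grastate_seqno() {
    awk '/^seqno:/{print $2}' "$1"
}

# Read "host seqno" pairs on stdin and print the host with the highest seqno,
# i.e. the node whose data is most advanced.
pick_bootstrap_node() {
    sort -k2,2n | tail -n 1 | cut -d' ' -f1
}

# Made-up sequence numbers for the three control nodes.
printf '%s\n' 'ccm-01 1204' 'ccm-02 1310' 'ccm-03 1297' | pick_bootstrap_node
```

A node that crashed reports `seqno: -1`, which sorts below every real value, so it is never chosen while another node has a recorded position.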

Check script:

    grep "New cluster view" /var/log/mariadb.log | awk -F: 'END { print $1":"$2":"$3 $6":"$7}'

- Other nodes: systemctl start mariadb (a slow start is probably rsync copying the database files)

- Check whether the database has a serious performance problem:

    #!/bin/sh
    PASS="xxxxx"
    mysql -uroot -p$PASS -e "show full processlist" | awk '/Waiting for table flush/ || /UPDATE agents/ {print $1}'

Distributed RabbitMQ

Set up a distributed RabbitMQ message queue and check its health.

    scp /var/lib/rabbitmq/.erlang.cookie node:/var/lib/rabbitmq/.erlang.cookie
    rabbitmqctl stop_app
    rabbitmqctl reset
    rabbitmqctl forget_cluster_node ccm-01
    rabbitmqctl join_cluster --disc rabbit@ccm-01
    rabbitmqctl start_app
    rabbitmqctl set_policy ha-all "^ha\." '{"ha-mode":"all"}'
    rabbitmqctl cluster_status
    rabbitmqctl change_password guest $DBPASSWD
    rabbitmqctl set_permissions guest ".*" ".*" ".*"
    rabbitmq-plugins enable rabbitmq_management

If a machine that was in the cluster has gone offline, it must be removed from the cluster before it can rejoin:

    rabbitmqctl forget_cluster_node "$node"
    rabbitmqctl cluster_status # check the RabbitMQ cluster status

    # Append rc-local.service to the After= line of each unit
    for srv in mariadb@ memcached rabbitmq-server keepalived;do
        sed -r -i '/^After/s^$^ rc-local.service^g' /usr/lib/systemd/system/$srv.service
    done

Automated deployment with EasyStack

Install a specific RDO release

    dnf -y install https://repos.fedorapeople.org/repos/openstack/openstack-yoga/rdo-release-yoga-1.el8.noarch.rpm
    dnf install -y network-scripts wget nc lldpd vim-enhanced rsyslog supervisor pciutils chrony tar screen bind-utils --enablerepo=epel

    dnf config-manager --set-disabled epel
    dnf config-manager --set-enabled powertools

Download and configure the script

    wget -c http://xxxx.upyun.com/easyStack_yoga.sh
    chmod +x /root/easyStack_yoga.sh
    ./easyStack_yoga.sh

    # The script asks a few questions and generates the easystackrc file
    HOSTNAME="ccm-01.service.iqn"
    NODE_TYPE="control" # network or compute
    REGION="Region021"
    CCVIP=db.service.iqn
    MY_IP=100.100.62.3
    VIRT_TYPE="kvm"
    PROVIDER_INTERFACE="ens118f0"
    GLERA_SRV="100.100.62.1,100.100.62.2,100.100.62.3"
    MEMCACHES="db.service.iqn:11211"
    STORE_BACKEND=ceph
    NOVA_URL="http://db.service.iqn:8774/v2.1"
    IMAGE_URL="http://db.service.iqn:9292/v2"
    VOLUME_URL="http://db.service.iqn:8776/v3"
    NEUTRON_URL="http://net.service.iqn:9696"
    PLACEMENT_URL="http://db.service.iqn:8778"
    KEYS_AUTH_URL="http://db.service.iqn:5000/v3"
    KEYS_ADMIN_URL="http://db.service.iqn:35357/v3"

    # Then run the tuning step
    ./easyStack_yoga.sh adjust_sys

    ansible all -m copy -a "src=authorized_keys dest=/root/.ssh/"

With KVM virtualization, also set the following in nova.conf:

    libvirt.cpu_mode = (custom, host-model, host-passthrough)

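Configuring OpenStack node roles

Deploying the control cluster

Install all services on each of the three control nodes in turn.

    ./easyStack_yoga.sh keys_init
    ./easyStack_yoga.sh gls_init
    ./easyStack_yoga.sh cinder_init
    ./easyStack_yoga.sh nova_init     # initialize the database and the control services
    ./easyStack_yoga.sh neutron_init  # initialize the database only
    ./easyStack_yoga.sh probe_hypervisor
    # The control nodes must keep their Fernet keys in sync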

Initialize the Fernet keys and sync them to every control node

    # On any one control node, initialize the Fernet keys; this creates the keys and directories under /etc/keystone/
    keystone-manage fernet_setup --keystone-user keystone --keystone-group keystone
    keystone-manage credential_setup --keystone-user keystone --keystone-group keystone

    # Sync the keys to controller02/03
    #!/bin/sh
    for ccm in ccm-02 ccm-03;do
        rsync -avz -e "ssh" /etc/keystone/ $ccm:/etc/keystone/
        rsync -avz -e "ssh" /var/lib/keystone/ $ccm:/var/lib/keystone/
    done

    # After syncing, check the key ownership on controller02/03
    chown keystone:keystone /etc/keystone/credential-keys/ -R
    chown keystone:keystone /etc/keystone/fernet-keys/ -R

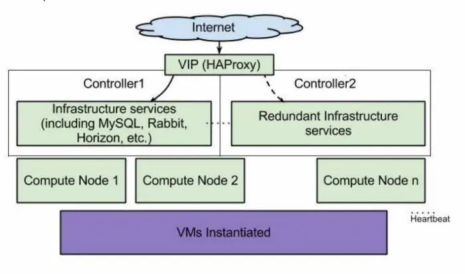

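Stateless API services, such as:

- nova-api: accepts and responds to external requests; compatible with the EC2 API

- nova-conductor: the middle layer for database access

- nova-scheduler: schedules instances

- nova-novncproxy: VNC proxy

- glance-api: handles discovery, registration, and lookup of VM images

- keystone-api: handles authentication, service rules, and service tokens

- neutron-server: exposes the OpenStack networking API and its extensions

- neutron-api: accepts API requests and calls the plugin to create networks, subnets, routers, and so on

These can be made highly available and load-balanced with HAProxy/Nginx plus a Keepalived VIP, which forwards each request to the API service on one of the nodes according to the configured algorithm.

Stateful services include the MySQL database and the AMQP message queue. For HA of stateful services such as:

- neutron-l3-agent / neutron-linuxbridge-agent: the agents on these nodes are what actually execute network commands

- neutron-metadata-agent: forwards metadata requests to nova-api (the metadata process)

- nova-compute: maintains and manages the compute resources of the cloud and the VM life cycle

- cinder-volume, and so on

the simplest approach is multi-node deployment (with Consul automatic registration and discovery).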

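Deploying the network cluster

Install the neutron services on each of the two network nodes in turn.

    ./easyStack_yoga.sh neutron_init | neutron_start | neutron_restart # start the network services

Deploying compute nodes

Compute nodes need the nova services.

    ./easyStack_yoga.sh nova_init | nova_start | nova_restart # start the compute services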

Check that the services are running

    ss -tnplu | grep -E ":80 |3306|8774|8776|9292|9696|11211|15672|5000|35357" | awk '{print $1,$2,$5}'
    # 80           httpd
    # 3306         mysql
    # 8774         nova
    # 8776         cinder
    # 9292         glance
    # 9696         neutron
    # 5000, 35357  keystone
    # 11211        memcache
    # 15672        rabbitmq
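Reading the `ss` output by eye gets tedious across several nodes. As a sketch, the function below takes a list of listening ports and reports which of the services above are missing; the port-to-service table is copied from the comments above, while the function and variable names are illustrative.

```shell
#!/bin/sh
# Expected port:service pairs, taken from the list above.
EXPECTED="80:httpd 3306:mysql 5000:keystone 35357:keystone-admin 8774:nova 8776:cinder 9292:glance 9696:neutron 11211:memcache 15672:rabbitmq"

# Read listening port numbers (one per line) on stdin and print each
# expected service whose port is absent.
missing_services() {
    ports=$(cat)
    for entry in $EXPECTED; do
        port=${entry%%:*}
        name=${entry#*:}
        echo "$ports" | grep -qx "$port" || echo "$name ($port)"
    done
}

# Example input: every port is listening except glance's 9292.
printf '%s\n' 80 3306 5000 35357 8774 8776 9696 11211 15672 | missing_services
```

On a live node the port list could come from something like `ss -tnl | awk 'NR>1{n=split($4,a,":"); print a[n]}' | sort -u`, piped into `missing_services`.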

Create the required Ceph pools

    ceph osd pool create volumes 128 128 replicated rep_ssd
    ceph osd pool create backups 128 128 replicated rep_ssd
    ceph osd pool create vms 128 128 replicated rep_ssd
    ceph osd pool create images 64 64 replicated rep_ssd

Enable Ceph authentication for Glance and Cinder

    ceph auth get-or-create client.cinder mon 'allow r' osd 'allow class-read object_prefix rbd_children, allow rwx pool=volumes, allow rwx pool=vms, allow rx pool=images'
    ceph auth get-or-create client.glance mon 'allow r' osd 'allow class-read object_prefix rbd_children, allow rwx pool=images'
    ceph auth get-or-create client.cinder-backup mon 'allow r' osd 'allow class-read object_prefix rbd_children, allow rwx pool=backups'

    ceph auth get-or-create client.cinder > /etc/ceph/ceph.client.cinder.keyring
    ceph auth get-or-create client.cinder-backup > /etc/ceph/ceph.client.cinder-backup.keyring
    ceph auth get-or-create client.glance > /etc/ceph/ceph.client.glance.keyring
    chown cinder:cinder /etc/ceph/*cinder*
    chown glance:glance /etc/ceph/*glance*

Enable both Ceph and LVM backends in Cinder

    enabled_backends = ceph,lvm

    [ceph]
    volume_driver = cinder.volume.drivers.rbd.RBDDriver
    rbd_pool = volumes
    rbd_ceph_conf = /etc/ceph/ceph.conf
    rbd_flatten_volume_from_snapshot = false
    rbd_max_clone_depth = 5
    rbd_store_chunk_size = 4
    rados_connect_timeout = -1
    rbd_user = cinder
    rbd_secret_uuid = 85b90a2a-3072-4fef-b4cb-17de9346a4ea
    volume_backend_name = ceph-gpu-01

    [lvm]
    volume_driver = cinder.volume.drivers.lvm.LVMVolumeDriver
    volume_group = ssd1
    volume_backend_name = lvm-gpu-01
    target_helper = lioadm

- Install targetcli and enable the iscsid and target services

- Create the iSCSI IQN and write it to /etc/iscsi/initiatorname.iscsi

- cinder service-list → openstack volume service list

    if [ ! -L /var/lib/cinder/conversion ] ;then
        mv /var/lib/cinder/conversion /disk/ssd1/
        mkdir -p /disk/ssd1/conversion
        chown -R cinder:cinder /disk/ssd1/conversion
        ln -snf /disk/ssd1/conversion /var/lib/cinder/conversion
    fi

Improve Cinder performance with NVMe-oF

- Set up the NVMe/RDMA target and enable RDMA (such as RoCE or iWARP) on mlx5_ib

    #!/bin/bash
    readonly TYPE="rdma" # tcp
    DISK="/dev/nvme0n1"
    MY_IP=$(ip a | awk '/inet.*100.100.*internal/{split($2,a,"/");{print a[1]}}'|head -1)
    NAME="nvmeof_$TYPE"

    for mod in nvmet nvmet-tcp nvmet-rdma nvme-fabrics; do
        modprobe $mod
    done

    # Create the subsystem and its namespace
    TT=/sys/kernel/config/nvmet/subsystems/$NAME
    if [ ! -s $TT/attr_allow_any_host ]; then
        mkdir -p $TT/namespaces/1
        echo -n $DISK > $TT/namespaces/1/device_path
        echo 1 > $TT/attr_allow_any_host
        echo 1 > $TT/namespaces/1/enable
    fi

    # Create the port and bind the subsystem to it
    PP=/sys/kernel/config/nvmet/ports/1
    if [ ! -s $PP/addr_traddr ]; then
        mkdir -p $PP
        echo $TYPE > $PP/addr_trtype
        echo ipv4 > $PP/addr_adrfam
        echo $MY_IP > $PP/addr_traddr
        echo 4420 > $PP/addr_trsvcid
    fi
    ln -s /sys/kernel/config/nvmet/subsystems/$NAME $PP/subsystems/$NAME

    echo " -t $TYPE -a $MY_IP -s 4420 " > /etc/nvme/discovery.conf
    if [ ! -s /etc/nvme/hostnqn ];then
        nvme gen-hostnqn > /etc/nvme/hostnqn
        uuidgen > /etc/nvme/hostid
    fi

Then enable the nvme-pool backend in cinder.conf:

    [nvme-pool]
    image_volume_cache_enabled = true
    image_volume_cache_max_size_gb = 1000
    image_volume_cache_max_count = 100
    target_protocol = nvmet_rdma
    target_helper = nvmet
    target_ip_address = $my_ip
    target_port = 4420
    volume_group = nvme-disk
    volume_backend_name = nvme-pool

如果服务器非正常重启,会出现挂载磁盘的虚拟机开启不正常,先查询挂载卷ID

openstack server show 7c8f3d4e-xxxx -c volumes_attached

Log in to the database and remove the association manually:

update block_device_mapping SET deleted=1 WHERE instance_uuid="7c8f3d4e-xxx" and volume_id="8a532442-xxx";

Enabling volume encryption in Cinder

Deploying load balancing and high availability

Octavia OpenStack LBaaS

High availability with keepalived

Load balancing with haproxy

Containerized deployment with Kolla

    pip3 install git+https://opendev.org/openstack/kolla-ansible@stable/zed
    git clone --branch stable/zed https://opendev.org/openstack/kolla
    pip install ./kolla

Automated deployment with DevStack

Download a specific DevStack release

    dnf install -y git
    git clone -b stable/victoria https://opendev.org/openstack/devstack

Create the stack user

    useradd -s /bin/bash -d /opt/stack -m stack
    echo "stack ALL=(ALL) NOPASSWD: ALL" | sudo tee /etc/sudoers.d/stack
    chmod -R 755 /opt/
    # Switch to the stack user
    su - stack

local.conf explained

    [[local|localrc]]
    WSGI_MODE=mod_wsgi
    LIBVIRT_TYPE=qemu
    SERVICE_IP_VERSION=4

    ADMIN_USER=admin
    ADMIN_PASSWORD=upyunxxxx
    DATABASE_PASSWORD=$ADMIN_PASSWORD
    RABBIT_PASSWORD=$ADMIN_PASSWORD
    SERVICE_PASSWORD=$ADMIN_PASSWORD

    GIT_BASE=http://git.trystack.cn
    NOVNC_REPO=http://git.trystack.cn/kanaka/noVNC.git
    SPICE_REPO=http://git.trystack.cn/git/spice/spice-html5.git

    HOST_IP=10.0.6.40
    SERVICE_HOST=$HOST_IP
    MYSQL_HOST=$HOST_IP
    RABBIT_HOST=$HOST_IP
    GLANCE_HOSTPORT=$HOST_IP:9292
    KEYSTONE_AUTH_HOST=$HOST_IP
    KEYSTONE_SERVICE_HOST=$HOST_IP
    Q_HOST=$HOST_IP

    # Neutron ML2 with OpenVSwitch
    Q_PLUGIN=ml2
    Q_AGENT=openvswitch
    ENABLE_TENANT_VLANS=True
    ML2_VLAN_RANGES=physnet1:1000:2000
    NEUTRON_CREATE_INITIAL_NETWORKS=False

    ## Floating IP range used by OpenStack instances
    FLOATING_RANGE="203.0.113.0/24"
    Q_FLOATING_ALLOCATION_POOL=start=203.0.113.5,end=203.0.113.200
    FIXED_RANGE="10.0.6.200/28"
    FIXED_NETWORK_SIZE=200

    IDENTITY_API_VERSION=3
    OS_IDENTITY_API_VERSION=3
    OS_AUTH_URL="http://$KEYSTONE_AUTH_HOST/identity/"

    # Whether to reclone the repositories on each run
    RECLONE=no
    DOWNLOAD_DEFAULT_IMAGES=False
    IMAGE_URLS=http://download.cirros-cloud.net/0.3.4/cirros-0.3.4-x86_64-disk.img

    # Enabling Neutron (network) Service
    disable_service n-net tempest
    enable_service neutron
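The FIXED_RANGE and FIXED_NETWORK_SIZE values in the local.conf above can be sanity-checked before running stack.sh. The following is a minimal sketch using only Python's standard ipaddress module; the helper name check_fixed_range is hypothetical and is not part of DevStack. With the values shown above it reports that 10.0.6.200/28 has host bits set and that a /28 holds fewer addresses than the requested 200.

```python
import ipaddress


def check_fixed_range(cidr, wanted_size):
    """Return a list of human-readable problems found in a FIXED_RANGE value."""
    problems = []
    # strict=False accepts a CIDR with host bits set and normalizes it
    net = ipaddress.ip_network(cidr, strict=False)
    if str(net) != cidr:
        problems.append(f"{cidr} has host bits set; the network is {net}")
    if net.num_addresses < wanted_size:
        problems.append(
            f"{net} holds {net.num_addresses} addresses, fewer than {wanted_size}"
        )
    return problems


if __name__ == "__main__":
    # Values copied from the local.conf above
    for problem in check_fixed_range("10.0.6.200/28", 200):
        print(problem)
```

An empty list means the two settings are at least consistent with each other; it says nothing about whether the range collides with other networks.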

Running the installer

If stack.sh succeeds, it installs on the current host the services specified in local.conf. If local.conf names no services, the default set of services is installed.

As the stack user, run the following commands to log in to the OpenStack client as the administrator.

    source openrc admin admin
    # Check the status of all services
    sudo systemctl status "devstack@*"
    # List the compute services
    nova service-list
    # Remove compute services that are down
    nova service-list | awk '/nova.down/{print $2}' | xargs -i nova service-delete {}
    # List images
    openstack image list
    # List networks
    openstack network list
    # List flavors
    openstack flavor list
    # Create an instance
    openstack server create --flavor m1.nano --image cirros-0.5.1-x86_64-disk --nic net-id=<network name or ID> --security-group <security group name or ID> <instance name>
    # Check the instance status
    openstack server list
    systemctl restart httpd && systemctl enable httpd

Common installation errors

    ./unstack.sh
    ./clean.sh
    # then run ./stack.sh again

✅ clouds_file missing 1 required positional argument: 'Loader'

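PyYAML 5.1 deprecated calling yaml.load(file) without a Loader because it is unsafe, and from PyYAML 6.0 the Loader argument is required. Passing FullLoader prevents arbitrary function execution, which makes load considerably safer.

Any of the following three forms works:

    d1 = yaml.load(file, Loader=yaml.FullLoader)
    d1 = yaml.safe_load(file)
    d1 = yaml.load(file, Loader=yaml.CLoader)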

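✅ etcd cannot be downloaded from GitHub (network restrictions)

Download the matching etcd release in advance and install the binary manually.

✅ Failed to discover available identity versions when contacting

    WSGI_MODE=mod_wsgi # uwsgi appeared buggy here; running uwsgi on its own is not recommended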

no uwsgi in

http_plugin.so python3_plugin.so No such file or directory

dnf install -y uwsgi uwsgi-plugin-python3 uwsgi-plugin-common --nobest

✅ Failure creating NET_ID for private

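When DevStack deploys with its default ml2+ovs setup, creating a network first requires allocating a VLAN ID. No VLAN range is configured here, so network creation fails.

Fix: add the VLAN range settings to local.conf, for example:

    Q_PLUGIN=ml2
    Q_AGENT=openvswitch
    ENABLE_TENANT_VLANS=True
    ML2_VLAN_RANGES=physnet1:100:4000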
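A malformed ML2_VLAN_RANGES value is easy to miss until stack.sh fails. The sketch below validates the physnet:min:max form used above; parse_vlan_ranges is a hypothetical helper, not a Neutron or DevStack function, and it assumes every entry carries an explicit range and that usable VLAN IDs are 1 to 4094.

```python
def parse_vlan_ranges(value):
    """Parse 'physnet:min:max[,physnet:min:max...]' into {physnet: [(min, max), ...]}."""
    ranges = {}
    for entry in value.split(","):
        entry = entry.strip()
        if not entry:
            continue
        parts = entry.split(":")
        if len(parts) != 3:
            raise ValueError(f"expected physnet:min:max, got {entry!r}")
        physnet, low, high = parts[0], int(parts[1]), int(parts[2])
        # Assumption: VLAN IDs usable for tenant networks are 1..4094
        if not (1 <= low <= high <= 4094):
            raise ValueError(f"invalid VLAN range in {entry!r}")
        ranges.setdefault(physnet, []).append((low, high))
    return ranges


if __name__ == "__main__":
    # Value copied from the local.conf fragment above
    print(parse_vlan_ranges("physnet1:100:4000"))
```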

physical_network unknown for VLAN provider

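✅ rabbitmq-server fails to install (dependency compatibility)

    dnf config-manager --set-enabled powertools
    dnf --enablerepo=powertools -y install rabbitmq-server

✅ Block Device Mapping is Invalid

When creating an instance in the dashboard, do not create a new volume at the same time: select No, then attach a volume after the instance has been created.

✅ Can not find requested image

    [glance]
    api_servers = http://10.0.1.232:9292

In nova.conf this address must not carry the /v2 suffix; this looks like a bug.

✅ New compute node: not mapped to any cell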

nova-manage cell_v2 discover_hosts --verbose

journalctl -xeu openstack-nova-compute | grep -i error

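Check that the Ceph access nova depends on is working:

    /usr/libexec/platform-python -s /usr/bin/ceph df --format=json --id cinder --conf /etc/ceph/ceph.conf

Check that the neutron-linuxbridge-agent log is clean.

Python source changes must be recompiled to take effect

Recompile config.py and replace the existing config.pyc file: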

python -m py_compile config.py

✅ PortBindingFailed: Binding failed for port

    journalctl -xeu openstack-nova-compute
    journalctl -xeu openstack-nova-api
    journalctl -xeu neutron-linuxbridge-agent
    7月 26 08:52:15 ops-yoga-m1 neutron-linuxbridge-agent[1472536]: oslo_config.cfg.ConfigFilesPermissionDeniedError: Fa>

Wrong permissions on linuxbridge-agent.ini caused the linuxbridge agent process to fail.

✅ Host is blocked because of many connection errors

    SET global max_connect_errors=10000;
    SET global max_connections = 200;
    FLUSH HOSTS;

Alternatively, add host_cache_size = 0 to my.cnf.

✅ Deadlock: wsrep aborted transaction

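After a full day and night of troubleshooting and testing, the following approaches were tried:

- Tuning the MariaDB parameters, including cache sizes and timeouts: performance improved, but the errors did not decrease.

- Tuning the Galera parameters, such as wsrep_slave_threads: performance improved, but the errors could not be avoided.

- Backing up the database, taking one of the three nodes out of the cluster, running it standalone with the data imported, and resetting the pymysql connection pool: the errors dropped noticeably.

- In this setup, clients connecting to several MariaDB Galera nodes at the same time produced conflicts (odd for a cluster mode; it may simply not have been tuned well).

- Both nginx and haproxy were tried as the load balancer in front of the cluster; in testing, haproxy performed far better than nginx (consistent with earlier experience).

Conclusion: put an haproxy load balancer in front of the Galera cluster to shield the cluster and provide health checks.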

global

log 127.0.0.1 local2

chroot /var/lib/haproxy

pidfile /var/run/haproxy.pid

maxconn 10240

user haproxy

group haproxy

daemon

defaults

mode tcp

log global

retries 10

timeout queue 10m

timeout connect 10m

timeout check 30s

listen mysqld-load

bind 10.33.66.1:3306

balance source

server mysqld0 10.33.66.3:3308 check weight 9

server mysqld1 10.33.66.2:3308 check weight 7

server mysqld2 10.33.66.1:3308 check weight 5
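A plain check keyword on an haproxy server line performs a TCP connect test. The sketch below reproduces that kind of probe with Python's standard socket module, which can help when verifying the backends by hand; tcp_check is a hypothetical helper name, and the probe does not confirm that the MariaDB node behind the port is actually synced.

```python
import socket


def tcp_check(host, port, timeout=2.0):
    """Return True if a TCP connection to host:port can be established."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, unreachable hosts and timeouts
        return False
```

For example, tcp_check("10.33.66.3", 3308) probes the first backend from the listen mysqld-load section above.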

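✅ Manually recovering vm_state for nova instances in a wrong state

In day-to-day operations, a physical host failure or a Neutron or Nova service going down can leave instances in the Error state, or stuck in Hard Reboot or Soft Reboot. The nova commands below can recover them.

1. First reset the instance state; the argument can be the instance name or ID.

    # Without --active the state is reset to error
    nova reset-state 06d9d410-***********
    nova reset-state --active 06d9d410-***********

2. Stop the instance

    nova stop 06d9d410-***********

3. Start the instance

    nova start 06d9d410-***********
    nova reboot --hard 06d9d410-***********

✅ Resetting cinder volume state and forcing a detach

    cinder reset-state --state available xxx
    cinder reset-state --state available --attach-status detached xxx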

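✅ Floating IP port forwarding to an instance

✅ MTU size with GRE and VXLAN encapsulation

In VXLAN mode the MTU inside an instance can be at most 1450. A larger value makes openvswitch fragment packets, which causes retransmissions inside the instance and degrades network performance. In GRE mode the maximum instance MTU is 1462.

The values are calculated as follows:

    vxlan mtu = 1450 = 1500 - 20 (IP header) - 8 (UDP header) - 8 (VXLAN header) - 14 (Ethernet header)
    gre mtu = 1462 = 1500 - 20 (IP header) - 4 (GRE header) - 14 (Ethernet header)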
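The same arithmetic can be written as a small function, which is handy when the physical MTU is not 1500 (for example with jumbo frames). This is a minimal sketch; tenant_mtu is a hypothetical name, and the header sizes are the ones used in the calculation above.

```python
# Header sizes in bytes, taken from the calculation above
IP_HEADER = 20
UDP_HEADER = 8
VXLAN_HEADER = 8
GRE_HEADER = 4
ETHERNET_HEADER = 14

OVERHEAD = {
    "vxlan": IP_HEADER + UDP_HEADER + VXLAN_HEADER + ETHERNET_HEADER,
    "gre": IP_HEADER + GRE_HEADER + ETHERNET_HEADER,
}


def tenant_mtu(physical_mtu, encapsulation):
    """Largest MTU an instance can use without fragmentation on the underlay."""
    return physical_mtu - OVERHEAD[encapsulation]


if __name__ == "__main__":
    for encap in ("vxlan", "gre"):
        print(encap, tenant_mtu(1500, encap))
```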

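The Neutron DHCP agent can be configured so that instances pick up the MTU automatically:

    # In the DHCP agent configuration (dhcp_agent.ini)
    dnsmasq_config_file = /etc/neutron/dnsmasq-neutron.conf

    vi /etc/neutron/dnsmasq-neutron.conf
    dhcp-option-force=26,1450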

List floating IPs

    openstack floating ip list

List instance IPs

    openstack server list

List the ports of an instance

    openstack port list --server ${SERVER_ID} -c id -f value

Create a port forwarding

    openstack floating ip port forwarding create --internal-ip-address 192.168.2.167 --port f3b67c8c-9f39-42ca-a0cd-f131121db8d4 --protocol tcp --internal-protocol-port 22 --external-protocol-port 60122 112.13.174.28

List the port forwardings of a floating IP

    openstack floating ip port forwarding list 1.2.3.4

no attribute 'X509_V_FLAG_CB_ISSUER_CHECK'

The main cause is a version mismatch between the installed Python environment and pyOpenSSL.

Fix:

    pip3 install -U pyOpenSSL

✅ libvirtError

A PCIe card has dropped off the bus and scheduling fails with "no host valid"

    journalctl -xeu libvirtd | grep virPCIDeviceNew
    Feb 05 15:03:33 gpu-01.service.yiw libvirtd[1190917]: 1190983: error : virPCIDeviceNew:1478 : Device 0000:4e:00.0 not found: could not access /sys/bus/pci/devices/0000:4e:00.0/config: No such file or directory
    Feb 05 15:03:33 gpu-01.service.yiw libvirtd[1190917]: 1190983: error : virPCIDeviceNew:1478 : Device 0000:4e:00.1 not found: could not access /sys/bus/pci/devices/0000:4e:00.1/config: No such file or directory

    lspci -v -s 0000:4e:00 | grep 'Physical Slot'
    Physical Slot: 2-1

    # Power-cycle the slot
    echo 0 > /sys/bus/pci/slots/2-1/power
    echo 1 > /sys/bus/pci/slots/2-1/power

Secret not found: no secret with matching uuid

    # MY_UUID must match rbd_secret_uuid in cinder.conf
    cat > /root/ceph_secret_virsh.xml <<EOF
    <secret ephemeral='no' private='no'>
      <uuid>$MY_UUID</uuid>
      <usage type='ceph'>
        <name>client.cinder secret</name>
      </usage>
    </secret>
    EOF

    # Push the keyring and secret definition to every hypervisor
    for node in $(openstack hypervisor list -c "Hypervisor Hostname" -f value); do
        scp /etc/ceph/client.cinder.keyring $node:/etc/ceph/
        scp /root/ceph_secret_virsh.xml $node:/root/
        echo -en "${YELLOW_COL} ------------- Virsh Patch $node -------------${NORMAL_COL}\n"
        ssh $node "virsh secret-define --file /root/ceph_secret_virsh.xml; virsh secret-set-value --secret $MY_UUID --base64 \$(awk '/key/{print \$NF}' /etc/ceph/client.cinder.keyring) ; virsh secret-list"
    done

✅ Purging instance and image records from the database

    #!/bin/bash
    PASS="upyunxxxx"
    UUID=$1

    cls_nova(){
        DBS="nova"
        UUID=$1
        for DB in $DBS; do
            # Dump one row per INSERT so that grep finds the tables holding the UUID
            CMD="mysqldump -uroot -p$PASS --skip-extended-insert $DB"
            $CMD > $DB.sql
            TABLES=$(grep $UUID $DB.sql | awk '{print $3}' | sort -ur)
            for table in $TABLES; do
                table=$(echo $table | sed -r 's@`@@g')
                echo $table
                #mysql -uroot -p$PASS $DB -e "desc $table"
                if [ "$table" == "instance_id_mappings" -o "$table" == "instances" ]; then
                    key=uuid
                else
                    key=instance_uuid
                fi
                mysql -uroot -p$PASS $DB -e "select count(*) from $table where $key=\"$UUID\""
                # Uncomment to actually delete
                #mysql -uroot -p$PASS $DB -e "SET FOREIGN_KEY_CHECKS=0; delete from $table where $key=\"$UUID\"; SET FOREIGN_KEY_CHECKS=1;"
            done
        done
    }

    cls_glance(){
        DBS="glance"
        for DB in $DBS; do
            CMD="mysqldump -uroot -p$PASS --skip-extended-insert $DB"
            $CMD > $DB.sql
            TABLES=$(grep $UUID $DB.sql | awk '{print $3}' | sort -ur)
            for table in $TABLES; do
                table=$(echo $table | sed -r 's@`@@g')
                echo $table
                mysql -uroot -p$PASS $DB -e "desc $table"
                if [ "$table" == "images" ]; then
                    key=id
                else
                    key=image_id
                fi
                mysql -uroot -p$PASS $DB -e "select count(*) from $table where $key=\"$UUID\""
                # Uncomment to actually delete
                #mysql -uroot -p$PASS $DB -e "SET FOREIGN_KEY_CHECKS=0; delete from $table where $key=\"$UUID\"; SET FOREIGN_KEY_CHECKS=1;"
            done
        done
    }

    cls_glance
    #cls_nova "$UUID"
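The script above finds affected tables by grepping a mysqldump taken with --skip-extended-insert and printing the third field of each matching line. The sketch below shows the same idea in Python; tables_referencing is a hypothetical helper, and unlike the grep and awk pipeline it only accepts lines that start with INSERT INTO, so stray matches in comments or DDL are ignored.

```python
def tables_referencing(dump_lines, uuid):
    """Return the sorted table names whose INSERT rows mention uuid."""
    tables = set()
    for line in dump_lines:
        if uuid not in line:
            continue
        fields = line.split()
        # Expected shape: INSERT INTO `table` VALUES (...);
        if len(fields) >= 3 and fields[0] == "INSERT" and fields[1] == "INTO":
            tables.add(fields[2].strip("`"))
    return sorted(tables)


if __name__ == "__main__":
    # Made-up sample rows for illustration only
    sample = [
        "INSERT INTO `instances` VALUES (1,'abc-123','demo');",
        "INSERT INTO `instance_info_caches` VALUES (7,'abc-123');",
        "INSERT INTO `instances` VALUES (2,'zzz-999','other');",
        "-- note about abc-123",
    ]
    print(tables_referencing(sample, "abc-123"))
```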

✅ ConflictNovaUsingAttachment: Detach

Aug 12 11:31:28 ccn-01.service.yoga nova-compute[1340408]: 2023-08-12 11:31:28.608 1340408 ERROR nova.volume.cinder [req-4f63de2e-ee43-4d88-a191-768ee16b2ef0 7fabcbb084c84ec6ac89af7a85b3d6f2 7be91688344a4457af9e670e051089a9 - default default] Delete attachment failed for attachment 972faa64-0a35-40f4-ac76-d75ad866d0af. Error: ConflictNovaUsingAttachment: Detach volume from instance 6e942805-13b4-4297-a691-ed956b85cb01 using the Compute API (HTTP 409) (Request-ID: req-66735ff4-9cf4-43e8-bb8f-5cb4c5f0569d) Code: 409: cinderclient.exceptions.ClientException: ConflictNovaUsingAttachment: Detach volume from instance 6e942805-13b4-4297-a691-ed956b85cb01 using the Compute API (HTTP 409) (Request-ID: req-66735ff4-9cf4-43e8-bb8f-5cb4c5f0569d)

🆘 Reclaiming volumes that cannot be detached normally, by script

    #!/bin/bash
    readonly passwd="upyunxxxx"

    # Load the admin credentials
    [ -s /var/lib/keystone/ks_rc_admin ] && source /var/lib/keystone/ks_rc_admin
    [ -s ~/.easystackrc ] && source ~/.easystackrc

    # Volumes stuck in detaching, reserved or attaching: force them back to available
    #for vol_id in a77d32d7-4a8f-4655-b3a6-58cf1726722e;do
    for vol_id in $(openstack volume list -f value -c ID -c Status | awk '/detaching/ || /reserved/ || /attaching/ {print $1}'); do
        #instance_id=$(openstack --os-volume-api-version 3.37 volume attachment list -c "Server ID" -c "Volume ID" -f value | awk '/'"$vol_id"'/{print $NF}')
        #nova volume-detach instance_id volume_id
        echo "detached volume $vol_id"
        cinder reset-state --state available --attach-status detached $vol_id
        mysql -ucinder -p$passwd cinder -e "delete from volume_attachment where volume_id=\"$vol_id\""
        mysql -unova -p$passwd nova -e "delete from block_device_mapping where volume_id=\"$vol_id\""
    done

    # Volumes still reported as reserved are marked in-use and attached
    for vol_id in $(openstack volume list -f value -c ID -c Status | awk '/ reserved/{print $1}'); do
        echo "attached volume $vol_id"
        cinder reset-state --state in-use --attach-status attached $vol_id
    done
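The awk patterns in the script above match the status words anywhere on the line. A stricter selection compares the status column exactly; the sketch below does that on the text printed by openstack volume list -f value -c ID -c Status. The name stuck_volume_ids and the sample IDs are hypothetical.

```python
STUCK_STATES = {"detaching", "attaching", "reserved"}


def stuck_volume_ids(listing):
    """Return the IDs of volumes whose status is exactly one of STUCK_STATES.

    listing holds one "ID STATUS" pair per line.
    """
    ids = []
    for line in listing.splitlines():
        fields = line.split()
        if len(fields) == 2 and fields[1] in STUCK_STATES:
            ids.append(fields[0])
    return ids


if __name__ == "__main__":
    # Made-up listing for illustration only
    sample = "vol-1 available\nvol-2 detaching\nvol-3 in-use\nvol-4 reserved\n"
    print(stuck_volume_ids(sample))
```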

Glance image error

RADOS permission denied

Check that the cinder volume services are healthy:

    openstack volume service list

volume Could not find any available weighted backend

Check the logs under /var/log/cinder/; most likely the VG or LV name or size is wrong.

An LVM volume could not be deleted for a long time and resetting its state did not help; restarting cinder fixed it.

    send_service_user_token = True

✅ Dashboard error: Invalid service catalog service

✅ Network partition detected

A network problem caused a split-brain in the cluster. Temporary workaround:

    Mnesia reports that this RabbitMQ cluster has experienced a network partition. There is a risk of losing data

    # On the affected node:
    sbin/rabbitmqctl stop_app
    sbin/rabbitmqctl start_app

the scheduler has made an allocation against this compute node but the instance has yet to start

✅ ResourceProviderCreationFailed: Failed to create resource provider

A brute-force fix follows.

After ruling out neutron-linuxbridge-agent (the network was fine), the nova-compute log shows that a new resource provider cannot be created (a UUID conflict?).

So delete the old records first, then run probe_kvm again.

    journalctl -xeu openstack-nova-compute -f

    #!/bin/bash
    # Colors
    readonly RED_COL="\\033[1;31m"     # red color
    readonly GREEN_COL="\\033[32;1m"   # green color
    readonly BLUE_COL="\\033[34;1m"    # blue color
    readonly YELLOW_COL="\\033[33;1m"  # yellow color
    readonly NORMAL_COL="\\033[0;39m"
    PASS="xxxxxx"

    # Delete every nova row that references an instance UUID
    cls_instance(){
        [ -z $2 ] && echo -e "$0 $1 ${GREEN_COL}uuid ${NORMAL_COL}" && exit 0
        ID=$2
        DBS="nova"
        for DB in $DBS; do
            CMD="mysqldump -uroot -p$PASS --skip-extended-insert $DB"
            $CMD > $DB.sql
            echo $ID
            TABLES=$(grep $ID $DB.sql | awk '{print $3}' | sort -ur)
            echo $TABLES---------
            for table in $TABLES; do
                table=$(echo $table | sed -r 's@`@@g')
                echo $table
                #mysql -uroot -p$PASS $DB -e "desc $table"
                if [ "$table" == "instance_id_mappings" -o "$table" == "instances" ]; then
                    key=uuid
                else
                    key=instance_uuid
                fi
                #mysql -uroot -p$PASS $DB -e "select count(*) from $table where $key=\"$ID\""
                mysql -uroot -p$PASS $DB -e "SET FOREIGN_KEY_CHECKS=0; delete from $table where $key=\"$ID\"; SET FOREIGN_KEY_CHECKS=1;"
            done
        done
    }

    # Delete every glance row that references an image UUID
    cls_glance(){
        [ -z $2 ] && echo -e "$0 $1 ${GREEN_COL}uuid ${NORMAL_COL}" && exit 0
        ID=$2
        DBS="glance"
        for DB in $DBS; do
            CMD="mysqldump -uroot -p$PASS --skip-extended-insert $DB"
            $CMD > $DB.sql
            TABLES=$(grep $ID $DB.sql | awk '{print $3}' | sort -ur)
            for table in $TABLES; do
                table=$(echo $table | sed -r 's@`@@g')
                echo $table" -------------------------"
                mysql -uroot -p$PASS $DB -e "desc $table"
                if [ "$table" == "images" ]; then
                    key=id
                else
                    key=image_id
                fi
                #mysql -uroot -p$PASS $DB -e "select count(*) from $table where $key=\"$ID\""
                mysql -uroot -p$PASS $DB -e "SET FOREIGN_KEY_CHECKS=0; delete from $table where $key=\"$ID\"; SET FOREIGN_KEY_CHECKS=1;"
            done
        done
    }

    # Delete every row that references a compute hostname
    cls_compute(){
        [ -z $2 ] && echo -e "$0 $1 ${GREEN_COL}hostname ${NORMAL_COL}" && exit 0
        ID=$2
        DBS="nova nova_api placement"
        for DB in $DBS; do
            CMD="mysqldump -uroot -p$PASS --skip-extended-insert $DB"
            $CMD > $DB.sql
            TABLES=$(grep $ID $DB.sql | awk '{print $3}' | sort -ur)
            for table in $TABLES; do
                table=$(echo $table | sed -r 's@`@@g')
                if [ "$table" == "resource_providers" ]; then
                    key=name
                elif [ "$table" == "migrations" ]; then
                    key=source_compute
                else
                    key=host
                fi
                echo $ID -- $DB -- $table -- $key
                mysql -uroot -p$PASS $DB -e "SET FOREIGN_KEY_CHECKS=0; delete from $table where $key=\"$ID\"; SET FOREIGN_KEY_CHECKS=1;"
            done
        done
    }

    case $1 in
        cls_glance) cls_glance $*;;
        cls_instance) cls_instance $*;;
        cls_compute) cls_compute $*;;
        *) echo -e "${RED_COL}$0 ${YELLOW_COL}cls_glance|cls_instance|cls_compute${NORMAL_COL}";;
    esac

🏆✅ The proper way to clean up compute nodes and instances

    openstack server list | awk '/tenant=/{print $2,$4}' > server.list

    #!/bin/bash
    # list_nouse: presumably a file listing the hypervisor hostnames being retired
    while read host; do
        read -r tid t <<< $host
        h_host=$(openstack server show $tid | awk '/hypervisor_hostname/{print $(NF-1)}')
        grep -wq $h_host list_nouse
        if [ $? = 0 ]; then
            echo "-----> delete $tid $t"
            #openstack server delete $tid
        fi
    done < server.list

    # Remove compute services that are down and dead network agents
    openstack compute service list | awk '/down/{print $2}' | xargs -i openstack compute service delete {}
    openstack network agent list | awk '/XXX/{print $2}' | xargs -i openstack network agent delete {}

    #!/bin/sh
    # Disable and remove cinder services that are down on the gpu nodes
    types="cinder-scheduler cinder-backup cinder-volume"
    for type in $types; do
        for node in $(openstack volume service list | awk '/'"$type"'.*gpu.*down/{print $4}'); do
            echo "$type -> $node"
            openstack volume service set --disable $node $type
            cinder-manage service remove $type $node
        done
    done

🏆 ✅ Procedure for adding a compute node

    cpupower idle-set -D 0
    cpupower frequency-set -g performance

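- autoset_net.sh (all nodes) → switch the network configuration to QinQ mode (optional)

- 4batchadd_node.sh → batch-generate the easystackrc for each node (generated on the controller and pushed out; copying by hand works but is tedious)

- easyStack.sh adjust_sys (all nodes)

- easyStack.sh mkinitrd_vfio (compute nodes) rebuild the kernel initrd → dmesg|grep iommu -c

- 1makenvmelvm.sh (all nodes) → LVM setup script

- Preferably reboot the machine so the changes take effect (optional)

- 0corednssupervisord.sh → install coredns (controller)

- 2consulservice.sh: deploy consul for host discovery and DNS resolution → (server on the controller nodes, agent elsewhere → consul members)

- easyStack.sh control_init (controller: deploy the mysql, rabbitmq and memcache clusters)

- 2consulservice.sh: deploy consul service discovery for the database and message queue (controller)

- easyStack.sh keys_init (controller). Note: sync the fernet keys to every controller node and restart httpd

- easyStack.sh gls_init (controller)

- easyStack.sh cinder_init (all nodes)

- easyStack.sh nova_init (all nodes; the controller copy has modifications)

- easyStack.sh probe_gpu → nova.conf (all nodes)

- easyStack.sh neutron_init (all nodes)

- easyStack.sh probe_host (controller)

- Configure Ceph distributed storage (server on the controller nodes, agent elsewhere)

- easyStack.sh probe_ceph (controller)

- Enable keepalived (controller)

- Enable NVMe RDMA and add the boot-time script (all nodes)

- Change the VNC address to the public address

- Configure scheduled jobs and health checks (all nodes)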

Are the RBD configuration and authorization in Ceph (probe_ceph) correct?

Items that must be checked:

- VT-d and NUMA are enabled in the BIOS, and pci=realloc is set in GRUB

- nova (controller) / cinder / os_brick (zed) need to be patched or upgraded

- haproxy forwarding ports must not conflict

- Launch CPU and GPU instances to verify that the whole flow works

    dmesg | grep -c iommu
    dmesg | grep -wE 'vfio_iommu_type1|realloc'
    grep -E 'novncproxy_base|passthrough_' /etc/nova/nova.conf
    grep -w service_plugins /etc/neutron/neutron.conf
    grep can_disconnect /usr/lib/python3.6/site-packages/os_brick/initiator/connectors/nvmeof.py
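The grep checks above only show whether a string occurs somewhere in the file. A slightly stricter check confirms that an option is set in the expected section. The sketch below uses Python's standard configparser for that; missing_options is a hypothetical helper, and placing novncproxy_base_url under [vnc] and passthrough_whitelist under [pci] is an assumption about where this deployment sets them.

```python
import configparser


def missing_options(conf_text, required):
    """Return the (section, option) pairs from required that are absent or empty."""
    # strict=False tolerates repeated options; interpolation=None keeps '%' literal
    parser = configparser.ConfigParser(strict=False, interpolation=None)
    parser.read_string(conf_text)
    missing = []
    for section, option in required:
        value = parser.get(section, option, fallback="")
        if not value.strip():
            missing.append((section, option))
    return missing


if __name__ == "__main__":
    # Made-up nova.conf fragment for illustration only
    sample = "[vnc]\nnovncproxy_base_url = https://vnc.example.invalid/vnc_auto.html\n[pci]\n"
    required = [("vnc", "novncproxy_base_url"), ("pci", "passthrough_whitelist")]
    print(missing_options(sample, required))
```

To run it against a real file, read the text of /etc/nova/nova.conf and pass it as conf_text.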

    mkvnc(){
        #ngb1.dc.huixingyun.com:6081/vnc_auto.html
        sed -r -i '/novncproxy_base_url/s@=.*@= https://xxxx.s.upyun.com/vnc_auto.html@g' /etc/nova/nova.conf
        systemctl daemon-reload
        systemctl restart openstack-nova-compute
    }

Get the VNC URL of an instance:

nova get-vnc-console ad42e72d-59de-4907-9d7a-a6157a5b8e10 novnc