Automatic Service Discovery and Registration with Consul

References

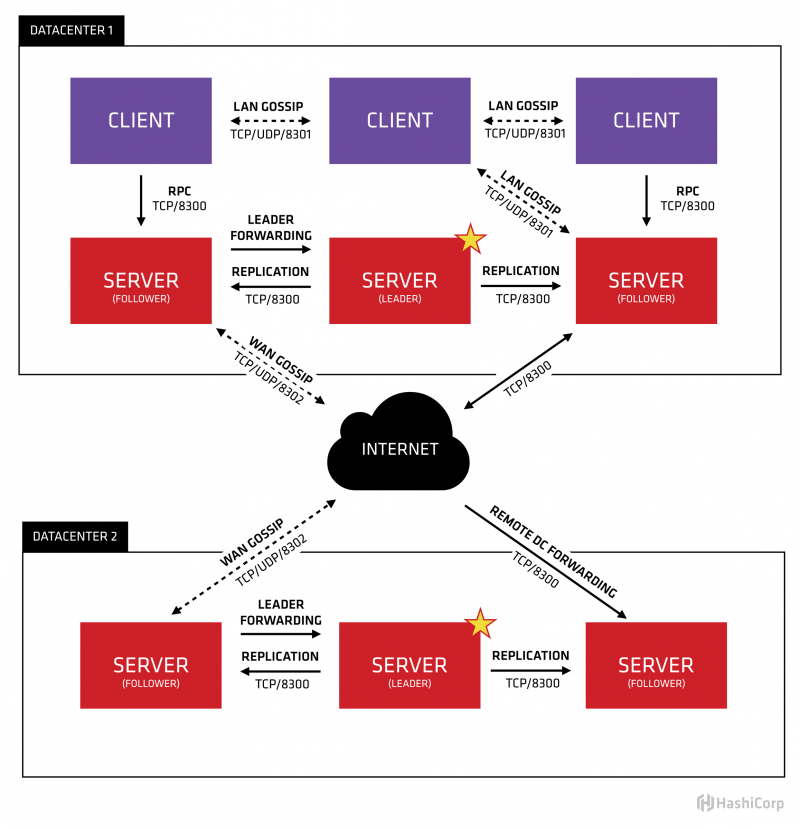

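Consul uses the following ports by default:

- 8300 (tcp): Server RPC, used by servers to handle requests from other agents

- 8301 (tcp, udp): Serf LAN, gossip inside the datacenter (membership, failure detection, event broadcast); required by every agent

- 8302 (tcp, udp): Serf WAN, gossip between servers across datacenters

- 8400 (tcp): CLI RPC, accepts RPC calls from the command line (legacy; newer Consul versions no longer open this port and the CLI uses the HTTP API instead)

- 8500 (tcp): HTTP API and web UI

- 8600 (tcp, udp): DNS interface; it can be configured on port 53 to answer DNS queries directly, and -dns-port=-1 disables it (client agents do not need 8600)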
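On hosts that run firewalld, the port list above can be turned into firewall rules. The sketch below only prints firewall-cmd commands for review. It assumes firewalld, omits the legacy 8400 port, and treats 8300 and 8302 as server-only, following the descriptions above. The function name consul_firewall_cmds is hypothetical.

```bash
#!/usr/bin/env bash
# Print (not execute) firewall-cmd commands that open Consul's default ports.
# Assumption: the host uses firewalld; review the output before running it.

# consul_firewall_cmds ROLE   (ROLE is "server" or "client")
consul_firewall_cmds() {
  local role=$1 p
  # Needed on every agent: LAN gossip, HTTP API/UI, DNS.
  local ports=(8301/tcp 8301/udp 8500/tcp 8600/tcp 8600/udp)
  if [ "$role" = "server" ]; then
    # Server-only: server RPC and WAN gossip.
    ports+=(8300/tcp 8302/tcp 8302/udp)
  fi
  for p in "${ports[@]}"; do
    echo "firewall-cmd --permanent --add-port=$p"
  done
  echo "firewall-cmd --reload"
}
```

Once the printed commands look right, for example, consul_firewall_cmds server | sh applies the server rules.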

Building a three-node Consul cluster

Running in containers

Check cluster membership and the Raft peer set:

consul members -http-addr 192.168.144.1:8500

consul operator raft list-peers -http-addr=192.168.13.229:8500
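Monitoring or deployment scripts can parse the list-peers output instead of reading it by eye. The sketch below assumes the column order Node, ID, Address, State, Voter, RaftProtocol printed by recent Consul versions. Some versions append further columns at the end, and this parsing ignores them. Check the column order against the output of your version. The helper names are hypothetical.

```bash
#!/usr/bin/env bash
# Helpers that read the output of `consul operator raft list-peers` on stdin.
# Assumed columns: Node ID Address State Voter RaftProtocol [...]

# Print the Node name of the current Raft leader (prints nothing if there is none).
raft_leader() {
  awk 'NR > 1 && $4 == "leader" { print $1 }'
}

# Print how many peers are voters.
raft_voter_count() {
  awk 'NR > 1 && $5 == "true" { n++ } END { print n + 0 }'
}
```

For example, consul operator raft list-peers -http-addr=192.168.13.229:8500 | raft_leader prints the leader's node name. On a healthy three-node cluster, raft_voter_count should print 3.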

Running from the binary: installation and configuration

sudo yum install -y yum-utils
sudo yum-config-manager --add-repo https://rpm.releases.hashicorp.com/RHEL/hashicorp.repo
sudo yum -y install consul

#!/bin/sh
# This host's address on the 192.168.30.0/24 network
IP=$(ip a|awk '/192.168.30/{split($2,a,"/");print a[1]}'|sort -u|head -1)

install(){
    yum install -y yum-utils
    yum-config-manager --add-repo https://rpm.releases.hashicorp.com/RHEL/hashicorp.repo
    yum -y install consul
}

# Client (agent) configuration
agent(){
cat > /etc/consul.d/consul.hcl <<EOF
datacenter = "upyun"
domain = "consul"
data_dir = "/opt/consul"
ui_config {
  enabled = false
}
server = false
enable_local_script_checks = true
client_addr = "$IP"
bind_addr = "$IP"
node_name = "$IP"
retry_join = ["192.168.30.17","192.168.30.18","192.168.30.19"]
EOF
}

# Server configuration (named server, not consul, so it does not shadow the consul binary)
server(){
    rm -rf /etc/consul.d/consul.json
    sed -r -i 's@consul.json@consul.hcl@g' /usr/lib/systemd/system/consul.service
cat > /etc/consul.d/consul.hcl <<EOF
datacenter = "upyun"
domain = "consul"
data_dir = "/opt/consul"
ui_config {
  enabled = true
}
server = true
bootstrap_expect = 3
enable_local_script_checks = true
leave_on_terminate = true
rejoin_after_leave = true
dns_config {
  enable_truncate = true
  only_passing = true
}
client_addr = "$IP"
bind_addr = "$IP"
node_name = "$IP"
retry_join = ["192.168.30.17","192.168.30.18","192.168.30.19"]
EOF
}

service(){
    systemctl daemon-reload
    # Disable services these hosts do not need
    for ss in cups cupsd rpcbind rpcbind.service rpcbind.socket; do
        systemctl disable $ss
        systemctl stop $ss
    done
    for ss in consul; do
        systemctl enable --now $ss
        #systemctl restart $ss
    done
}

# Register a TCP-checked service: consul_service NAME PORT
consul_service(){
cat > /etc/consul.d/$1.hcl <<EOF
service {
  name = "$1"
  check = {
    tcp = "$IP:$2"
    interval = "10s"
    timeout = "1s"
  }
}
EOF
    curl --request PUT http://$IP:8500/v1/agent/reload
}

# Register the host itself as a service, checked on the sshd port
consul_hostname(){
    port=$(ss -tnpl|awk '/sshd/{split($4,a,":");if(a[2]!="") print a[2]}')
    hostname=$(hostname)
cat > /etc/consul.d/hostname.hcl <<EOF
service {
  name = "${hostname%.service.yoga}"
  check = {
    tcp = "$IP:$port"
    interval = "10s"
    timeout = "1s"
  }
}
EOF
    curl --request PUT http://$IP:8500/v1/agent/reload
}

change_dns(){
cat >/etc/resolv.conf <<EOF
options timeout:1 attempts:1 single-request-reopen rotate
nameserver 10.33.66.1
nameserver 10.33.66.2
nameserver 10.33.66.3
nameserver 10.33.66.4
nameserver 10.33.66.5
EOF
    ls /etc/sysconfig/network-scripts/ifcfg-eth*|xargs sed -r -i '/PEERDNS=/s@=.*@=no@g'
}

get_name_ip(){
    IP=$(ip a|sed -r -n '/LOWER_UP/{n;n;p}'|awk '/inet/{split($2,a,"/");{if(a[1] ~ "192.168.30.") print a[1]}}')
    echo $IP `hostname`
}

#service
#find /opt/consul/ -type f|xargs rm -rf
#server
#agent
#consul_service kafka 9092
#consul_service es 9201
#systemctl daemon-reload
#systemctl restart consul
#change_dns
get_name_ip

Service discovery and health checks (HCL)

service {
  name = "mysql"
  check = {
    tcp = "192.168.30.7:3306"
    interval = "10s"
    timeout = "1s"
  }
}
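Definitions like this can also be generated instead of written by hand, in the spirit of the consul_service function in the script above. The sketch below is minimal. render_tcp_service is a hypothetical name, and the 10s interval and 1s timeout simply mirror the example.

```bash
#!/usr/bin/env bash
# render_tcp_service NAME ADDRESS PORT
# Print an HCL service definition with a TCP health check (10s interval, 1s timeout).
render_tcp_service() {
  local name=$1 addr=$2 port=$3
  # Reject an empty or non-numeric port before anything is written to /etc/consul.d.
  case $port in
    ''|*[!0-9]*) echo "invalid port: $port" >&2; return 1 ;;
  esac
  cat <<EOF
service {
  name = "$name"
  check = {
    tcp      = "$addr:$port"
    interval = "10s"
    timeout  = "1s"
  }
}
EOF
}
```

Typical use is render_tcp_service mysql 192.168.30.7 3306 > /etc/consul.d/mysql.hcl, then consul validate /etc/consul.d, then a reload. These configs set client_addr to the host IP, so CLI commands such as consul reload need -http-addr=<ip>:8500.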

Consul CLI commands

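- acl: interact with Consul's ACL system

- agent: run a Consul agent

- catalog: interact with Consul's catalog

- config: interact with Consul's configuration entries

- connect: interact with Consul's Connect subsystem

- debug: record a debugging archive for operators

- event: fire a new event

- exec: execute a command on Consul nodes

- force-leave: force a member of the cluster into the left state; generally used to remove nodes that failed or were shut down without leaving gracefully

- info: provide debugging information for operators

- intention: interact with Connect intentions, which control access between services

- join: tell a Consul agent to join the cluster

- keygen: generate a new encryption key

- keyring: inspect and modify the encryption keys used in the gossip pool

- kv: interact with the key/value store

- leave: make the Consul agent leave the cluster gracefully and shut down

- lock: create a lock at a given prefix in the key/value store

- login: exchange third-party credentials, through the requested auth method, for a newly created Consul ACL token

- logout: destroy a Consul token created with consul login

- maint: control maintenance mode for nodes or services

- members: list all members of the Consul cluster

- monitor: connect to a running Consul agent and stream its logs

- operator: cluster-level tools for Consul operators

- reload: trigger a reload of the agent's configuration files

- rtt: estimate the network round-trip time between two nodes

- services: interact with registered services

- snapshot: save, restore, and inspect Consul server state for disaster recovery

- tls: built-in helpers for creating the CA and certificates for Consul TLS

- validate: perform a sanity check on Consul configuration files or directories

- version: show Consul version information

- watch: watch for changes in a specific view of Consul data (node list, service members, key/value, etc.)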

Consul configuration flags

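- -join consul.service.upyun: join the cluster through the DNS name consul.service.upyun (the built-in consul service under the domain upyun), which must be resolvable from the host

- -enable-script-checks: allow script-based health checks. This also permits script checks registered through the HTTP API, so where the API is reachable from other hosts, -enable-local-script-checks (script checks from local config files only) is the safer choice

- -server: run in server mode; without -server the agent runs in client mode

- -bootstrap-expect=5: the number of servers expected in the datacenter; the servers wait until this many have joined before bootstrapping. Requires -server

- -datacenter: the name of the datacenter

- -config-dir: the configuration directory; Consul loads every .json and .hcl file in it

- -data-dir: the directory where the agent keeps its runtime state

- -node: the node name, which must be unique in the cluster; defaults to the hostname

- -bind: the address used for internal cluster communication; every other node in the cluster must be able to reach it

- -client: the address on which the client interfaces (HTTP, DNS) listen. The default 127.0.0.1 is not reachable from other hosts; set it to 0.0.0.0 (or a specific address) to serve other machines

- -rejoin: ignore a previous leave and try to rejoin the cluster on restart

- -retry-interval: the wait between join attempts

- -retry-join (config key retry_join): addresses to join, retried until one succeeds, e.g. retry_join = ["10.201.102.198", "10.201.102.199", "10.201.102.200"]

- start_join (config key; the command-line form is -join): an array of node addresses to join at startup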
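-bootstrap-expect also sets the number of voting servers from which the Raft quorum is computed. With n voters, a quorum is floor(n/2)+1, and the cluster survives n minus quorum server failures. This is why odd sizes such as 3 or 5 are common. The three-server cluster above tolerates one failed server, and a five-server cluster tolerates two. A small helper that prints the arithmetic (the function names are illustrative):

```bash
#!/usr/bin/env bash
# Raft quorum arithmetic for N voting servers (bash integer division rounds down).
raft_quorum() {
  echo $(( $1 / 2 + 1 ))
}

raft_fault_tolerance() {
  echo $(( $1 - ($1 / 2 + 1) ))
}

# Print a small table for common cluster sizes.
for n in 1 2 3 4 5; do
  printf '%d servers: quorum %d, tolerates %d failure(s)\n' \
    "$n" "$(raft_quorum "$n")" "$(raft_fault_tolerance "$n")"
done
```

The table also shows that a fourth server adds no fault tolerance over three.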

Running a Consul agent

IPADDR="192.168.13.167"
docker run -d --name consul-agent -h $IPADDR \
  -p $IPADDR:8400:8400 \
  -p $IPADDR:8500:8500 \
  -p $IPADDR:8600:53/udp \
  progrium/consul -join 192.168.13.250

Deploying registrator for automatic registration

CONSUL_IP="192.168.13.250"
HOST_IP="192.168.13.167"
docker run -d --name registrator \
  -v /var/run/docker.sock:/tmp/docker.sock \
  -h $HOST_IP gliderlabs/registrator \
  consul://$CONSUL_IP:8500

Registrator must run on every node that runs dockerd. It connects the Docker API to Consul's HTTP API on port 8500.

Consul node health checks

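# List members
consul members -http-addr 127.0.0.1:8500
# Show the state of each Raft peer
consul operator raft list-peers -http-addr=127.0.0.1:8500
# Show the current leader
curl 127.0.0.1:8500/v1/status/leader
# Resolve a host registered as a service, directly through Consul DNS
dig ccm-01.service.iqn @127.0.0.1 -p8600
# Resolve the same name through CoreDNS once it forwards the domain to Consul
dig ccm-01.service.iqn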
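The /v1/status/leader endpoint returns the leader's Raft address as a JSON string such as "192.168.13.250:8300", and an empty string "" while the cluster has no leader. Below is a sketch of a check built on that, suitable for cron or a monitoring system. The function names and the exit codes (0 leader present, 1 no leader, 2 API unreachable) are assumptions of this sketch.

```bash
#!/usr/bin/env bash
# Strip the surrounding JSON quotes from the /v1/status/leader response.
parse_leader() {
  sed -e 's/^"//' -e 's/"$//'
}

# check_leader [HTTP_ADDR]   (defaults to 127.0.0.1:8500)
# Returns 0 if a leader is known, 1 if the leader is empty, 2 if the API call fails.
check_leader() {
  local addr=${1:-127.0.0.1:8500} raw leader
  raw=$(curl -fsS --max-time 3 "http://$addr/v1/status/leader") || return 2
  leader=$(printf '%s' "$raw" | parse_leader)
  if [ -z "$leader" ]; then
    echo "no leader"
    return 1
  fi
  echo "leader: $leader"
}
```

For example, check_leader 192.168.13.229:8500 checks a server whose client_addr is not 127.0.0.1.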

Adding and removing Consul servers

/usr/local/consul/conf/server.json

{
  "server": true,
  "node_name": "192.168.13.232",
  "bind_addr": "192.168.13.232",
  "client_addr": "192.168.13.232",
  "datacenter": "upyun-consul",
  "domain": "upyun",
  "leave_on_terminate": false,
  "skip_leave_on_interrupt": true,
  "rejoin_after_leave": true,
  "retry_join": [
    "192.168.13.229:8301",
    "192.168.13.250:8301",
    "192.168.13.230:8301",
    "192.168.13.231:8301",
    "192.168.144.1:8301",
    "192.168.144.2:8301"
  ]
}

[program:consul]
command = /usr/local/consul/consul agent -config-dir /usr/local/consul/conf -data-dir /disk/ssd1/consul/data -log-level info
stopsignal = INT
autostart = true
autorestart = true
startsecs = 3
stdout_logfile_maxbytes = 104857600
stdout_logfile_backups = 3
stdout_logfile = /disk/ssd1/logs/supervisor/consul.info.log
stderr_logfile = /disk/ssd1/logs/supervisor/consul.error.log
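To add a server, start an agent with a config like the one above, and retry_join brings it into the cluster. For removal, one reasonable sequence (a sketch, not an official runbook) starts with consul leave on the server being removed, so that it leaves gracefully. This config sets skip_leave_on_interrupt and supervisor stops Consul with SIGINT, so supervisorctl stop alone does not make the server leave. For a server that is already dead, consul force-leave <node> removes it from gossip. If it is still listed as a Raft peer, consul operator raft remove-peer drops it from the peer set. The helpers below are hypothetical and assume list-peers prints the peer Address in its third column.

```bash
#!/usr/bin/env bash
# raft_has_peer ADDRESS   (e.g. 192.168.13.232:8300)
# Reads `consul operator raft list-peers` output on stdin; exits 0 if ADDRESS is a peer.
raft_has_peer() {
  awk -v want="$1" 'NR > 1 && $3 == want { found = 1 } END { exit !found }'
}

# remove_dead_server NODE ADDRESS HTTP_ADDR
# Force the node out of gossip, then drop it from Raft if it is still listed.
remove_dead_server() {
  local node=$1 addr=$2 http=$3
  consul force-leave -http-addr="$http" "$node"
  if consul operator raft list-peers -http-addr="$http" | raft_has_peer "$addr"; then
    consul operator raft remove-peer -http-addr="$http" -address="$addr"
  fi
}
```

For a graceful removal of 192.168.13.232, run consul leave -http-addr=192.168.13.232:8500 on that server before stopping it under supervisor.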

Consul agent: automatic registration and health checks

Running the agent

[program:consul]
command = /usr/local/consul/consul agent -node 192.168.147.15 -bind 192.168.147.15 -client 192.168.147.15 -datacenter upyun-consul -join consul.service.upyun -config-dir /usr/local/consul/conf -data-dir /disk/ssd1/consul/data -dns-port=-1 -enable-script-checks -log-level err
stopsignal = INT
autostart = true
autorestart = true
startsecs = 5
stdout_logfile_maxbytes = 104857600
stdout_logfile_backups = 3
stdout_logfile = /disk/ssd1/logs/supervisor/consul.info.log
stderr_logfile = /disk/ssd1/logs/supervisor/consul.error.log

Services to register

galera-mysql.json

{
  "service": {
    "name": "galera-mysql",
    "checks": [
      {
        "tcp": "192.168.147.15:3300",
        "interval": "10s",
        "timeout": "1s"
      }
    ]
  }
}

ntpdate.json

{
  "service": {
    "name": "ntpdate",
    "checks": [
      {
        "args": ["/usr/local/consul/script/check_udp.sh"],
        "interval": "60s",
        "timeout": "10s"
      }
    ]
  }
}

Script checks (external check scripts are supported)

check_udp.sh

#!/bin/sh
nc -z -u 127.0.0.1 123
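Consul reads a script check's exit code: 0 means passing, 1 means warning, and any other code means critical. nc typically exits with 1 when the probe fails, so the script above reports a warning rather than critical. Below is a sketch of a wrapper that maps failure to critical. probe_to_check_status is a hypothetical name. nc -z -u only sends a UDP datagram and cannot confirm that ntpd actually answers, so a protocol-level probe (for example through ntpq or chronyc) may test NTP more reliably.

```bash
#!/usr/bin/env bash
# Run a probe command and translate its result into Consul script-check codes:
#   probe succeeded -> return 0 (passing)
#   probe failed    -> return 2 (critical; any code other than 0 or 1 is critical)
# The echoed line becomes the check output shown by Consul.
probe_to_check_status() {
  if "$@" >/dev/null 2>&1; then
    echo "OK: $*"
    return 0
  fi
  echo "CRITICAL: $* failed"
  return 2
}
```

check_udp.sh could then end with probe_to_check_status nc -z -u -w 1 127.0.0.1 123; exit $?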

Testing with Docker microservice containers

# Can be started on every node; consul-template and nginx are used later for load balancing
docker run -d --name service2 -P jlordiales/python-micro-service
# Run with the environment variables registrator reads (service name and tags)
docker run -itd \
  -e "SERVICE_NAME=simple" -e "SERVICE_TAGS=http,upyun" \
  -p 8000:5000 jlordiales/python-micro-service

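Querying metadata of registered services

curl 192.168.13.167:8500/v1/catalog/services
curl 192.168.13.167:8500/v1/catalog/service/python-micro-service

-P publishes every exposed container port on a random host port.

Registrator has already synced the container information into Consul.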

Templated configuration with consul-template

consul-template API function syntax

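- datacenters: query all datacenters in the Consul catalog, {{datacenters}}

- file: read and output a file from local disk; an error is raised if it cannot be read, {{file "/path/to/local/file"}}

- key: query the value of a key in Consul; an error is raised if the value cannot be converted to a string-like value. {{key "service/redis/maxconns@east-aws"}}, where east-aws names the datacenter, or {{key "service/redis/maxconns"}}

- key_or_default: query the value of a key, falling back to the given default if the key does not exist, {{key_or_default "service/redis/maxconns@east-aws" "5"}}

- ls: query the top-level key/value pairs under a given prefix, {{range ls "service/redis@east-aws"}} {{.Key}} {{.Value}}{{end}}

- node: query a single node from the catalog; without an argument it returns the current agent's node, {{node "node1"}}

- nodes: query all nodes in the catalog, optionally for a given datacenter, {{nodes "@east-aws"}}

- service: query the instances of a service, {{service "release.web@east-aws"}} (only instances tagged release) or {{service "web"}}. Each result is a HealthService, e.g. {{range service "web@datacenter"}} server {{.Name}} {{.Address}}:{{.Port}}{{end}}. By default only healthy instances are returned; to return all of them use {{service "web" "any"}} (newer consul-template versions write the filter as {{service "web|any"}})

- services: query all services in the catalog, {{services}}, optionally for a datacenter: {{services "@east-aws"}}

- tree: query all key/value pairs under a given prefix, {{range tree "service/redis@east-aws"}} {{.Key}} {{.Value}}{{end}}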

# Edit config.json

# Set JSON key-value pair as a Value.

"location": {
  "proxy_set_header": "{\"X-Real-IP\": \"$remote_addr\", \"X-Forwarded-For\": \"$proxy_add_x_forwarded_for\", \"X-Forwarded-Proto\": \"$scheme\", \"Host\": \"$host\"}"
}

# Edit consul template file

{{ range $key, $pairs := tree "location" | byKey }}
{{ range $pair := $pairs }}
{{ range $key, $value := printf "location/%s" $pair.Key | key | parseJSON }}
{{ $key }}{{ $value }}
{{ end }}
{{ end }}
{{ end }}

nginx main configuration (nginx.conf)

user nobody;
worker_processes auto;
#error_log logs/error.log info;

events {
    worker_connections 10240;
}

http {
    include mime.types;
    default_type application/octet-stream;
    sendfile on;
    #tcp_nopush on;
    lingering_close off;
    keepalive_timeout 30;
    send_timeout 60;
    proxy_read_timeout 100;
    proxy_send_timeout 60;
    proxy_connect_timeout 10;
    proxy_next_upstream_timeout 50;
    proxy_buffer_size 8k;
    proxy_buffers 4 8k;
    proxy_busy_buffers_size 16k;
    proxy_max_temp_file_size 0;
    proxy_temp_path /dev/shm/proxy_temp_nginx 1 2;
    client_body_temp_path /dev/shm/client_body_temp_nginx 1 2;
    client_header_buffer_size 4k;
    large_client_header_buffers 8 16k;
    client_max_body_size 1024m;
    port_in_redirect off;
    open_log_file_cache max=2048 inactive=60s min_uses=2 valid=15m;
    #gzip on;

    server {
        listen 81 default_server;
        server_name _;

        location / {
            root html;
            index index.html index.htm;
        }

        location /stats {
            stub_status on;
            allow 192.168.0.0/16;
            deny all;
            auth_basic "upyun proxy status";
            access_log off;
            auth_basic_user_file .htpasswd;
        }
    }

    ##################################################################
    include conf.d/*.conf;
}

##################################################################
stream {
    include conf.stream.d/*.conf;
}

nginx.http.ctmpl

{{ range services }}
{{ range service .Name }}
{{ if in .Tags "upyun" }}
{{ if .Tags | contains "http" }}
upstream {{.Name}} {
    least_conn;
    server {{.Address}} max_fails=3 fail_timeout=60 weight=1;
}
server {
    listen {{.Port}};
    server_name {{.ID}};
    error_log /opt/nginx/logs/{{.ID}}.error.log;
    charset utf-8;
    location / {
        proxy_pass http://{{.Name}};
        proxy_set_header Host $host;
        proxy_set_header X-Real-IP $remote_addr;
        proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
    }
}
{{end}}
{{end}}
{{end}}
{{end}}
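This template emits an upstream block inside range service .Name, which means once per instance. If two instances are registered under the same name, the rendered file contains two upstream blocks with that name, and nginx rejects the configuration with a "duplicate upstream" error. Below is a sketch of a guard to run before reloading. The function names are hypothetical, and the default nginx path /opt/nginx/sbin/nginx comes from the run script later in this article.

```bash
#!/usr/bin/env bash
# duplicate_upstreams FILE
# Print upstream names that are defined more than once in a rendered nginx config.
duplicate_upstreams() {
  awk '$1 == "upstream" { print $2 }' "$1" | sort | uniq -d
}

# reload_if_clean FILE [NGINX_BIN]
# Reload nginx only if FILE has no duplicate upstreams and `nginx -t` passes.
reload_if_clean() {
  local conf=$1 nginx=${2:-/opt/nginx/sbin/nginx} dups
  dups=$(duplicate_upstreams "$conf")
  if [ -n "$dups" ]; then
    echo "duplicate upstream(s): $dups" >&2
    return 1
  fi
  "$nginx" -t && "$nginx" -s reload
}
```

A wrapper script that calls reload_if_clean /opt/nginx/conf/conf.d/http.conf could then replace the plain nginx -s reload as the template's command.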

marathon.kv.ctmpl

{{range tree "/marathon/"}}
{{if .Value|regexMatch "slave.*TASK_RUNNING"}}
{{.Value | plugin "/usr/local/bin/parse_json.sh" }}
{{end}}
{{end}}
{{ plugin "/usr/local/bin/marathon.sh" }}

parse_json.sh

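#!/bin/bash
# Turn one Marathon task record into "appId@server host:port ...;" and append it
# to a temporary file that marathon.sh reads at the end of the render.
# Uses bash-only ${var//pattern/} substitution, hence /bin/bash.
parse_json(){
    echo "${1//\"/}" | sed -r -n "s^.*appId:/(.*),host:(.*),ports:\[(.*)\].*^\1@server \2:\3 max_fails=3 fail_timeout=60 weight=1;^p"
}

parse_json "$1" >> /tmp/.marathon_upstream

exit 0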

marathon.sh

#!/bin/bash
# Build upstream and server blocks from the records collected by parse_json.sh,
# print them (consul-template inserts the output into the template), then clean up.
# Uses bash-only += and echo -e, hence /bin/bash.
STRING2=

awk -F@ '{name[$1]=name[$1]"\n"$2} END{for(i in name) printf "upstream %s {\nleast_conn;%s\n}\n",i,name[i]}' /tmp/.marathon_upstream

for name in `awk -F@ '{print $1}' /tmp/.marathon_upstream|sort -u`; do
    STRING2+="server {\n\tlisten 88;\n\tserver_name $name;\n\terror_log /opt/nginx/logs/$name.error.log;\n\tcharset utf-8;\n\tlocation / {\n\t\tproxy_pass http://$name;\n\t\tproxy_set_header Host \$host;\n\t\tproxy_set_header X-Real-IP \$remote_addr;\n\t\tproxy_set_header X-Forwarded-For \$proxy_add_x_forwarded_for;\n\t}\n}\n"
done

echo -e "$STRING2"

rm -f /tmp/.marathon_upstream

exit 0

nginx.stream.ctmpl

{{ range services }}
{{ range service .Name }}
{{ if in .Tags "upyun" }}
{{ if in .Tags "tcp" }}
upstream {{.Name}} {
    least_conn;
    {{ .Address | plugin "/usr/local/bin/concatip.sh" }}
}
server {
    listen {{.Port}};
    proxy_pass {{.Name}};
    proxy_timeout 3s;
    proxy_connect_timeout 1s;
}
{{end}}
{{ if in .Tags "udp" }}
upstream {{.Name}} {
    least_conn;
    {{ .Address | plugin "/usr/local/bin/concatip.sh" }}
}
server {
    listen {{.Port}} udp;
    proxy_pass {{.Name}};
    proxy_timeout 3s;
    proxy_connect_timeout 1s;
}
{{end}}
{{end}}
{{end}}
{{end}}

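Plugin program (/usr/local/bin/concatip.sh)

#!/bin/sh
# Split an address list separated by ; @ or # into one upstream server line per address
for ip in `echo $1|sed -r 's^[;@#]^ ^g'`; do
    echo "server $ip max_fails=3 fail_timeout=60 weight=1;"
done
exit 0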

Running consul-template

#!/bin/sh
CONSUL="192.168.13.250:8500"
TEMPLATE_HTTP="/opt/nginx.http.ctmpl:/opt/nginx/conf/conf.d/http.conf:/opt/nginx/sbin/nginx -s reload"
TEMPLATE_MARATHON="/opt/marathon.kv.ctmpl:/opt/nginx/conf/conf.d/marathon.conf:/opt/nginx/sbin/nginx -s reload"
TEMPLATE_STREAM="/opt/nginx.stream.ctmpl:/opt/nginx/conf/conf.stream.d/stream.conf:/opt/nginx/sbin/nginx -s reload"
###docker run -p 88:80 -d --name nginx --volume /tmp/service.ctmpl:/templates/service.ctmpl --link consul:consul jlordiales/nginx-consul

# Start nginx if it is not running
if [ -z "`pidof /opt/nginx/sbin/nginx`" ]; then
    /opt/nginx/sbin/nginx -c /opt/nginx/conf/nginx.conf
fi

# Start consul-template if it is not running
if [ -z "`pidof /usr/local/bin/consul-template`" ]; then
    /usr/local/bin/consul-template \
        -consul-addr $CONSUL \
        -template "$TEMPLATE_HTTP" \
        -template "$TEMPLATE_MARATHON" \
        -template "$TEMPLATE_STREAM"
fi

Template syntax test (-dry prints the rendered result to stdout instead of writing the file):

consul-template -consul-addr 192.168.13.169:8500 \
  -template /root/test.ctmpl:/tmp/consul.result -dry -once

Running these services under supervisord is recommended.

An nginx container with consul-template built in can also be run for testing:

docker run -p 8080:80 -d --name nginx \
  --volume /root/test.ctmpl:/templates/service.ctmpl \
  --link consul:consul jlordiales/nginx-consul

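Consul + Nginx reverse proxy

Consul provides service registration and discovery, and Nginx or HAProxy acts as the reverse proxy. Consul Template or the Nginx Lua module updates the proxy configuration dynamically, and the proxy handles connection pooling and load balancing.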

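Consul + HAProxy for connection pool management

A connection pool is more than a performance optimization. It is also a key component for stability and resilience. Its main benefits are:

- Fast: reusing connections greatly reduces latency.

- Stable: resource limits protect both the application itself and downstream services from overload.

- Resilient: health checks and automatic reconnection recover from network or service interruptions without manual intervention.

- Simple: hiding low-level details simplifies application development.

In microservice and cloud-native architectures, almost every middleware client (databases, Redis, message queues, HTTP clients) ships with connection pooling, usually enabled by default and strongly recommended. Configuring and using connection pools correctly is a basic requirement for highly available internet services.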

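Managing Consul services manually

Querying a service with curl

curl -X GET http://consul.service.upyun:8500/v1/catalog/service/redis-new | jq .

The full response is shown below. Filtering with jq '.[] | .Address' instead prints only the node address of each instance.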

[
  {
    "ModifyIndex": 1598391598,
    "CreateIndex": 1598391598,
    "ServiceEnableTagOverride": false,
    "ServicePort": 0,
    "ServiceAddress": "",
    "ServiceTags": [],
    "ID": "2744a688-cfe0-e968-4ffc-75afb7731838",
    "Node": "192.168.13.250",
    "Address": "192.168.13.250",
    "Datacenter": "upyun-consul",
    "TaggedAddresses": {
      "wan": "192.168.13.250",
      "lan": "192.168.13.250"
    },
    "NodeMeta": {
      "consul-network-segment": ""
    },
    "ServiceID": "redis-new",
    "ServiceName": "redis-new"
  }
]
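In this response ServiceAddress is empty and ServicePort is 0, so the service carries no address or port of its own. When ServiceAddress is empty, the Consul API expects clients to fall back to the node's Address. The sketch below prints address:port for each instance with that fallback. It assumes jq is installed, as in the command above, and the function name catalog_endpoints is hypothetical.

```bash
#!/usr/bin/env bash
# catalog_endpoints: read a /v1/catalog/service/<name> response on stdin and
# print one "address:port" line per instance, using the node Address when
# ServiceAddress is empty or missing.
catalog_endpoints() {
  jq -r '.[] | "\(if (.ServiceAddress // "") == "" then .Address else .ServiceAddress end):\(.ServicePort)"'
}
```

For example: curl -s http://consul.service.upyun:8500/v1/catalog/service/redis-new | catalog_endpoints. The catalog endpoint does not filter by health. /v1/health/service/redis-new?passing returns only healthy instances, but its response has a different shape (Node, Service and Checks objects), so this filter would need adapting.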

Registering services with curl

Registering through the catalog

curl -v -X PUT http://$(ss -ntpl|grep :8500|awk '{print $4}')/v1/catalog/register \
  -d '{
    "Node": "192.168.13.248",
    "Address": "192.168.13.248",
    "Service": {
      "ID": "redis-master",
      "Service": "redis-master",
      "Port": 1028,
      "Address": "192.168.13.248",
      "Tags": ["redis", "marco", "upyun"]
    },
    "Check": {
      "CheckID": "service:redis-master",
      "Name": "Redis health check",
      "Status": "passing",
      "ServiceID": "redis-master",
      "Definition": {
        "TCP": "192.168.13.248:1028",
        "Interval": "10s",
        "Timeout": "5s",
        "DeregisterCriticalServiceAfter": "30s"
      }
    },
    "SkipNodeUpdate": false
  }'
# Query the service
curl -X GET http://consul.service.upyun:8500/v1/catalog/service/redis-master | jq .
# Deregister: a body with only Node removes the node along with all of its services and checks
curl -X PUT -d '{"Node":"192.168.13.248"}' http://consul.service.upyun:8500/v1/catalog/deregister
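To remove a single service rather than the whole node, the deregister body also needs ServiceID. Below is a small helper that builds either body. The function name is hypothetical. Checks registered through /v1/catalog/register are generally not run by any Consul agent. Their Status stays as submitted unless an external tool such as Consul ESM updates it.

```bash
#!/usr/bin/env bash
# catalog_deregister_body NODE [SERVICE_ID]
# Without SERVICE_ID the body removes the whole node from the catalog;
# with it, only that service is removed.
catalog_deregister_body() {
  if [ -n "${2:-}" ]; then
    printf '{"Node":"%s","ServiceID":"%s"}\n' "$1" "$2"
  else
    printf '{"Node":"%s"}\n' "$1"
  fi
}
```

For example: curl -X PUT -d "$(catalog_deregister_body 192.168.13.248 redis-master)" http://consul.service.upyun:8500/v1/catalog/deregister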

Registering through the agent

curl -X PUT $(ss -ntpl|grep :8500|awk '{print $4}')/v1/agent/service/register \
  -d '{
    "ID": "repo.upyun.com",
    "Name": "repo-upyun-com",
    "Port": 5043,
    "Address": "192.168.13.250:5043",
    "Tags": ["http", "upyun"]
  }'

curl -X PUT 192.168.13.167:8500/v1/agent/service/register \
  -d '{
    "ID": "tcp-acs.upyun.com",
    "Name": "tcp-acs-upyun-com",
    "Port": 1234,
    "Address": "192.168.1.42:1234",
    "Tags": ["tcp", "upyun"]
  }'

curl -X PUT 192.168.13.167:8500/v1/agent/service/register \
  -d '{
    "ID": "udp-acs.upyun.com",
    "Name": "udp-acs-upyun-com",
    "Port": 1234,
    "Address": "192.168.1.42:1234",
    "Tags": ["udp", "upyun"]
  }'

#curl -v -X PUT http://192.168.13.167:8500/v1/agent/service/deregister/test1
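A service registered through the agent can also carry a check that the agent itself runs, which these examples omit. The sketch below builds such a body with a TCP check. The function name and the 10s/1s values are assumptions. Values are not JSON-escaped, so names containing quotes or backslashes are not handled. The nginx templates in this article expect host:port in Address, so an Address that already contains a colon is used as the check target unchanged.

```bash
#!/usr/bin/env bash
# build_service_payload ID NAME ADDRESS PORT [TAG...]
# Print a JSON body for PUT /v1/agent/service/register with a TCP check.
# If ADDRESS already contains ":" (host:port, as in the examples above),
# it is used as the check target unchanged; otherwise ADDRESS:PORT is used.
build_service_payload() {
  local id=$1 name=$2 addr=$3 port=$4 tags="" t target
  shift 4
  # Join the remaining arguments into the body of a JSON string array: "a","b"
  for t in "$@"; do
    tags="${tags:+$tags,}\"$t\""
  done
  case $addr in
    *:*) target=$addr ;;
    *)   target=$addr:$port ;;
  esac
  printf '{"ID":"%s","Name":"%s","Address":"%s","Port":%d,"Tags":[%s],"Check":{"TCP":"%s","Interval":"10s","Timeout":"1s"}}\n' \
    "$id" "$name" "$addr" "$port" "$tags" "$target"
}
```

For example: curl -X PUT -d "$(build_service_payload tcp-acs.upyun.com tcp-acs-upyun-com 192.168.1.42:1234 1234 tcp upyun)" http://192.168.13.167:8500/v1/agent/service/register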

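Deregistering a service with curl

curl -v -X PUT http://192.168.13.167:8500/v1/agent/service/deregister/repo.upyun.com

The last path segment is the service ID (repo.upyun.com here). The request must go to the agent the service was registered with.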

Reloading the agent configuration with curl

curl -X PUT http://$(ss -ntpl|grep :8500|awk '{print $4}')/v1/agent/reload

Forcing a node out of the cluster with curl

curl -X PUT http://$(ss -ntpl|grep :8500|awk '{print $4}')/v1/agent/force-leave/192.168.12.14

Removing a stale service with curl

curl -X PUT http://$(ss -ntpl|grep :8500|awk '{print $4}')/v1/agent/service/deregister/nsq

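Troubleshooting

New nodes cannot join the Consul cluster

The info and err logs on the leader show:

memberlist: Failed push/pull merge: Node '192.168.14.72' protocol version (0) is incompatible

This puts the cluster into a protective state in which it no longer admits new agents or servers.

Fix: find the machine that cannot join, delete the stale data in Consul's data directory, and join it again.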
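The data directory can be moved aside instead of deleted outright, so it stays available if it is needed later. This sketch assumes the agent has already been stopped (for example with supervisorctl stop consul or systemctl stop consul). It uses the data_dir from the configs above, /disk/ssd1/consul/data or /opt/consul. If the agent runs as an unprivileged user, for example the consul user that packaged installs typically use, the recreated directory needs to be chowned to that user. The function name is hypothetical.

```bash
#!/usr/bin/env bash
# move_consul_data DATA_DIR
# Move DATA_DIR to DATA_DIR.bak.<timestamp>, recreate it empty, and print the backup path.
# Stop the Consul agent before calling this.
move_consul_data() {
  local dir=${1%/} backup   # strip a trailing slash so the backup is a sibling, not a child
  if [ -z "$dir" ] || [ ! -d "$dir" ]; then
    echo "usage: move_consul_data DATA_DIR (existing directory)" >&2
    return 1
  fi
  backup="$dir.bak.$(date +%Y%m%d%H%M%S)"
  mv "$dir" "$backup" && mkdir -p "$dir" && echo "$backup"
}
```

For example: supervisorctl stop consul, then move_consul_data /disk/ssd1/consul/data, then supervisorctl start consul, after which the node rejoins through its join settings.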