
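Kubernetes Hands-On Handbook

References

The trend in cloud-native technology is toward using Kubernetes as a common abstraction layer to achieve highly consistent application delivery across clouds and environments. However, although Kubernetes does an excellent job of hiding the details of the underlying infrastructure, it does not introduce a higher-level abstraction on top of hybrid and distributed deployment environments to model software delivery. We have seen that a delivery process lacking such a unified abstraction not only reduces productivity and hurts the user experience, but can even cause errors and failures in production.

Today, more and more application teams want a platform that simplifies application delivery to hybrid environments (multi-cluster, multi-cloud, hybrid cloud, distributed cloud) while staying flexible enough to absorb the iteration pressure of fast-changing business requirements. Yet however much platform teams would like to help, building an application delivery system that targets hybrid environments and is also highly extensible is a daunting task.

KubeVela makes application delivery to hybrid and multi-cloud environments simple and efficient through the following design: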

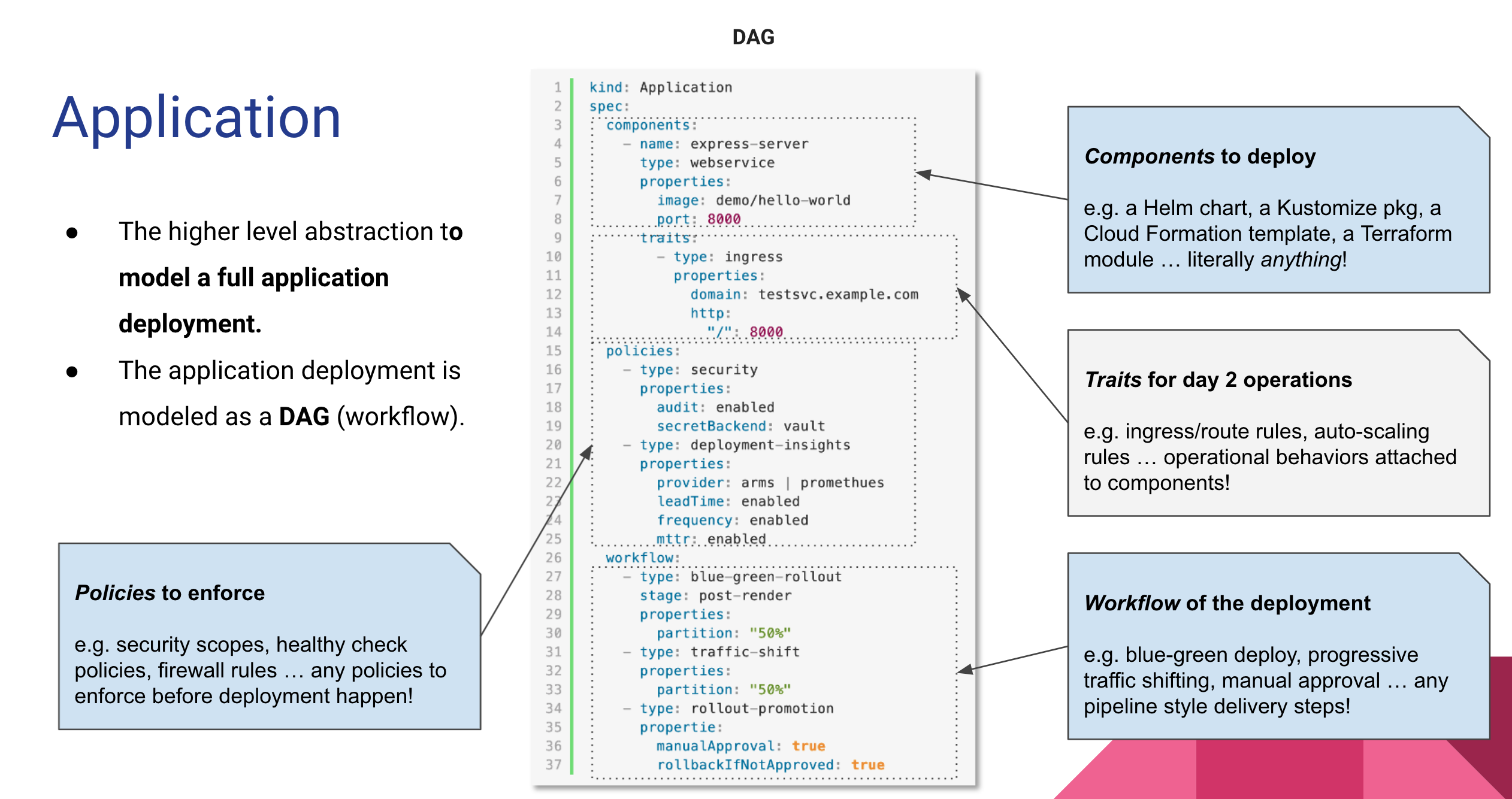

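- Fully application-centric: KubeVela introduces the Open Application Model (OAM) as the top-level abstraction for application delivery and uses a declarative delivery workflow to capture the whole process of delivering microservice applications to hybrid environments. Even operational traits such as multi-cluster distribution policies, traffic shifting, and rolling updates are declared at the application level. Users do not need to care about infrastructure details and can focus on defining and deploying applications.

- Programmable delivery workflow: KubeVela's delivery model is implemented with CUE, a data configuration language that grew out of Google's Borg system. CUE glues every delivery step, the required resources, and the associated operational actions into a programmable DAG (directed acyclic graph) that serves as the final declarative delivery plan. Compared with the complexity and limited extensibility of other systems, the CUE-based implementation is simple to use, highly extensible, and a better fit for modern GitOps-style application delivery.

- Infrastructure-agnostic: KubeVela is an application delivery and management control plane that is completely independent of the runtime infrastructure. Following the workflows and policies you define, it can deliver and manage any application component in any environment, for example containers, cloud functions, databases, and even networks and virtual machine instances.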

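Video tutorials

Optimizing the underlying operating system

- Trimming (base environment, essential components)

- Tuning (system, network, and kernel tuning)

- Imaging (USB tips, dumping and flashing images)

Data center network architecture

Choosing the core software

- IPtables + fwmark (master the firewall)

- Redis + Stunnel + Ignite (master in-memory KV stores: replication, clustering, security)

- Bash/Sed/AWK (master the three essential shell programming tools)

- Nginx/Luajit (master nginx + lua + tproxy) + Haproxy/TProxy (transparent proxying)

- ATS (haproxy/nginx-cache/squid/varnish/ats) (master caching proxy systems)

- Ansible (master automated deployment)

- ELK or ES (master large-scale log analytics)

- HDFS + MySQL + InfiniDB + Kafka + Redis + Presto + Calcite + AirPal (real-time data warehouse analytics)

- Prometheus + Zabbix + Grafana (master operations monitoring metrics and alerting)

Domain name preparation

Intelligent DNS resolution

CoreDNS performance tuning

CoreDNS is a newer authoritative DNS server.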

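| Software | Role | Pros | Cons |
|---|---|---|---|
| bind/powerdns | Authoritative DNS: NS, A, CNAME, PTR, TXT | Reliable and stable, feature-rich | Old codebase, tedious configuration, modest performance |
| dnsmasq | Caching DNS: A, CNAME, TXT | Simple configuration, reliable and stable | No rewrite support, modest performance |
| unbound | Caching DNS: A | Simple configuration, high performance, supports TCP queries | Reliability not yet tested, no CNAME support |
| coredns | Authoritative DNS: NS, A, CNAME, PTR, TXT | Very simple configuration, reliable and stable, high performance, no dependencies (Go), plugin architecture, etcd as a record store | None so far |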

coredns.conf

    .:253 {
        errors
        log stdout
        health localhost:8080
        cache {
            success 30000 300
            denial 1024 5
            prefetch 1 1m
        }
        loadbalance round_robin
        # Added line: the template plugin intercepts AAAA queries and returns an empty answer (NODATA)
        template IN AAAA .
        rewrite continue {
            ttl regex (.*) 180
        }
        rewrite stop name regex ^robin.x.upyun.com gw1.service.upyun answer name gw1.service.upyun robin.x.upyun.com
        rewrite stop {
            name regex ^(kuzan|vivi|slardar|rds).upyun.local {1}.service.upyun
            answer name ^(kuzan|vivi|slardar|rds).service.upyun {1}.upyun.local
        }
        file /opt/coredns/upyun.local.zone upyun.local
        proxy cdnlog-cn-hangzhou.aliyuncs.com 211.140.13.188 211.140.188.188
        proxy service.upyun 192.168.13.250:8600 192.168.13.230:8600 {
            policy sequential
            fail_timeout 1s
            max_fails 3
            spray
            protocol dns
        }
        forward . 119.29.29.29 180.76.76.76 114.114.114.114 {
            policy round_robin
            health_check 5s
            retry_failed 3
            except upyun.local service.upyun
        }
    }

upyun.local.txt

    $TTL 10
    @   IN SOA ns1.upyun.local. root.upyun.local. (
            2       ; serial
            300     ; refresh
            1800    ; retry
            10      ; expire
            300     ; minimum
    )
    ; used by haiyang.shao for apisix
    updc6       IN CNAME api
    ; used by shaohy for apisix gw1
    api     10  IN A 192.168.5.91
    api     10  IN A 192.168.5.92
    api     10  IN A 192.168.5.93
    api     10  IN A 192.168.5.94

DNS UDP load balancing

    upstream dns_load {
        server 192.168.13.229:253 weight=10 max_fails=5 fail_timeout=1s;
        server 192.168.13.230:253 weight=10 max_fails=5 fail_timeout=1s;
        server 192.168.13.231:253 weight=10 max_fails=5 fail_timeout=1s;
        server 192.168.144.1:253 weight=10 max_fails=5 fail_timeout=1s backup;
        server 192.168.144.2:253 weight=10 max_fails=5 fail_timeout=1s backup;
        server 192.168.144.3:253 weight=10 max_fails=5 fail_timeout=1s backup;
    }
    server {
        listen 192.168.21.20:53 udp;
        proxy_pass dns_load;
        proxy_timeout 30s;
        proxy_connect_timeout 30s;
        access_log logs/dns_access.log main;
    }

Kernel parameters (sysctl):

    net.ipv4.tcp_fin_timeout=5
    net.netfilter.nf_conntrack_max=3000000

Domain registration

★ ICP filing expiry reminders

NTP time server

    upstream ntpd_load {
        server ntp.aliyun.com:123 weight=5 max_fails=2 fail_timeout=5s;
        server ntp.ntsc.ac.cn:123 weight=5 max_fails=2 fail_timeout=5s;
        server asia.pool.ntp.org:123 weight=5 max_fails=2 fail_timeout=5s;
        server ntp1.aliyun.com:123 weight=5 max_fails=2 fail_timeout=5s backup;
    }
    server {
        listen 192.168.21.20:123 udp;
        proxy_pass ntpd_load;
        proxy_timeout 30s;
        proxy_connect_timeout 30s;
    }

Deploying and using APISIX

    sudo yum install -y https://repos.apiseven.com/packages/centos/apache-apisix-repo-1.0-1.noarch.rpm
    yum install -y apisix apisix-dashboard
    apisix init_etcd
    apisix init
    systemctl start apisix
    systemctl enable apisix
    systemctl start apisix-dashboard
    systemctl enable apisix-dashboard

Configuring the stream and HTTP proxies

    apisix:
      enable_admin: true
      stream_proxy:               # TCP/UDP proxy
        udp:                      # UDP proxy address list
          - 192.168.12.19:53
          - 192.168.12.19:123
          - 192.168.12.19:8600
    discovery:
      consul:
        servers:
          - "http://192.168.13.229:8500"
          - "http://192.168.13.230:8500"
          - "http://192.168.13.231:8500"
    deployment:
      role: traditional
      role_traditional:
        config_provider: etcd
      etcd:
        use_grpc: true
        timeout: 3600
        host:
          - "http://127.0.0.1:2379"
        prefix: "/apisix"

Registering TCP/UDP proxies

    curl http://127.0.0.1:9180/apisix/admin/stream_routes/2 \
      -H 'X-API-KEY: edd1c9f034335f136f87ad84b625c8f1' -X PUT -d '
    {
      "server_addr": "192.168.12.19",
      "server_port": 123,
      "upstream": {
        "nodes": {
          "ntp.aliyun.com:123": 1,
          "ntp.ntsc.ac.cn:123": 1,
          "asia.pool.ntp.org:123": 1
        },
        "type": "roundrobin"
      }
    }'

    curl http://127.0.0.1:9180/apisix/admin/stream_routes/3 \
      -H 'X-API-KEY: edd1c9f034335f136f87ad84b625c8f1' -X PUT -d '
    {
      "server_addr": "192.168.12.19",
      "server_port": 8600,
      "upstream": {
        "nodes": {
          "192.168.13.229:8600": 1,
          "192.168.13.230:8600": 1,
          "192.168.13.231:8600": 1,
          "192.168.13.232:8600": 1
        },
        "type": "roundrobin"
      }
    }'

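TLS certificate encryption

CDN acceleration

Operating system tuning and upgrades

High-availability databases and distributed systems

Automated K8S deployment with Ansible

Technical advantages of K8S

- Designed as a pluggable system: it defines the CRI, CNI, and CSI standards without prescribing a particular implementation, which keeps it modular and composable;

- Containers on different nodes can reach each other by default, and containers are wrapped in the pod abstraction even when a pod holds a single container;

- Declarative configuration files drive automatic deployment, restart, replication, and scaling;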

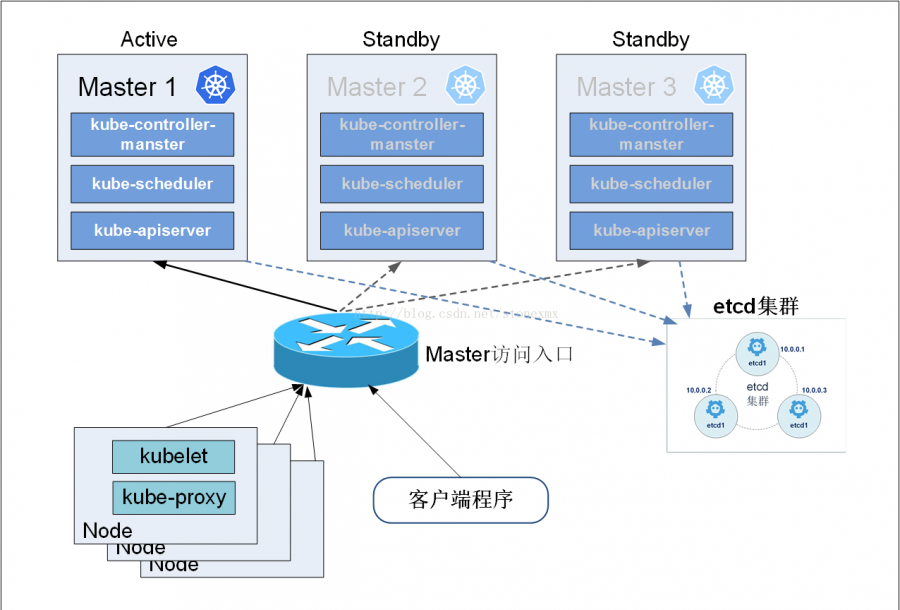

leader-elect=true自选举,竞争上岗

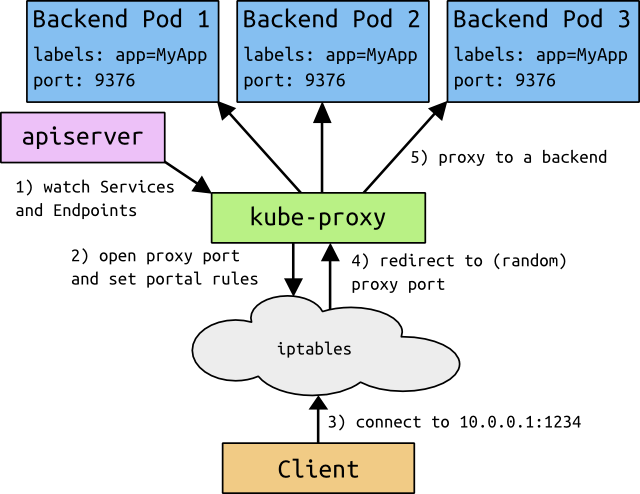

ServiceIP (iptables)

IPVS + IPSET

高可用Kubernets控制平面

- etcd + consul + master 共用3~5台

- kube-router与交换机用 ospf 或者 lvs

- kube-nodes就弹性扩展就好了

System installation, tuning, and security

sysctl.conf tuning

    #hash: v0.1.1-30b3ffd
    kernel.core_uses_pid=1
    kernel.pid_max=4194303
    kernel.ctrl-alt-del=1
    kernel.msgmnb=65536
    kernel.msgmax=65536
    kernel.shmmni=4096
    kernel.shmmax=8589934592
    kernel.shmall=8589934592
    kernel.sem=250 32000 100 128
    # Increase number of incoming connections.max=65535
    net.core.somaxconn=8192
    net.core.rmem_default=131072
    net.core.wmem_default=131072
    net.core.rmem_max=33554432
    net.core.wmem_max=33554432
    net.core.dev_weight=512
    net.core.optmem_max=262144
    net.core.netdev_budget=1024
    net.core.netdev_max_backlog=262144
    net.ipv4.neigh.default.gc_thresh1=10240
    net.ipv4.neigh.default.gc_thresh2=40960
    net.ipv4.neigh.default.gc_thresh3=81920
    # for lvs tunnel mode
    net.ipv4.conf.all.proxy_arp=0
    # http://blog.clanzx.net/2013/10/30/arp-filter.html
    net.ipv4.conf.all.arp_announce=2
    net.ipv4.conf.default.arp_announce=2
    net.ipv4.conf.all.arp_ignore=1
    net.ipv4.conf.default.arp_ignore=1
    net.ipv4.conf.all.arp_filter=1
    net.ipv4.conf.default.arp_filter=1
    net.ipv4.conf.default.rp_filter=0
    net.ipv4.conf.all.rp_filter=0
    # https://mellowd.co.uk/ccie/?tag=pmtud
    # Warning,if MTU=9000 set 1, else 0 is good
    net.ipv4.tcp_mtu_probing=0
    net.ipv4.ip_no_pmtu_disc=0
    net.ipv4.tcp_slow_start_after_idle=0
    # Do not cache metrics on closing connections
    net.ipv4.tcp_no_metrics_save=1
    # Protect Against TCP Time-Wait
    net.ipv4.tcp_rfc1337=1
    net.ipv4.conf.default.accept_source_route=0
    net.ipv4.conf.default.accept_redirects=0
    net.ipv4.conf.default.secure_redirects=0
    net.ipv4.conf.all.accept_source_route=0
    net.ipv4.conf.all.accept_redirects=0
    net.ipv4.conf.all.secure_redirects=0
    net.ipv4.ip_forward=1
    net.ipv4.ip_local_port_range=9000 65535
    net.ipv4.icmp_echo_ignore_broadcasts=1
    net.ipv4.icmp_ignore_bogus_error_responses=1
    net.ipv4.tcp_timestamps=1
    net.ipv4.tcp_sack=1
    net.ipv4.tcp_dsack=1
    net.ipv4.tcp_window_scaling=1
    net.ipv4.tcp_rmem=4096 102400 16777216
    net.ipv4.tcp_wmem=4096 102400 16777216
    #net.ipv4.tcp_mem=3145728 4194304 6291456
    net.ipv4.tcp_mem=786432 1048576 1572864
    net.ipv4.tcp_syncookies=1
    net.ipv4.tcp_syn_retries=3
    net.ipv4.tcp_synack_retries=5
    net.ipv4.tcp_retries1=3
    net.ipv4.tcp_retries2=15
    net.ipv4.tcp_fin_timeout=30
    net.ipv4.tcp_max_syn_backlog=262144
    net.ipv4.tcp_max_orphans=262144
    net.ipv4.tcp_frto=2
    net.ipv4.tcp_thin_dupack=0
    net.ipv4.tcp_reordering=3
    net.ipv4.tcp_early_retrans=3
    net.ipv4.tcp_tw_recycle=0
    net.ipv4.tcp_tw_reuse=1
    net.ipv4.tcp_keepalive_time=30
    net.ipv4.tcp_keepalive_intvl=10
    net.ipv4.tcp_keepalive_probes=3
    net.ipv4.tcp_max_tw_buckets=600000
    net.ipv4.tcp_congestion_control=bbr
    net.ipv4.netfilter.ip_conntrack_max=600000
    net.ipv4.netfilter.ip_conntrack_tcp_timeout_close_wait=1
    net.ipv4.netfilter.ip_conntrack_tcp_timeout_fin_wait=1
    net.ipv4.netfilter.ip_conntrack_tcp_timeout_time_wait=1
    net.ipv4.netfilter.ip_conntrack_tcp_timeout_established=15
    net.netfilter.nf_conntrack_max=600000
    net.netfilter.nf_conntrack_tcp_timeout_close_wait=1
    net.netfilter.nf_conntrack_tcp_timeout_fin_wait=1
    net.netfilter.nf_conntrack_tcp_timeout_time_wait=1
    net.netfilter.nf_conntrack_tcp_timeout_established=15
    vm.swappiness=0
    vm.dirty_writeback_centisecs=9000
    vm.dirty_expire_centisecs=18000
    vm.dirty_background_ratio=5
    vm.dirty_ratio=10
    vm.overcommit_memory=1
    vm.overcommit_ratio=50
    vm.max_map_count=200000
    fs.file-max=524288
    fs.aio-max-nr=1048576

netfilter/iptables tuning

Disabling the conntrack modules

    blacklist iptable_nat
    blacklist nf_nat
    blacklist nf_conntrack
    blacklist nf_conntrack_ipv4
    blacklist nf_defrag_ipv4

Disabling connection tracking with iptables

    iptables -t raw -A PREROUTING -p ALL -j NOTRACK
    iptables -t raw -A OUTPUT -p ALL -j NOTRACK

Stopping the default firewalld, starting iptables, and flushing the default rules

    systemctl stop firewalld
    systemctl disable firewalld
    systemctl start iptables
    systemctl enable iptables
    iptables -F
    service iptables save
    # Alternative when firewalld stays enabled: open the control-plane ports
    [root@k8s-master ~]# firewall-cmd --permanent --add-port=6443/tcp
    [root@k8s-master ~]# firewall-cmd --permanent --add-port=2379-2380/tcp
    [root@k8s-master ~]# firewall-cmd --permanent --add-port=10250/tcp
    [root@k8s-master ~]# firewall-cmd --permanent --add-port=10251/tcp
    [root@k8s-master ~]# firewall-cmd --permanent --add-port=10252/tcp
    [root@k8s-master ~]# firewall-cmd --permanent --add-port=10255/tcp
    [root@k8s-master ~]# firewall-cmd --reload
    # Let bridged traffic pass through iptables
    [root@k8s-master ~]# modprobe br_netfilter
    [root@k8s-master ~]# echo '1' > /proc/sys/net/bridge/bridge-nf-call-iptables

Distributed storage: Ceph

Distributed storage: MinIO

Container platform preparation: Mesos/K8S

Switching Docker to a registry mirror in China

Edit the Docker configuration file /etc/default/docker as follows:

    DOCKER_OPTS="--registry-mirror=http://aad0405c.m.daocloud.io"

Edit docker.service so that the FORWARD chain accepts traffic

    ExecStartPost=/sbin/iptables -I FORWARD -s 0.0.0.0/0 -j ACCEPT

🏆 A collection of handy Docker images

Deploying an etcd cluster

    # Edit the configuration file
    vim /etc/etcd/etcd.conf

    # Sample configuration
    # Node name
    ETCD_NAME=etcd0
    # Data directory
    ETCD_DATA_DIR="/var/lib/etcd/etcd0"
    # Address to listen on for traffic from other etcd instances
    ETCD_LISTEN_PEER_URLS="http://0.0.0.0:2380"
    # Address to listen on for client traffic
    ETCD_LISTEN_CLIENT_URLS="http://0.0.0.0:2379,http://0.0.0.0:4001"
    # Peer address advertised to the other etcd instances
    ETCD_INITIAL_ADVERTISE_PEER_URLS="http://192.168.1.154:2380"
    # Initial list of cluster members
    ETCD_INITIAL_CLUSTER="etcd0=http://192.168.1.154:2380,etcd1=http://192.168.1.156:2380,etcd2=http://192.168.1.249:2380"
    # Initial cluster state; "new" creates a new cluster
    ETCD_INITIAL_CLUSTER_STATE="new"
    # Initial cluster token
    ETCD_INITIAL_CLUSTER_TOKEN="mritd-etcd-cluster"
    # Client address advertised to clients
    ETCD_ADVERTISE_CLIENT_URLS="http://192.168.1.154:2379,http://192.168.1.154:4001"

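Once the cluster is up, run the following on any node:

    etcdctl member list       # list all cluster members
    etcdctl cluster-health    # check cluster health

If the query fails, you most likely need to specify the certificates:

    etcdctl --ca-file /etc/kubernetes/ssl/ca.pem --endpoints "https://127.0.0.1:2379" cluster-health

etcd TLS encryption

The cause: every IP in ETCD_CLUSTER_NODE_LIST must be listed in the hosts field (the Subject Alternative Name, SAN) of etcd-csr.json when the etcd TLS certificate is generated; otherwise you may get an error like this.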

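etcd backup and restore

The error "No help topic for 'snapshot'" means etcdctl is running with the v2 API; set ETCDCTL_API=3:

    ETCDCTL_API=3 etcdctl --endpoints=https://192.168.13.137:2379 snapshot save etcd_2022_11_18_10.db

The error "Error: context deadline exceeded" typically appears when a TLS endpoint is queried without certificates; pass them explicitly:

    ETCDCTL_API=3 etcdctl --endpoints=https://192.168.13.137:2379 snapshot save etcd_2022_11_18_10.db \
      --cacert /etc/kubernetes/ssl/ca.pem \
      --cert /etc/kubernetes/ssl/etcd.pem \
      --key /etc/kubernetes/ssl/etcd-key.pem

Restore

    ETCDCTL_API=3 etcdctl snapshot restore snapshot.db --name m3 --data-dir=/home/etcd_data

- Every member should be restored from the same snapshot

- After the restore, change the ownership of the restored files to etcd:etcd

- --name: the member name; optional, defaults to default (so the data directory defaults to default.etcd)

- --data-dir: the data directory; it must be specified and must match the data-dir configured for the etcd service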
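The backup command above can be wrapped in a small helper so that every snapshot gets a timestamped name. This is a minimal sketch, not the handbook's own script: the function names, the `ETCD_ENDPOINT`, `ETCD_SSL_DIR`, and `BACKUP_DIR` variables, and the `/var/backups/etcd` default are all assumptions; the endpoint and certificate paths default to the values used in this section.

```shell
#!/bin/sh
# Build a snapshot file name in the style used above,
# e.g. etcd_2022_11_18_10.db (year_month_day_hour).
snapshot_name() {
    date +"etcd_%Y_%m_%d_%H.db"
}

# Save a snapshot over TLS and print the path of the file that was written.
# ETCD_ENDPOINT, ETCD_SSL_DIR and BACKUP_DIR are hypothetical overrides.
etcd_backup() (
    endpoint="${ETCD_ENDPOINT:-https://192.168.13.137:2379}"
    ssl_dir="${ETCD_SSL_DIR:-/etc/kubernetes/ssl}"
    backup_dir="${BACKUP_DIR:-/var/backups/etcd}"
    mkdir -p "$backup_dir" || exit 1
    target="$backup_dir/$(snapshot_name)"
    ETCDCTL_API=3 etcdctl --endpoints="$endpoint" \
        --cacert "$ssl_dir/ca.pem" \
        --cert "$ssl_dir/etcd.pem" \
        --key "$ssl_dir/etcd-key.pem" \
        snapshot save "$target" || exit 1
    echo "$target"
)
```

Calling `etcd_backup` from a cron job would produce one snapshot per hour at most, since the file name only has hour resolution.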

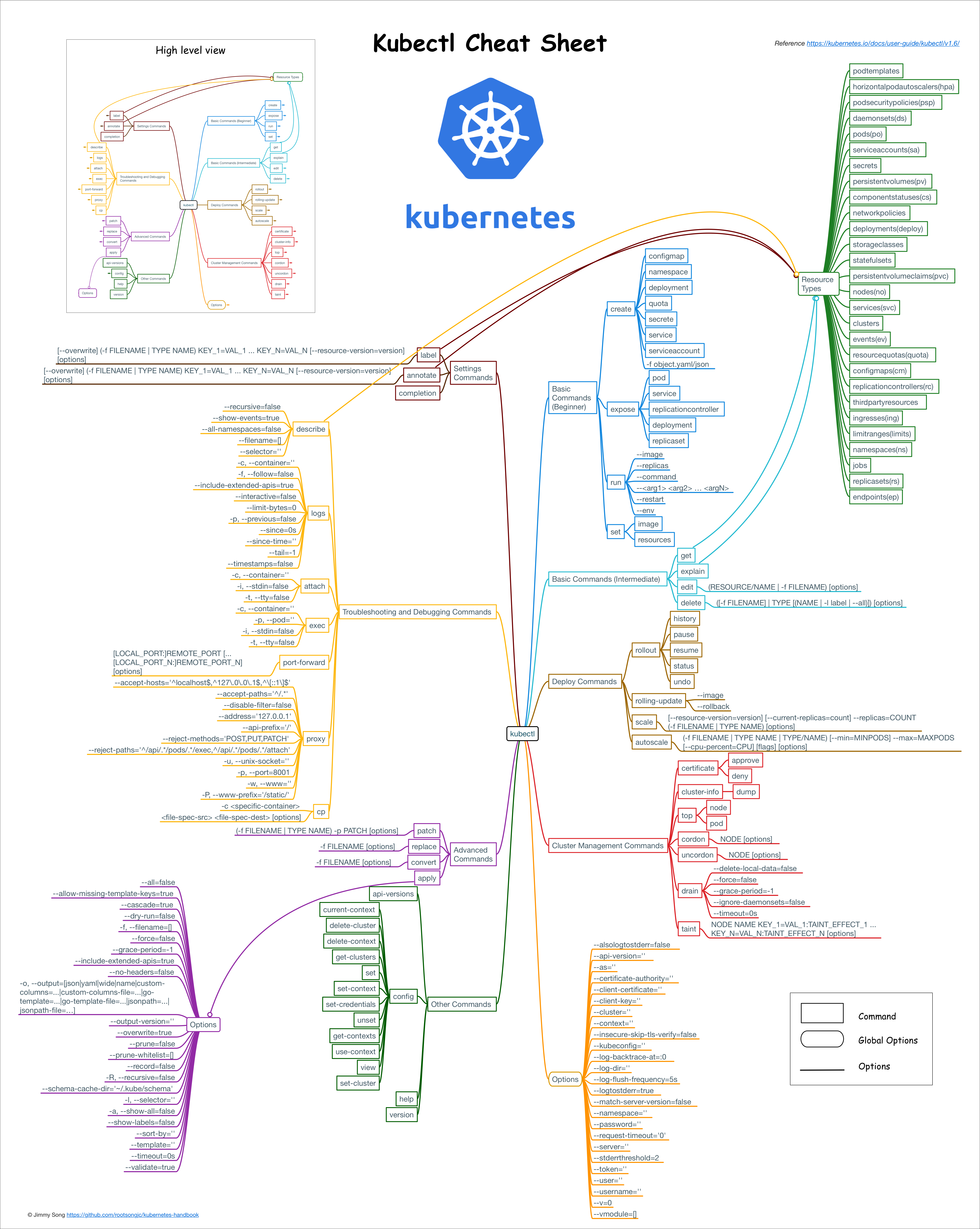

kubectl常用命令

HTTP2加速

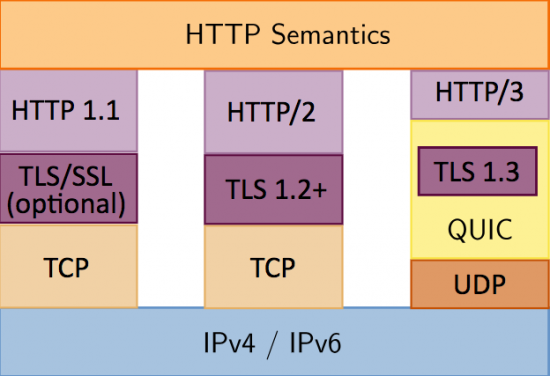

HTTP3/QUIC加速

- HTTP/1.1 有两个主要的缺点:安全不足和性能不高。

- HTTP/2 完全兼容 HTTP/1,头部压缩、多路复用等技术可以充分利用带宽,降低延迟,从而大幅度提高上网体验。但当出现了丢包时,HTTP/2 的表现反倒不如 HTTP/1 了。

- QUIC (Quick UDP Internet Connections) 是HTTP/3中的底层支撑协议。该协议基于UDP,又汲取了TCP中的精华,实现了既快又可靠的协议。

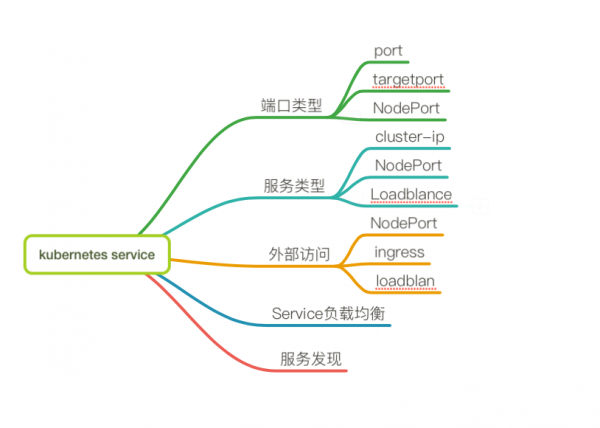

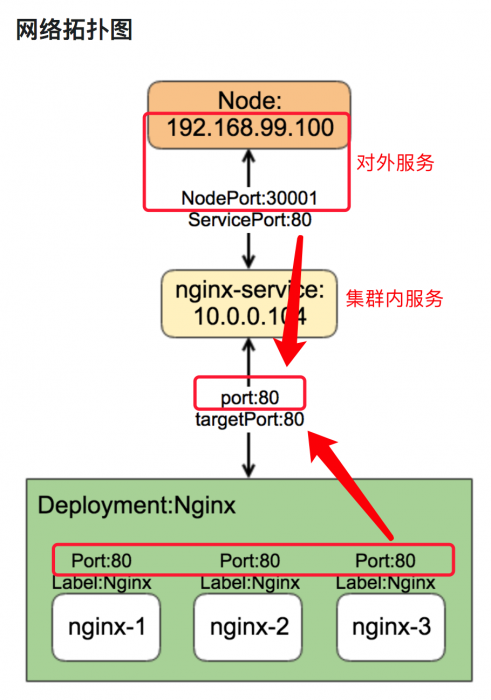

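Differences between nodePort, port, and targetPort

- port: defined on the Service; used for access between services inside the cluster, as clusterIP:port

- nodePort: opened on every node; used for traffic from outside the cluster, as NodeIP:nodePort

- targetPort: the port on the container that the pod listens on

The following two fields usually appear in the application's YAML manifest and act much like the docker -p option:

- containerPort: the port the container exposes

- hostPort: the host port that the container port is mapped to.

The following two fields are used in a Service of type ClusterIP:

- port: the port on the Service's cluster IP

- targetPort: the port of the backend instances (containers) that the cluster IP load-balances to.

The following field is used in a Service of type NodePort:

- nodePort: opens a port on the host of every kubelet node so that clients outside the cluster can reach the application.

targetPort lives on the pod and receives the traffic that kube-proxy forwards from port and nodePort.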
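To see how the three fields sit together in one manifest, the sketch below prints a NodePort Service from a shell function. The function name `render_nodeport_service` and the `app: <name>` selector are assumptions made for illustration; the annotations restate the roles described above.

```shell
#!/bin/sh
# Print a NodePort Service manifest.
# $1 = service name, $2 = port, $3 = targetPort, $4 = nodePort
render_nodeport_service() {
    cat <<EOF
apiVersion: v1
kind: Service
metadata:
  name: $1
spec:
  type: NodePort
  selector:
    app: $1
  ports:
    - port: $2        # clusterIP:port, used inside the cluster
      targetPort: $3  # containerPort of the backing pods
      nodePort: $4    # NodeIP:nodePort, used from outside the cluster
EOF
}
```

For example, `render_nodeport_service nginx-svc 80 80 30080 | kubectl apply -f -` would create the Service, assuming pods labelled `app: nginx-svc` exist.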

🏆 Automated deployment with EasyKubernetes

Approaches to automated deployment

- 🏆 kubeadm❤︎

Preparing the Ansible inventory

    [K8S]
    k8s-m1 ansible_ssh_port=65422 ansible_ssh_host=192.168.13.134 ETCD="yes" K8S="both"
    k8s-n2 ansible_ssh_port=65422 ansible_ssh_host=192.168.13.137 ETCD="yes" K8S="slave"
    k8s-n3 ansible_ssh_port=65422 ansible_ssh_host=192.168.13.140 ETCD="yes" K8S="slave"

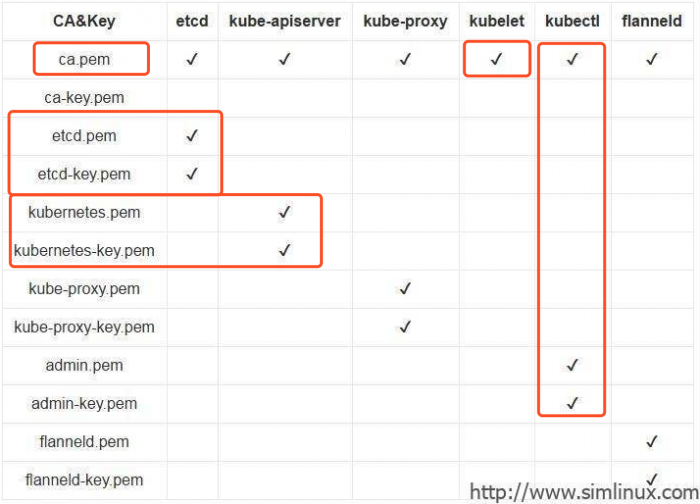

Generating SSL certificates

- ca.pem, ca-key.pem

- etcd.pem, etcd-key.pem

- admin.pem, admin-key.pem

- k8s.pem (kube-apiserver), k8s-key.pem

- kube-controller.pem, kube-controller-key.pem

- kube-scheduler.pem, kube-scheduler-key.pem

- kube-proxy.pem, kube-proxy-key.pem

Packing and unpacking

    K8S_SSL_Repo="http://devops.upyun.com/upyun.k8s.pem.tgz"
    tar zcvf upyun.k8s.pem.tgz etc/*
    tar zxvf upyun.k8s.pem.tgz -C /

Downloading the matching K8S binaries

Repackage the downloaded binaries as upyun.k8s.bin.tgz

    K8S_BIN_Repo="http://devops.upyun.com/upyun.k8s.bin.1.23.tgz"

Initializing the system and the Docker environment

    ansible-playbook -i lists_k8s main.yml -e "host=all" -t init

Deploying a fresh etcd cluster

    ansible-playbook -i lists_k8s main.yml -e "host=all" -t etcd

Deploying a fresh Kubernetes cluster

    ansible-playbook -i lists_k8s main.yml -e "host=all" -t k8s-bin,kube-router

Checking kube-apiserver

    systemctl status kube-apiserver -l
    journalctl -xeu kube-apiserver
    grep "kube-apiserver: E" /var/log/messages

Generating the required *.kubeconfig files

Renaming the Kubernetes configuration files and restarting the components

- controller-manager.kubeconfig: restart

- kube-scheduler.kubeconfig: restart

- bootstrap.kubeconfig: restart

Configuring kubectl subcommand completion

    yum install -y bash-completion
    kubectl completion bash > ~/.kube/completion.bash.inc
    source ~/.kube/completion.bash.inc
    source ~/.bash_profile

Checking the status of all components

    kubectl get componentstatuses
    kubectl describe nodes k8s-m1

Checking CSRs and approving them manually

    kubectl get csr
    kubectl certificate approve node-csr-xxxx

- If you have set up an external signer such as cert-manager, certificate signing requests (CSRs) are approved automatically

- Otherwise, you must approve the certificates manually with the kubectl certificate command.
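When several nodes join at once, approving each CSR by name gets tedious. The following is a small sketch under the assumption that the last column of `kubectl get csr` is CONDITION, as in current kubectl output; the function names are hypothetical.

```shell
#!/bin/sh
# Read `kubectl get csr` table output on stdin and print the names of
# the requests whose last column (CONDITION) is exactly "Pending".
pending_csrs() {
    awk 'NR > 1 && $NF == "Pending" { print $1 }'
}

# Approve every pending CSR, one at a time.
approve_pending_csrs() {
    kubectl get csr | pending_csrs | while read -r name; do
        kubectl certificate approve "$name"
    done
}
```

Review the output of `kubectl get csr | pending_csrs` before running `approve_pending_csrs`, since approving a request grants the requester a signed client certificate.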

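Deploying kube-router and checking the status of all nodes

    kubectl apply -f generic-kuberouter-all-features.yaml
    kubectl get nodes -o wide --all-namespaces

Deploying CoreDNS for in-cluster DNS

    kubectl apply -f coredns.yaml

K8S network preparation

Docker network communication

Network namespaces give each Docker container an isolated network environment: the container has its own complete network stack, separate from the host.

When creating a container with docker run, the --network= option selects the container's network mode. Docker has the following 4 network modes: bridge, host, container, and none.

Container network model

Switch stacking

Switch OSPF

- LVS+OSPF architecture: OSPF suits scenarios that need upstream load balancing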

Deploying K8S with encrypted communication

Certificates required by each Kubernetes component

Authorizing the kubelet-bootstrap role

    kubectl create clusterrolebinding kubelet-bootstrap \
      --clusterrole=system:node-bootstrapper \
      --user=kubelet-bootstrap
    # Alternative: bind the bootstrap group instead (the binding name is the same, so use one of the two)
    kubectl create clusterrolebinding kubelet-bootstrap \
      --clusterrole=system:node-bootstrapper \
      --group=system:bootstrappers
    kubectl create clusterrolebinding kubelet-nodes \
      --clusterrole=system:node \
      --group=system:nodes

    kubectl describe clusterrole system:kube-controller-manager
    kubectl delete clusterrole system:kube-controller-manager

Deploying kube-controller-manager with encryption

Creating the kube-controller-manager certificate and private key

    mkcontroller(){
    cat > kube-controller-csr.json <<EOF
    {
      "CN": "kubernetes",
      "hosts": [
    EOF
    echo -en $HOST >> kube-controller-csr.json
    cat >> kube-controller-csr.json <<EOF
        "192.168.0.0/16",
        "kubernetes",
        "kubernetes.default",
        "kubernetes.default.svc",
        "kubernetes.default.svc.cluster",
        "kubernetes.default.svc.cluster.local"
      ],
      "key": {
        "algo": "rsa",
        "size": 2048
      },
      "names": [
        {
          "C": "CN",
          "ST": "ZJ",
          "L": "HZ",
          "O": "system:kube-controller-manager",
          "OU": "$OU"
        }
      ]
    }
    EOF
    cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kube-controller-csr.json | cfssljson -bare kube-controller
    }

Creating and distributing the kubeconfig file

    # kube-controller-manager kubeconfig
    # Set the cluster parameters
    kubectl config set-cluster kubernetes \
      --certificate-authority=/etc/kubernetes/ssl/ca.pem \
      --embed-certs=true \
      --server=${KUBE_APISERVER} \
      --kubeconfig=controller-manager.kubeconfig.${node_name}
    # Set the client credentials
    kubectl config set-credentials system:kube-controller-manager \
      --client-certificate=/etc/kubernetes/ssl/kube-controller.pem \
      --client-key=/etc/kubernetes/ssl/kube-controller-key.pem \
      --embed-certs=true \
      --kubeconfig=controller-manager.kubeconfig.${node_name}
    # Set the context parameters
    kubectl config set-context system:kube-controller-manager \
      --cluster=kubernetes \
      --user=system:kube-controller-manager \
      --kubeconfig=controller-manager.kubeconfig.${node_name}
    # Set the default context
    kubectl config use-context system:kube-controller-manager --kubeconfig=controller-manager.kubeconfig.${node_name}

Creating the kube-controller-manager systemd unit template

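Advanced K8S: the monitoring system

The main monitoring options for a Kubernetes cluster are currently:

- Heapster: a cluster-wide monitoring and data aggregation tool that runs as a Pod in the cluster. Besides Kubelet/cAdvisor, other metric sources such as kube-state-metrics can be added to Heapster. Note that Heapster has been deprecated; later versions use metrics-server instead.

- cAdvisor: Google's open-source container resource monitoring and performance analysis tool. It was built specifically for containers and supports Docker containers natively.

- kube-state-metrics: watches the API Server and generates state metrics about resource objects such as Deployments, Nodes, and Pods. Note that kube-state-metrics only exposes the metrics and does not store them, so Prometheus can be used to scrape and store the data.

- metrics-server: also a cluster-wide resource data aggregator and the replacement for Heapster. Likewise, metrics-server only exposes data and provides no storage. Since version 1.7, k8s embeds a slimmed-down cAdvisor in the kubelet, so metrics-server can fetch monitoring data directly from the kubelet.

- kube-state-metrics focuses on business-related metadata, such as the state of Deployments, Pods, and replicas

- metrics-server focuses on implementing the resource metrics API, covering metrics such as CPU, file descriptors, memory, and request latency.

Monitoring targets include:

- Switch and server hardware (via SNMP) → Zabbix 4.2 new features in practice: Prometheus integration

- Basic server metrics (cpu, memory, disk, netcard, irq, ioutils) → prometheus

- Container metrics (resource isolation parameters, resource usage, and the full history of network statistics) → cAdvisor

A reasonable architecture:

    # The kubelet's cAdvisor listens on port 4194 on all interfaces by default; the iptables rules below restrict access to internal networks
    ExecStartPost=/sbin/iptables -A INPUT -s 172.30.0.0/16 -p tcp --dport 4194 -j ACCEPT
    ExecStartPost=/sbin/iptables -A INPUT -s 172.16.0.0/16 -p tcp --dport 4194 -j ACCEPT
    ExecStartPost=/sbin/iptables -A INPUT -s 192.168.0.0/16 -p tcp --dport 4194 -j ACCEPT
    ExecStartPost=/sbin/iptables -A INPUT -p tcp --dport 4194 -j DROP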

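Prometheus features

Compared with other monitoring systems, Prometheus stands out for:

- A multi-dimensional data model (time series are identified by a metric name and a set of key/value pairs)

- A flexible query language over those dimensions (PromQL)

- No reliance on distributed storage; a single server node works autonomously

- Time series collection via an HTTP-based pull model

- Support for pushing time series through an intermediary gateway

- Targets discovered through service discovery or static configuration

- Multiple modes of visualization and dashboard support

Prometheus components

The Prometheus ecosystem consists of several components, many of which are optional:

- The main Prometheus server, which scrapes and stores time series data using the pull model

- Client libraries for instrumenting applications or writing exporters (go, java, python, ruby)

- Pushgateway: for short-lived jobs such as scheduled tasks, pull-based collection can miss the data because the job may finish before Prometheus scrapes it. A relay layer solves this: the client pushes its data to the gateway, which caches it, and Prometheus pulls the metrics from the Pushgateway. (This requires deploying a Pushgateway and adding a job that scrapes it.)

- Visualization dashboards (two options, promdash and grafana; grafana is the mainstream choice today)

- Exporters for special-purpose data (for services such as HAProxy, StatsD, and Graphite)

- A customizable, pluggable alert manager (Alertmanager, which handles alert aggregation, routing, and silencing on its own)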
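As a sketch of the Pushgateway flow described in the component list, a short-lived job can push one sample over HTTP before it exits. The helper name `push_metric`, the example metric, and the default gateway address `http://localhost:9091` are assumptions; the `/metrics/job/<job>` path is the Pushgateway's push endpoint.

```shell
#!/bin/sh
# Push a single sample to a Pushgateway.
# $1 = job name, $2 = metric name, $3 = value,
# $4 = Pushgateway base URL (hypothetical default: http://localhost:9091)
push_metric() (
    gateway="${4:-http://localhost:9091}"
    printf '%s %s\n' "$2" "$3" |
        curl -fsS --data-binary @- "$gateway/metrics/job/$1"
)
```

A nightly backup script could end with `push_metric backup backup_last_success_timestamp "$(date +%s)"`, and Prometheus would then scrape that value from the gateway on its normal schedule.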

Installing Prometheus

    wget https://github.com/prometheus/prometheus/releases/download/v2.9.2/prometheus-2.9.2.linux-amd64.tar.gz
    tar zxvf prometheus-2.9.2.linux-amd64.tar.gz
    mv prometheus-2.9.2.linux-amd64 /opt/prometheus
    useradd -m -d /var/lib/prometheus -s /sbin/nologin prometheus
    chown -R prometheus:prometheus /opt/prometheus

vi /etc/profile.d/prometheus.sh

    export PATH=$PATH:/opt/prometheus

vi /etc/systemd/system/prometheus.service

    [Unit]
    Description=Prometheus Server
    Documentation=https://prometheus.io/docs/introduction/overview/
    After=network-online.target

    [Service]
    User=prometheus
    Group=prometheus
    Restart=on-failure
    ExecStart=/opt/prometheus/prometheus \
      --config.file=/opt/prometheus/prometheus.yml \
      --storage.tsdb.path=/opt/prometheus/data \
      --storage.tsdb.retention.time=30d \
      --enable-feature=remote-write-receiver

    [Install]
    WantedBy=multi-user.target

Per-machine installation: various exporters; custom metric keys can also be defined

- node-exporter is the exporter officially recommended by Prometheus

- HAProxy exporter

- Collectd exporter

- SNMP exporter

- MySQL server exporter

- …

Hot-reloading Prometheus

    # 1. Send SIGHUP to the Prometheus process
    kill -HUP pid
    # 2. Or call the reload endpoint (requires starting Prometheus with --web.enable-lifecycle)
    curl -X POST http://localhost:9090/-/reload

Prometheus ships a handy tool, promtool, that checks whether a configuration file is valid. Usage (explore the other subcommands on your own):

    ./promtool check config prometheus.yml
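The check and the reload combine naturally into one step, so that a broken configuration never reaches the running server. The following is a sketch only: the function name `reload_prometheus` is hypothetical, the default paths follow the installation above, and the HTTP reload assumes Prometheus was started with `--web.enable-lifecycle`.

```shell
#!/bin/sh
# Validate the configuration first; reload only when promtool accepts it.
# $1 = config file (default: /opt/prometheus/prometheus.yml)
# $2 = Prometheus base URL (default: http://localhost:9090)
reload_prometheus() (
    config="${1:-/opt/prometheus/prometheus.yml}"
    url="${2:-http://localhost:9090}"
    promtool check config "$config" || {
        echo "config invalid, not reloading" >&2
        exit 1
    }
    curl -fsS -X POST "$url/-/reload"
)
```

Running `reload_prometheus` after each edit of prometheus.yml returns a non-zero status and leaves the server untouched when the check fails.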

Installing node_exporter

Installing node_exporter with Docker

    docker run -tid --restart=always --name=node_exporter \
      --net="host" \
      --pid="host" \
      -v "/:/host:ro,rslave" \
      quay.io/prometheus/node-exporter \
      --path.rootfs /host

/etc/systemd/system/node_exporter.service

    [Unit]
    Description=Node_exporter
    DefaultDependencies=no

    [Service]
    Type=simple
    User=node_exporter
    RemainAfterExit=yes
    # Adjust the paths to match your installation
    ExecStart=/opt/node_exporter/node_exporter --collector.textfile.directory=/data/node_exporter/ --web.listen-address=:9100
    Restart=on-failure

    [Install]
    WantedBy=multi-user.target

/etc/supervisor.d/node_exporter.conf

    [program:node_exporter]
    command = /opt/node_exporter/node_exporter
    autostart = true
    autorestart = true
    stdout_logfile = NONE
    stderr_logfile = NONE

Adding the node_exporter job to prometheus.yml

    - job_name: 'node-exporter'
      static_configs:
        - targets: ['192.168.21.20:9100']
          labels:
            instance: vpn

Installing ceph_exporter

    docker run -tid --restart=always -v /etc/ceph:/etc/ceph -p=9128:9128 digitalocean/ceph_exporter

Adding the ceph_exporter job to prometheus.yml

    - job_name: 'ceph-exporter'
      static_configs:
        - targets: ['192.168.146.11:9128','192.168.146.12:9128','192.168.146.13:9128','192.168.146.14:9128']
          labels:
            instance: ceph

Installing snmp_exporter

By default snmp_exporter uses SNMP version 1 and no community is specified.

Regenerating the snmp.yml file

    yum install -y gcc gcc-c++ make
    yum install -y net-snmp net-snmp-utils net-snmp-libs net-snmp-devel
    go get github.com/prometheus/snmp_exporter/generator
    cd ${GOPATH-$HOME/go}/src/github.com/prometheus/snmp_exporter/generator
    go build
    make mibs

Add the following to generator.yml

    version: 2  # SNMP version to use. Defaults to 2.
    auth:
      # Community string is used with SNMP v1 and v2. Defaults to "public".
      community: public

Then run the generator:

    export MIBDIRS=mibs
    ./generator generate

/etc/systemd/system/snmp_exporter.service

    [Unit]
    Description=prometheus
    After=network.target

    [Service]
    Type=simple
    User=prometheus
    ExecStart=/opt/snmp_exporter/snmp_exporter --config.file=/opt/snmp_exporter/snmp.yml
    Restart=on-failure

    [Install]
    WantedBy=multi-user.target

Testing snmp_exporter forwarding

    curl 'http://<snmp_exporter-host-IP>:9116/snmp?target=<monitored-switch-IP>'

Adding the snmp job to prometheus.yml

    - job_name: 'snmp'
      scrape_interval: 120s
      scrape_timeout: 120s
      static_configs:
        - targets:
            - 172.16.1.5  # IP of the switch to monitor
          labels:
            instance: h3c
            location: SAD
      metrics_path: /snmp
      params:
        module: [if_mib]  # use the if_mib module of snmp_exporter
      relabel_configs:
        - source_labels: [__address__]
          target_label: __param_target
        - source_labels: [__param_target]
          target_label: instance
        - target_label: __address__
          replacement: 192.168.5.76:9116  # address of the host running snmp_exporter

Installing mysql_exporter

Granting privileges to the database user

    GRANT REPLICATION CLIENT, PROCESS ON *.* TO 'exporter'@'127.0.0.1' identified by 'upyun.com123';
    GRANT SELECT ON performance_schema.* TO 'exporter'@'127.0.0.1';

Running with Docker

    docker pull prom/mysqld-exporter
    docker run -d \
      --net="host" \
      -e DATA_SOURCE_NAME="exporter:upyun.com123@(127.0.0.1:3306)/" \
      prom/mysqld-exporter

Adding the mysql job to prometheus.yml

    - job_name: 'mysql-exporter'
      static_configs:
        - targets: ['192.168.144.109:9104']
          labels:
            instance: mysql

Test it from the command line first

    curl http://192.168.144.109:9104/metrics

Data visualization with Grafana

Grafana dashboards offer attractive charts and layouts, full-featured metric dashboards and graph editors, and support Graphite, Zabbix, InfluxDB, Prometheus, and OpenTSDB as data sources.

Main Grafana features:

- Flexible and rich graphing options;

- Multiple styles can be mixed;

- Light and dark themes;

- Multiple data sources;

Installing Grafana

    wget https://dl.grafana.com/oss/release/grafana-6.1.6.linux-amd64.tar.gz
    tar zxvf grafana-6.1.6.linux-amd64.tar.gz
    mv grafana-6.1.6 /opt/grafana

Create the grafana user and group

    groupadd grafana
    useradd -g grafana -m -d /var/lib/grafana -s /sbin/nologin grafana

/etc/sysconfig/grafana-server

    GRAFANA_USER=grafana
    GRAFANA_GROUP=grafana
    GRAFANA_HOME=/opt/grafana
    LOG_DIR=/var/log
    DATA_DIR=/var/lib/grafana
    MAX_OPEN_FILES=10000
    CONF_DIR=/opt/grafana
    CONF_FILE=/opt/grafana/conf/grafana.ini
    RESTART_ON_UPGRADE=true
    PLUGINS_DIR=/var/lib/grafana/plugins
    PROVISIONING_CFG_DIR=/opt/grafana/conf/provisioning
    # Only used on systemd systems
    PID_FILE_DIR=/var/lib/grafana

/etc/systemd/system/grafana.service

    ExecStart=/opt/grafana/bin/grafana-server \
      --homepath /opt/grafana \
      --config=${CONF_FILE} \
      --pidfile=${PID_FILE_DIR}/grafana-server.pid \
      cfg:default.paths.logs=${LOG_DIR} \
      cfg:default.paths.data=${DATA_DIR} \
      cfg:default.paths.plugins=${PLUGINS_DIR} \
      cfg:default.paths.provisioning=${PROVISIONING_CFG_DIR}

Installing Grafana plugins

If a plugin is missing, install it, for example the pie chart panel plugin

    for i in grafana-piechart-panel snuids-trafficlights-panel; do
      grafana-cli plugins install $i
    done
    vi /opt/grafana/conf/grafana.ini
    ...
    plugins = /data/grafana/plugins
    systemctl restart grafana

Configuring Grafana alerting

Grafana added alerting in version 4.0; here is a brief introduction. Grafana alert setup has two parts:

- Notification configuration

- Alert configuration

Notification configuration:

This configures the alert delivery channels. Grafana supports several, including email and webhook. Because webhooks are the most general, the focus here is on configuring a webhook.

Alert configuration:

The alert config page sets the alert thresholds, alert conditions, and so on.

The Alertmanager alerting component

In some cases, beyond the several notification methods built into Alertmanager, users and organizations need custom notification channels. Alertmanager's webhook support makes this kind of extension easy. Besides enabling additional notification channels, webhooks can integrate with third-party systems to drive operations automation or elastic scaling.

    wget https://github.com/prometheus/alertmanager/releases/download/v0.17.0/alertmanager-0.17.0.linux-amd64.tar.gz
    tar zxvf alertmanager-0.17.0.linux-amd64.tar.gz
    mv alertmanager-0.17.0.linux-amd64 /opt/alertmanager

vi alertmanager.yml

    global:
      resolve_timeout: 5m
    route:
      receiver: webhook
      group_wait: 30s
      group_interval: 5m
      repeat_interval: 4h
      group_by: [alertname]
      routes:
        - receiver: webhook
          group_wait: 10s
          match:
            team: node
    receivers:
      - name: webhook
        webhook_configs:
          - url: http://localhost:8060/dingtalk/webhook1/send
            send_resolved: true

vi /etc/systemd/system/alertmanager.service

    [Unit]
    Description=Alertmanager
    After=network.target

    [Service]
    Type=simple
    User=prometheus
    ExecStart=/opt/alertmanager/alertmanager --config.file=/opt/alertmanager/alertmanager.yml --storage.path=/data/alertmanager
    Restart=on-failure

    [Install]
    WantedBy=multi-user.target

Alertmanager alerting rules

Besides storing data, Prometheus provides PromQL, a powerful functional expression language that lets users select and aggregate time series data in real time.

The result of an expression can be shown in the browser as a graph or as tabular data, or consumed by external systems through the HTTP API. PromQL makes it easy to query monitoring data and to define alerts from expressions.
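To make the link between a PromQL expression and an alert concrete, the sketch below writes a one-rule file. The function name, the output path, the rule name `InstanceDown`, and the `for: 2m` window are assumptions; the `team: node` label is chosen so the alert would match the `match: team: node` route in the alertmanager.yml shown earlier, and the `node-exporter` job name follows the scrape job in this handbook. Prometheus would still need the file listed under `rule_files` and an Alertmanager target under `alerting` in prometheus.yml.

```shell
#!/bin/sh
# Write a minimal alerting rule file.
# $1 = output path (hypothetical, e.g. /opt/prometheus/rules/node.yml)
write_node_rules() {
    # The quoted 'EOF' keeps the shell from expanding $labels.
    cat > "$1" <<'EOF'
groups:
  - name: node
    rules:
      - alert: InstanceDown
        # up == 0 means the last scrape of the target failed
        expr: up{job="node-exporter"} == 0
        for: 2m
        labels:
          team: node
        annotations:
          summary: "{{ $labels.instance }} is down"
EOF
}
```

The generated file can be validated with `promtool check rules <file>` before Prometheus is reloaded.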

Integrating Alertmanager with DingTalk

DingTalk custom robot "New internal beta alerts"

13396815591/GeminisXXX https://oapi.dingtalk.com/robot/send?access_token=0f0b2e4b29ccedbfa6ef2b609dbbc1dbda3aa074c4f198337acad55119f3cba5

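Installing prometheus-webhook-dingtalk with Docker

    docker pull timonwong/prometheus-webhook-dingtalk
    docker run -tid --restart=always --name dingtalk -p 8060:8060 timonwong/prometheus-webhook-dingtalk --ding.profile="webhook1=https://oapi.dingtalk.com/robot/send?access_token=token1"

Note that the profile name in webhook1= must match the name used in the webhook URL of the Alertmanager configuration.

Advanced K8S: container network frameworks

    ifconfig bond0 mtu 9000 up
    ip link set dev bond0 mtu 9000

Note: the MTU must be agreed on for container-cloud traffic across data centers (see /sys/class/net/ethX/mtu); for example, an MTU of 9000 requires every device on the path to use the same value.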
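A quick way to spot a host whose interfaces disagree with the agreed MTU is to read the sysfs files mentioned in the note. This is a sketch; the function name `mtu_mismatches` and the optional second argument (an alternative sysfs root, useful for testing) are assumptions.

```shell
#!/bin/sh
# Print "<interface> <mtu>" for every interface whose MTU differs
# from the expected value.
# $1 = expected MTU, $2 = sysfs net directory (default: /sys/class/net)
mtu_mismatches() (
    expected="$1"
    root="${2:-/sys/class/net}"
    for f in "$root"/*/mtu; do
        [ -r "$f" ] || continue
        actual="$(cat "$f")"
        if [ "$actual" != "$expected" ]; then
            echo "$(basename "$(dirname "$f")") $actual"
        fi
    done
)
```

Running `mtu_mismatches 9000` on each node lists the interfaces that still need adjusting; loopback and virtual interfaces will usually show up too and can be ignored.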

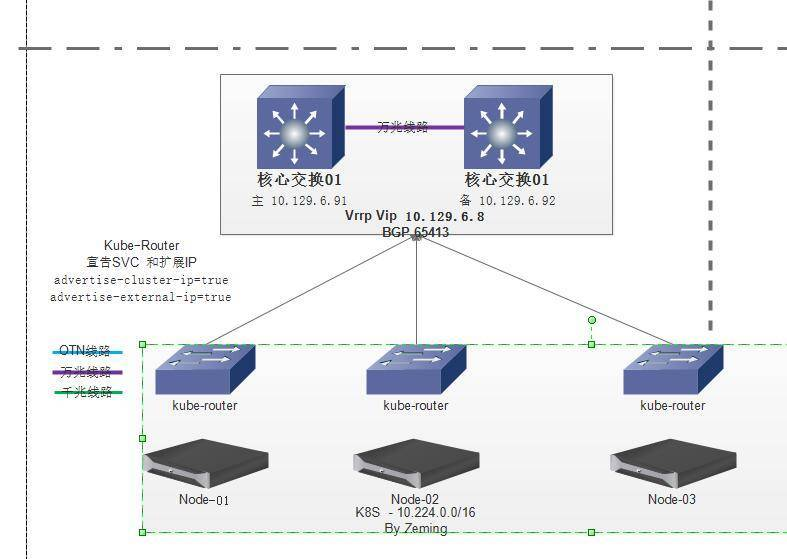

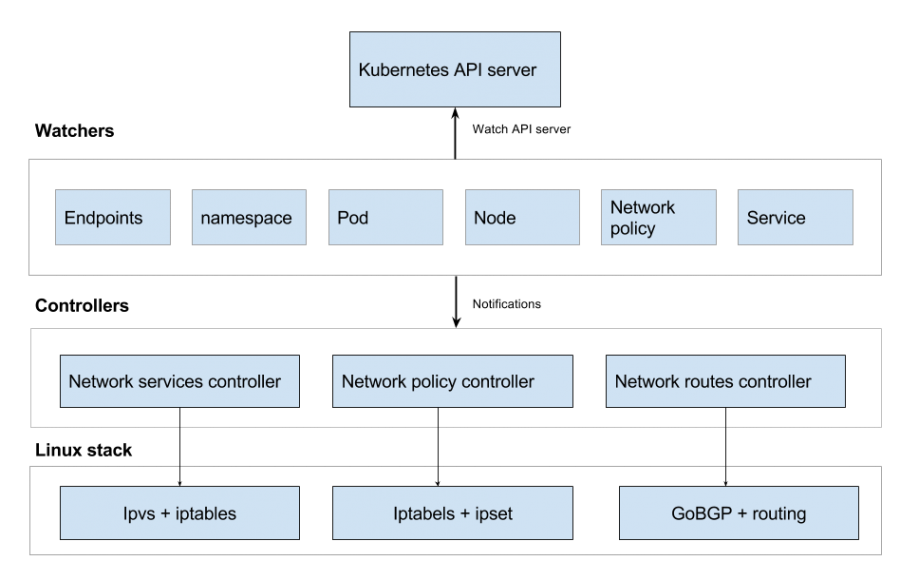

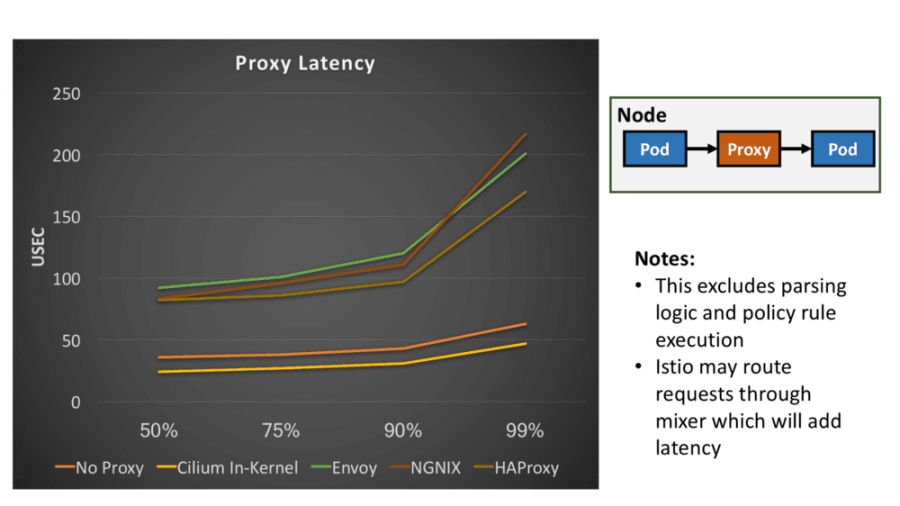

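Kube-router: a network framework based on BGP and IPVS

Main improvements

- Replaces the kube-proxy component, so kube-proxy no longer needs to be deployed; it handles the service network, integrates network policy, and strengthens network-layer control

- Ships its own CNI and builds the network with BGP, which makes routes easier to advertise and integrate; the previous approach required Calico or another solution

- Forwards with IPVS instead of iptables, offering load-balancing options such as rr, source-IP hashing, and IP session persistence, with better performance

- Route propagation relies on BGP

Load-balancing scheduling algorithms

Kube-router uses LVS as the service proxy. LVS supports a rich set of scheduling algorithms, and you can annotate a service to choose one.

When a service has no annotation, round-robin scheduling is used by default.

    # For least connection scheduling use:
    kubectl annotate service my-service "kube-router.io/service.scheduler=lc"
    # For round-robin scheduling use:
    kubectl annotate service my-service "kube-router.io/service.scheduler=rr"
    # For source hashing scheduling use:
    kubectl annotate service my-service "kube-router.io/service.scheduler=sh"
    # For destination hashing scheduling use:
    kubectl annotate service my-service "kube-router.io/service.scheduler=dh"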

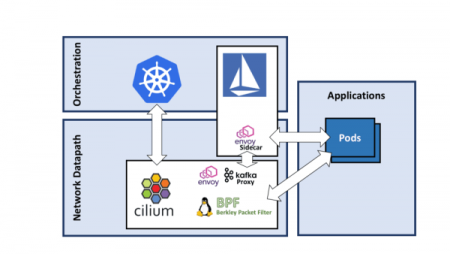

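Cilium: a high-performance network framework based on eBPF and XDP

Advanced K8S: ConfigMap and Secret

    redis-cli --cluster create --cluster-replicas 1 \
      10.109.218.140:6379 10.100.194.81:6379 \
      10.105.204.209:6379 10.97.40.13:6379 \
      10.108.48.154:6379 10.105.130.101:6379 \
      10.96.33.238:6379 10.97.154.41:6379
    redis-cli -c -h 10.109.218.140 -p 6379 cluster info

Introduction to Secrets

A Secret solves the problem of configuring sensitive data such as passwords, tokens, and keys without exposing it in the image or the Pod spec. A Secret can be consumed as a volume or as environment variables.

Secret types

There are currently 3 types of Secret:

- Opaque (default): arbitrary strings stored as base64-encoded values, used for passwords, keys, and the like;

- kubernetes.io/service-account-token: used by a ServiceAccount, as described in the Kubernetes Service Account documentation. It is used to access the Kubernetes API, is created automatically by Kubernetes, and is mounted automatically into the Pod at /run/secrets/kubernetes.io/serviceaccount;

- kubernetes.io/dockercfg: used for a Docker registry when pulling images; it stores the credentials of a private docker registry.

The Opaque Secret type

    # The data of an Opaque Secret is a map whose values must be base64-encoded:
    ## Create the admin account
    echo -n "admin" | base64
    YWRtaW4=
    echo -n "1f2d1e2e67df" | base64
    MWYyZDFlMmU2N2Rm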
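Because base64 is an encoding rather than encryption, anyone who can read the Secret can recover the value. The helpers below are a small sketch for encoding values and checking what a manifest really contains; the function names are hypothetical and `base64 -d` assumes GNU coreutils.

```shell
#!/bin/sh
# Encode a value for the data: section of a Secret (no trailing newline).
b64_encode() {
    printf '%s' "$1" | base64
}

# Decode a value copied from a Secret manifest.
b64_decode() {
    printf '%s' "$1" | base64 -d
}
```

For instance, `b64_encode admin` prints `YWRtaW4=`, and `b64_decode cm9vdA==` prints `root`. Using `printf '%s'` instead of a plain `echo` avoids encoding a trailing newline by accident.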

ceph-config.yaml

    apiVersion: storage.k8s.io/v1
    kind: StorageClass
    metadata:
      name: ceph-web
    provisioner: kubernetes.io/rbd
    parameters:
      monitors: 192.168.146.11,192.168.146.12,192.168.146.13,192.168.146.14
      adminId: admin
      adminSecretName: ceph-secret
      adminSecretNamespace: lnmp
      pool: rbd
      userId: admin
      userSecretName: ceph-secret
      fsType: xfs
      imageFormat: "1"
      imageFeatures: "layering"
    ---
    apiVersion: v1
    kind: Secret
    metadata:
      name: ceph-secret
      namespace: lnmp
    type: "kubernetes.io/rbd"
    data:
      # The key from ceph.client.admin.keyring, base64-encoded
      key: QVFESUQzTmEyeFcrTnhBQXlPdlYxZ0NwWHFqR0tlR29wdDYxNkE9PQ==

nginx-configmap.yaml

    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: nginx-config-vol
      namespace: lnmp
      labels:
        app: nginx
    data:
      default.conf: |
        server {
            listen 80;
            server_name "";
            root /usr/html;
            index index.php index.html index.htm;
            error_page 404 /404.html;
            location = /40x.html {
            }
            error_page 500 502 503 504 /50x.html;
            location = /50x.html {
            }
            location ~ .*\.(js|css|html|png|jpg|gif|mp4|svg|apk|pbk)$ {
                expires 7d;
            }
            location ~ \.php$ {
                fastcgi_pass php-svc:9000;
                fastcgi_index index.php;
                include fastcgi_params;
                fastcgi_param SCRIPT_FILENAME $document_root$fastcgi_script_name;
            }
        }

nginx-deployment.yaml

    apiVersion: apps/v1  # apps/v1beta1 and apps/v1beta2 were removed in Kubernetes 1.16
    kind: Deployment
    metadata:
      name: nginx-deployment
      namespace: lnmp
      labels:
        app: nginx
    spec:
      replicas: 3
      selector:
        matchLabels:
          app: nginx
      template:
        metadata:
          labels:
            app: nginx
        spec:
          containers:
            - name: nginx
              image: docker.io/smebberson/alpine-nginx
              ports:
                - containerPort: 80
              volumeMounts:
                - name: nfs-pv
                  mountPath: /usr/html
                - name: config
                  mountPath: /etc/nginx/conf.d
          volumes:
            # NFS export that holds the site content
            - name: nfs-pv
              nfs:
                path: /mnt/nfs
                server: 192.168.13.142
            - name: config
              configMap:
                name: nginx-config-vol
    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: nginx-svc
      namespace: lnmp
      labels:
        app: nginx
    spec:
      type: NodePort
      selector:
        app: nginx
      ports:
        - port: 80
          targetPort: 80
          nodePort: 30080

mysql-secret.yaml

    apiVersion: v1
    kind: Secret
    type: Opaque
    metadata:
      name: mysql-secrets
      namespace: lnmp
      labels:
        app: mysql
    data:
      # upyun123
      root-password: dXB5dW4xMjM=
      # root
      root-user: cm9vdA==

mysql-deployment.yaml

    apiVersion: apps/v1  # apps/v1beta1 was removed in Kubernetes 1.16
    kind: StatefulSet
    metadata:
      name: mysql
      namespace: lnmp
    spec:
      serviceName: mysql-svc
      replicas: 3
      selector:  # required by apps/v1
        matchLabels:
          app: mysql
      template:
        metadata:
          labels:
            app: mysql
        spec:
          initContainers:
            - name: copy-mariadb-config
              image: busybox
              command: ['sh', '-c', 'cp /configmap/* /etc/mysql/conf.d']
              volumeMounts:
                - name: configmap
                  mountPath: /configmap
                - name: config
                  mountPath: /etc/mysql/conf.d
          containers:
            - name: mysql
              image: ausov/k8s-mariadb-cluster
              ports:
                - containerPort: 3306
                  name: mysql
                - containerPort: 4444
                  name: sst
                - containerPort: 4567
                  name: replication
                - containerPort: 4568
                  name: ist
              env:
                - name: GALERA_SERVICE
                  value: "mysql-svc"
                - name: POD_NAMESPACE
                  valueFrom:
                    fieldRef:
                      apiVersion: v1
                      fieldPath: metadata.namespace
                - name: MYSQL_ROOT_PASSWORD
                  valueFrom:
                    secretKeyRef:
                      name: mysql-secrets
                      key: root-password
                - name: MYSQL_ROOT_USER
                  valueFrom:
                    secretKeyRef:
                      name: mysql-secrets
                      key: root-user
                - name: MYSQL_INITDB_SKIP_TZINFO
                  value: "yes"
              readinessProbe:
                exec:
                  command: ["bash", "-c", "mysql -uroot -p\"${MYSQL_ROOT_PASSWORD}\" -e 'show databases;'"]
                initialDelaySeconds: 20
                timeoutSeconds: 5
              volumeMounts:
                - name: config
                  mountPath: /etc/mysql/conf.d
                - name: datadir
                  mountPath: /var/lib/mysql
          volumes:
            - name: config
              emptyDir: {}
            - name: configmap
              configMap:
                name: mysql-config-vol
                items:
                  - path: "galera.cnf"
                    key: galera.cnf
                  - path: "mariadb.cnf"
                    key: mariadb.cnf
      volumeClaimTemplates:
        - metadata:
            name: datadir
            annotations:
              volume.beta.kubernetes.io/storage-class: "ceph-web"
          spec:
            accessModes: [ "ReadWriteOnce" ]
            resources:
              requests:
                storage: 1Gi
    ---
    apiVersion: v1
    kind: Service
    metadata:
      annotations:
        service.alpha.kubernetes.io/tolerate-unready-endpoints: "true"
      name: mysql-svc
      namespace: lnmp
      labels:
        app: mysql
        tier: data
    spec:
      selector:
        app: mysql
      ports:
        - port: 3306
          targetPort: 3306
          name: mysql
      clusterIP: None

Advanced K8s: Writing Service YAML

References

Kubernetes RBAC

References

    # kube-apiserver must be started with --authorization-mode=RBAC
    kubectl create clusterrolebinding kubelet-node-clusterbinding --clusterrole=system:node --user=system:node:k8s-m1
    kubectl get clusterrolebindings -o wide --all-namespaces
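The same binding can also be kept as a manifest under version control. The sketch below prints a manifest intended to be equivalent to the `kubectl create clusterrolebinding` command above; the function name is hypothetical, and `rbac.authorization.k8s.io/v1` is assumed to be available on the cluster:

```shell
# Print a ClusterRoleBinding that grants the system:node ClusterRole
# to one node user. The binding name follows the command above.
node_binding_manifest() {
  node_name="$1"
  cat <<EOF
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: kubelet-node-clusterbinding
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:node
subjects:
  - apiGroup: rbac.authorization.k8s.io
    kind: User
    name: system:node:${node_name}
EOF
}

node_binding_manifest k8s-m1
```

Piping the output to `kubectl apply -f -` would create or update the binding.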

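Advanced K8s: Container Log Collection and Analysis

References

Advanced K8s: Distributed Tracing Instrumentation

References

Distributed tracing systems are evolving quickly and come in many varieties, but they generally share three core steps: code instrumentation, data storage, and query/visualization.

Advanced K8s: Persistent Storage

References

Ceph & MySQL

    yum install -y ceph-common   # install on every node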

failed to create rbd image

rbd: extraneous parameter

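Getting PVC information

    #!/bin/sh
    # Column 4 of `kubectl get pvc --all-namespaces` is the bound volume name (pvc-...)
    kubectl get pvc --all-namespaces | awk '/pvc-/{print $4}' > xx
    while read -r LINE; do
      echo "$LINE"
      rbd info "kubernetes-dynamic-$LINE"
    done < xx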

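Advanced K8s: Elastic Awareness and Horizontal Scaling

References

Advanced K8s: Service Registration, Discovery, and Load Balancing

Automatic service registration and health checking

- consul

- etcd

Automatic service discovery

- consul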

For non-RBAC deployments, you'll need to edit the resulting yaml before applying it:

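Service load balancing

Deploying Nginx or HAProxy to Kubernetes: Nginx or HAProxy can be deployed as a ReplicaSet or DaemonSet that specifies the Nginx or HAProxy container image. Store the proxy's configuration file in a ConfigMap and mount the ConfigMap as a volume. Create a Service of type LoadBalancer to expose the gateway through an Azure load balancer.

Also consider running the gateway on a dedicated set of nodes in the cluster. Benefits of this approach include:

Advanced K8s: Building Services with CRDs

Operator pattern

- Pluggability

- Resource characteristics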

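🏆K8s Ecosystem: KubeVela in Practice

K8s application deployment plan

KubeVela is built around cloud-native application delivery and management; the application delivery model behind it is the Open Application Model (OAM).

Every application deployment plan consists of four parts: components, traits (operational capabilities), policies, and workflow.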
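A rough sketch of that four-part layout, with field names taken from the first-app.yaml example in this handbook. The function name is hypothetical, and everything in angle brackets is a placeholder to be filled in by hand, not a real value:

```shell
# Print an Application skeleton showing where each of the four parts lives.
application_skeleton() {
  cat <<'EOF'
apiVersion: core.oam.dev/v1beta1
kind: Application
metadata:
  name: <application name>
spec:
  components:          # 1. components
    - name: <component name>
      type: <component type>
      properties: {}
      traits:          # 2. traits, attached per component
        - type: <trait type>
          properties: {}
  policies:            # 3. policies
    - name: <policy name>
      type: <policy type>
      properties: {}
  workflow:            # 4. workflow
    steps:
      - name: <step name>
        type: <step type>
        properties: {}
EOF
}

application_skeleton
```

Traits hang off an individual component, while policies and workflow apply to the application as a whole.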

Installing and configuring with Helm

    helm repo add kubevela https://charts.kubevela.net/core
    helm repo update
    helm install --create-namespace -n vela-system kubevela kubevela/vela-core --wait --set multicluster.enabled=false

    # Uninstall and clean up:
    # vela addon disable velaux
    # vela uninstall
    # helm uninstall -n vela-system kubevela
    # kubectl get crd | grep oam | awk '{print $1}' | xargs kubectl delete crd
    # rm -rf ~/.vela

According to the reference, the "Cluster Gateway" routes Kubernetes API traffic to multiple Kubernetes clusters; it can be disabled with --set multicluster.enabled=false.

Helm Chart CICD

Delivering the first application

    # Create the namespace in the control-plane cluster
    vela env init prod --namespace prod
    vela up -f https://kubevela.net/example/applications/first-app.yaml

    # Normally the application pauses (workflowSuspending) after the first target is deployed
    vela status first-vela-app

    # Manual approval: let the application proceed to the second target
    vela workflow resume first-vela-app

    # Access the application
    vela port-forward first-vela-app 8000:8000
    curl http://127.0.0.1:8000
    # Output: "Hello KubeVela! Make shipping applications more enjoyable." followed by the ASCII-art KubeVela logo

    # Delete the application when finished
    vela delete first-vela-app

first-app.yaml

    apiVersion: core.oam.dev/v1beta1
    kind: Application
    metadata:
      name: first-vela-app
    spec:
      components:
        - name: express-server
          type: webservice
          properties:
            image: oamdev/hello-world
            ports:
              - port: 8000
                expose: true
          traits:
            - type: scaler
              properties:
                replicas: 1
      policies:
        - name: target-default
          type: topology
          properties:
            # "local" is the cluster where KubeVela itself runs
            clusters: ["local"]
            namespace: "default"
        - name: target-prod
          type: topology
          properties:
            clusters: ["local"]
            # This namespace must be created before the application is deployed
            namespace: "prod"
        - name: deploy-ha
          type: override
          properties:
            components:
              - type: webservice
                traits:
                  - type: scaler
                    properties:
                      replicas: 2
      workflow:
        steps:
          - name: deploy2default
            type: deploy
            properties:
              policies: ["target-default"]
          - name: manual-approval
            type: suspend
          - name: deploy2prod
            type: deploy
            properties:
              policies: ["target-prod", "deploy-ha"]

Advanced K8s: Knative Function Services

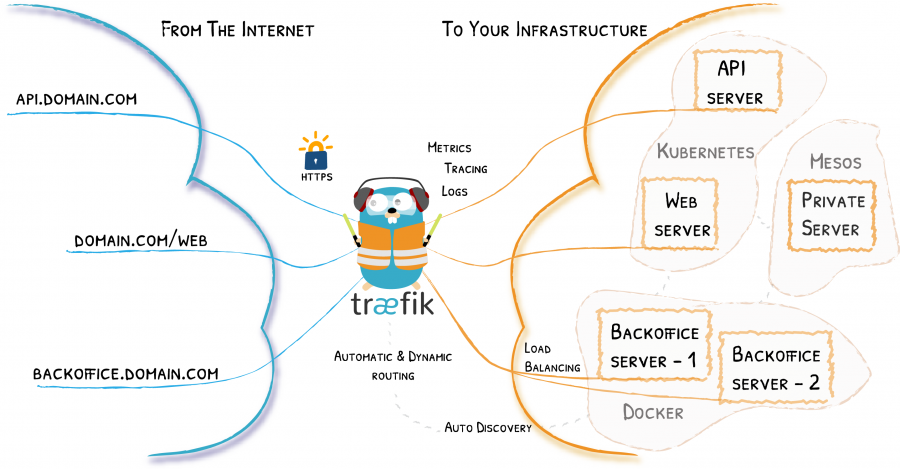

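Advanced K8s: ServiceMesh - Maesh in Practice

Maesh integrates natively with Kubernetes and can be installed with Helm. It requires Kubernetes 1.11 or later because it relies on CoreDNS to run Maesh endpoints instead of standard Kubernetes service endpoints. Maesh endpoints run side by side with services and are opt-in by default: existing services are unaffected until they are added to the mesh, so Maesh can be adopted incrementally.

Maesh's feature set is built on Traefik, the open-source reverse proxy and load balancer written in Go, and includes Traefik's core components. Network observability is provided through OpenTracing, a vendor-neutral API specification and instrumentation framework for distributed tracing. Traffic-management controls such as load balancing, retries, circuit breakers, and rate limiting can be defined as annotations on Kubernetes Services.

Maesh can also be configured through SMI (Service Mesh Interface, a standard interface for service meshes). In SMI mode, access and routes must be enabled explicitly; by default, all routes and access are denied. Enabling SMI is part of Maesh's static configuration, which is defined when the mesh is installed.

Maesh supports both TCP and HTTP. In HTTP mode, Maesh relies on Traefik to route on virtual host, path, headers, and cookies. TCP mode integrates with SNI routing support. Both modes can be used in the same cluster.

To use Maesh, reference a service as <servicename>.<namespace>.maesh instead of <servicename>.<namespace>. Requests to these names are accessed and routed through the Maesh service mesh. With this approach, some services can run through the mesh while others do not.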
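The naming rule can be sketched as a small helper. The function name, the service `whoami`, the namespace `default`, and port 80 are all made up for illustration:

```shell
# Build the mesh-routed host name for a service: <servicename>.<namespace>.maesh
maesh_host() {
  printf '%s.%s.maesh\n' "$1" "$2"
}

# Print the curl command a client pod would use to reach the service through the mesh.
echo "curl http://$(maesh_host whoami default):80/"
```

A client that keeps using `whoami.default` bypasses the mesh, which is what makes gradual adoption possible.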

Creating the RBAC role for Maesh

    apiVersion: v1
    kind: ServiceAccount
    metadata:
      name: tiller
      namespace: kube-system
    ---
    apiVersion: rbac.authorization.k8s.io/v1   # v1beta1 was removed in Kubernetes 1.22
    kind: ClusterRoleBinding
    metadata:
      name: tiller
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: cluster-admin
    subjects:
      - kind: ServiceAccount
        name: tiller
        namespace: kube-system

Maesh requires local storage

    ---
    apiVersion: storage.k8s.io/v1
    kind: StorageClass
    metadata:
      name: local-path
    provisioner: kubernetes.io/no-provisioner
    volumeBindingMode: WaitForFirstConsumer
    ---
    apiVersion: v1
    kind: PersistentVolume
    metadata:
      name: local-storage
    spec:
      capacity:
        storage: 1Gi
      # volumeMode field requires BlockVolume Alpha feature gate to be enabled.
      volumeMode: Filesystem
      accessModes:
        - ReadWriteOnce
      persistentVolumeReclaimPolicy: Delete
      # Changed from "local-storage" so that it matches the StorageClass defined above
      storageClassName: local-path
      local:
        path: /disks/ssd1
      nodeAffinity:
        required:
          nodeSelectorTerms:
            - matchExpressions:
                - key: kubernetes.io/hostname
                  operator: In
                  values:
                    - k8s-m1
                    - k8s-n2
                    - k8s-n3

Installing the Maesh Helm chart

    # Helm 2 syntax (Tiller)
    helm repo add maesh https://containous.github.io/maesh/charts
    helm repo update
    helm init --service-account tiller
    helm list
    helm del --purge maesh
    helm install --name=maesh --namespace=maesh maesh/maesh
    kubectl -n kube-system get pods,deployment,svc
    kubectl get all -n maesh
    kubectl delete deployment tiller-deploy --namespace=kube-system
    kubectl delete svc tiller-deploy --namespace=kube-system

Maesh 实例

K8S高级之ServiceMesh服务治理

服务网格是一个专门的基础设施层,用来处理服务到服务之间的通信。服务网格用来解决微服务运维方面的一些复杂性,它围绕可观测性、重试/超时、服务发现以及动态代理等方面的最佳实践进行了封装,上述所提及的这些关注点通常会妨碍微服务的部署。

服务网格领域的几个流行工具包括:Istio、 Linkerd2、Cilium、Consul Connect

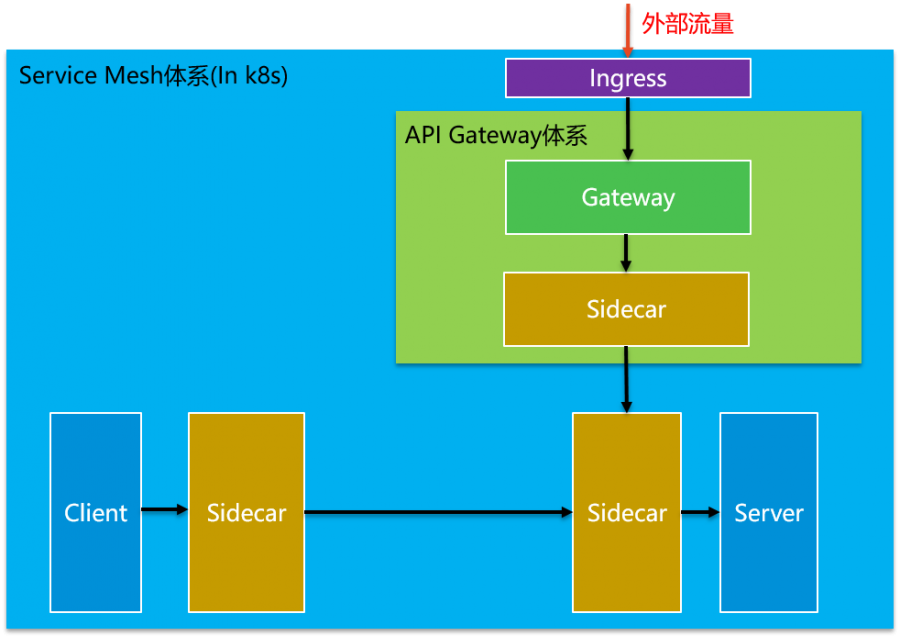

♥ 通过Service Mesh的控制平面,控制范围从微服务体系扩展到了API Gateway体系。

将API Gateway纳入Service Mesh体系

参考资料

socat是一个多功能的网络工具,支持广播和多播、抽象Unix sockets、Linux tun/tap、GNU readline 和 PTY。

socat的主要特点就是在两个数据流之间建立通道;且支持众多协议和链接方式:ip, tcp, udp, ipv6, pipe,exec,system,open,proxy,openssl,socket等。

Manually injecting the Istio sidecar

    kubectl apply -f install/kubernetes/istio-demo.yaml

The older approach

    cd istio-0.7
    kubectl create -f istio-sidecar-injector-configmap-release.yaml --dry-run -o=jsonpath='{.data.config}' > inject-config.yaml
    kubectl -n istio-system get configmap istio -o=jsonpath='{.data.mesh}' > mesh-config.yaml
    istioctl kube-inject \
      --injectConfigFile inject-config.yaml \
      --meshConfigFile mesh-config.yaml \
      --filename path/to/original/deployment.yaml \
      --output deployment-injected.yaml
    kubectl apply -f deployment-injected.yaml

Testing internal DNS names in Istio

    kubectl -n istio-system exec -ti prometheus -- nslookup istio-statsd-prom-bridge

Port forwarding with Istio

grafana

    kubectl -n istio-system port-forward \
      $(kubectl -n istio-system get pod -l app=grafana -o jsonpath='{.items[0].metadata.name}') \
      3000:3000 &
    # DNAT to 127.0.0.1 is only honored when net.ipv4.conf.eth0.route_localnet=1
    iptables -t nat -A PREROUTING -p tcp -i eth0 --dport 3000 -j DNAT --to-destination 127.0.0.1:3000

servicegraph

    kubectl -n istio-system port-forward \
      $(kubectl -n istio-system get pod -l app=servicegraph -o jsonpath='{.items[0].metadata.name}') \
      8088:8088 &
    iptables -t nat -A PREROUTING -p tcp -i eth0 --dport 8088 -j DNAT --to-destination 127.0.0.1:8088
    # Then open http://192.168.13.142:8088/dotviz

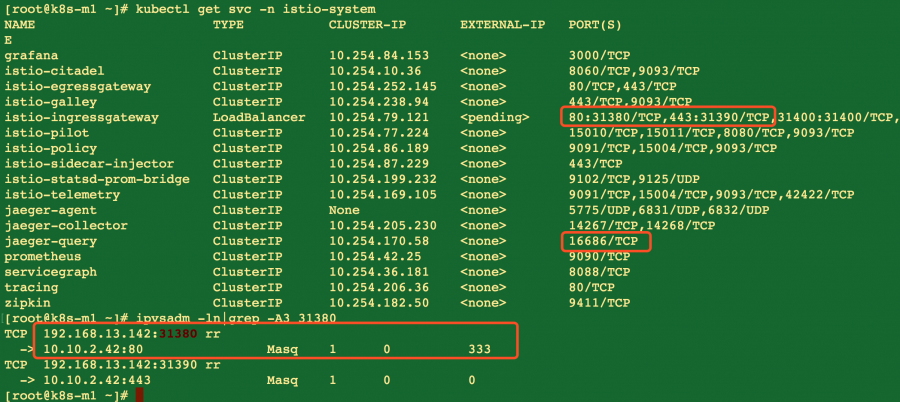

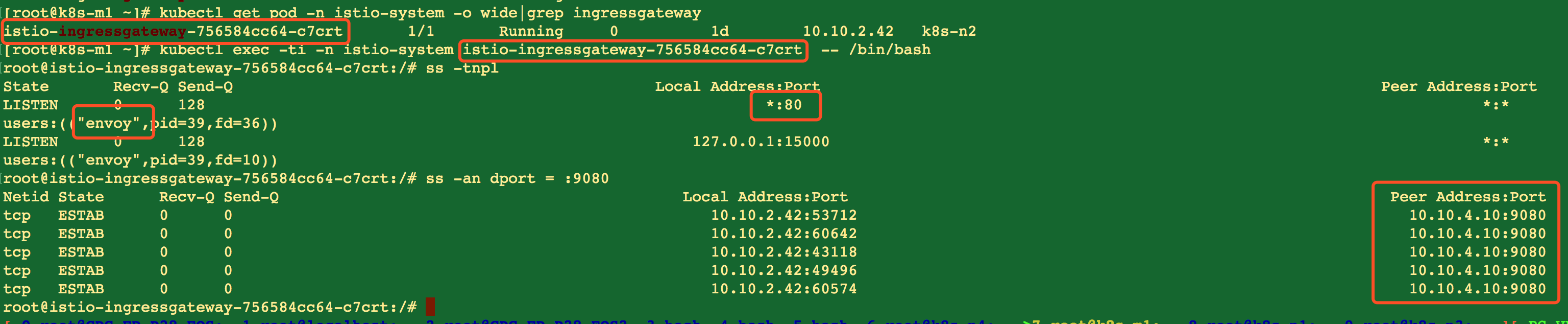

Forwarding directly with iptables in Istio

    INGRESS_PORT=$(kubectl -n istio-system get service istio-ingressgateway -o jsonpath='{.spec.ports[?(@.name=="http2")].nodePort}')
    SECURE_INGRESS_PORT=$(kubectl -n istio-system get service istio-ingressgateway -o jsonpath='{.spec.ports[?(@.name=="https")].nodePort}')
    INGRESS_HOST=$(kubectl -n istio-system get service istio-ingressgateway -o jsonpath='{.status.loadBalancer.ingress[0].hostname}')
    export GATEWAY_URL=$INGRESS_HOST:$INGRESS_PORT

    # Local source IP. Newer iproute2 appends "uid N", so take the field after "src" rather than $NF
    src=$(ip -4 route get 1.1.1.1 | awk '{for (i = 1; i < NF; i++) if ($i == "src") {print $(i + 1); exit}}')
    # Print one DNAT rule per matching service ($3 = CLUSTER-IP, $5 = PORT(S))
    kubectl get svc -n istio-system | awk -v src="$src" '
      $1 ~ /grafana|servicegraph|jaeger-query/ {
        gsub("/TCP", "", $5)
        print "iptables -t nat -A PREROUTING -p tcp -d", src, "--dport", $5, "-j DNAT --to-destination", $3 ":" $5
      }'
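The rule generation can be tried without a cluster by feeding canned `kubectl get svc` output to the awk filter. This is only a sketch: the function name is hypothetical, the cluster IPs are made up, and it assumes each matching service exposes a single TCP port:

```shell
# Turn `kubectl get svc` output (read from stdin) into iptables DNAT commands.
# $1 is the local address that clients connect to.
dnat_rules() {
  awk -v src="$1" '
    $1 ~ /grafana|servicegraph|jaeger-query/ {
      port = $5
      sub("/TCP", "", port)    # "3000/TCP" -> "3000"
      print "iptables -t nat -A PREROUTING -p tcp -d", src, "--dport", port, "-j DNAT --to-destination", $3 ":" port
    }'
}

# Sample input in the column layout of `kubectl get svc`.
dnat_rules 192.168.13.142 <<'EOF'
NAME           TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)     AGE
grafana        ClusterIP   10.96.10.10     <none>        3000/TCP    1d
istio-pilot    ClusterIP   10.96.10.20     <none>        15010/TCP   1d
servicegraph   ClusterIP   10.96.10.30     <none>        8088/TCP    1d
EOF
```

The function only prints the rules, so they can be reviewed before being piped to `sh`.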

Istio + Bookinfo in practice

    kubectl apply -f istio-demo.yaml
    kubectl apply -f <(istioctl kube-inject -f samples/bookinfo/platform/kube/bookinfo.yaml)
    kubectl apply -f samples/bookinfo/networking/bookinfo-gateway.yaml
    kubectl apply -f samples/bookinfo/networking/destination-rule-all.yaml
    kubectl apply -f samples/bookinfo/networking/virtual-service-all-v1.yaml

Key Istio resource types

Gateway

    apiVersion: networking.istio.io/v1alpha3
    kind: Gateway
    metadata:
      name: bookinfo-gateway
    spec:
      selector:
        istio: ingressgateway # use istio default controller
      servers:
        - port:
            number: 80
            name: http
            protocol: HTTP
          hosts:
            - "*"

DestinationRule

    apiVersion: networking.istio.io/v1alpha3
    kind: DestinationRule
    metadata:
      name: reviews
    spec:
      host: reviews
      subsets:
        - name: v1
          labels:
            version: v1
        - name: v2
          labels:
            version: v2
        - name: v3
          labels:
            version: v3

VirtualService

    # Route external requests arriving at bookinfo-gateway to productpage
    apiVersion: networking.istio.io/v1alpha3
    kind: VirtualService
    metadata:
      name: bookinfo
    spec:
      hosts:
        - "*"
      gateways:
        - bookinfo-gateway
      http:
        - match:
            - uri:
                exact: /productpage
            - uri:
                exact: /login
            - uri:
                exact: /logout
            - uri:
                prefix: /api/v1/products
          route:
            - destination:
                host: productpage
                port:
                  number: 9080
    ---
    # Send all reviews traffic to subset v1
    apiVersion: networking.istio.io/v1alpha3
    kind: VirtualService
    metadata:
      name: reviews
    spec:
      hosts:
        - reviews
      http:
        - route:
            - destination:
                host: reviews
                subset: v1
    ---
    # Split reviews traffic 50/50 between subsets v1 and v3
    apiVersion: networking.istio.io/v1alpha3
    kind: VirtualService
    metadata:
      name: reviews
    spec:
      hosts:
        - reviews
      http:
        - route:
            - destination:
                host: reviews
                subset: v1
              weight: 50
            - destination:
                host: reviews
                subset: v3
              weight: 50

🏆Building a k8s Cluster with Kubeadm

References

Initializing the system environment

    # Initialization is the same as in the automated-script k8s installation
    ansible-playbook -i lists_k8s main.yml -e "host=all" -t init

By default the kubelet refuses to start when swap is enabled; this restriction can be lifted with the kubelet startup flag --fail-swap-on=false.

Starting with v1.10, some kubelet parameters must be set in a configuration file: --kubeconfig=

Adding the repository

    cat <<EOF > /etc/yum.repos.d/kubernetes.repo
    [kubernetes]
    name=Kubernetes
    baseurl=https://packages.cloud.google.com/yum/repos/kubernetes-el7-x86_64
    enabled=1
    gpgcheck=1
    repo_gpgcheck=1
    gpgkey=https://packages.cloud.google.com/yum/doc/yum-key.gpg https://packages.cloud.google.com/yum/doc/rpm-package-key.gpg
    EOF

Installing the tools

    yum makecache fast
    yum install -y kubelet kubeadm kubectl
    systemctl enable kubelet
    # Only needed when the Docker version is <= 1.13
    ln -s /usr/libexec/docker/docker-runc-current /usr/local/bin/docker-runc

Initializing the cluster

    kubeadm reset   # clean up the environment first
    kubeadm init \
      --kubernetes-version=v1.10.3 \
      --pod-network-cidr=10.244.0.0/16 \
      --apiserver-advertise-address=0.0.0.0

Status checks

    kubectl get cs
    kubectl get csr
    NAME                                                   AGE   REQUESTOR           CONDITION
    node-csr-9mU9zhj0Q_OA3DyPeupKZrirTsOMLM0Ahl2Mjit6bEI   18m   kubelet-bootstrap   Approved,Issued
    node-csr-HrGdN7amR0Vp1zSfvPFZMbD89U3R9nkHnVViCm-d_O8   18m   kubelet-bootstrap   Approved,Issued
    node-csr-RPntnuINm2iVEYUKGSDLJHkyta9yq75mTZnFa_g9OaE   18m   kubelet-bootstrap   Approved,Issued

- Approved (without Issued): the controller-manager is not configured correctly; check whether the SSL signing options were added.

- Approved,Issued: the request was approved and the certificate issued (OK).

    kubectl get nodes -o wide
    NAME     STATUS     ROLES    AGE   VERSION
    k8s-m1   NotReady   <none>   1m    v1.11.0
    k8s-n1   NotReady   <none>   1m    v1.11.0
    k8s-n2   NotReady   <none>   1m    v1.11.0

- NotReady: there is a network problem between the nodes.

kubectl get pods -o wide --all-namespaces

Configuring the pod network

    wget https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml
    kubectl apply -f kube-flannel.yml
    #kubectl apply -f http://mirror.faasx.com/kubernetes/installation/hosted/kubeadm/1.7/calico.yaml

Letting the master run workloads

    kubectl taint nodes k8s-m1 node-role.kubernetes.io/master-

Installing the dashboard add-on

Monitoring the cluster with Weave Scope

Configuring cross-cluster authentication with kubeconfig files

K8s Troubleshooting Collection

Commands for locating problems

    kubectl logs
    kubectl get events
    kubectl describe pod

overlay2: the backing xfs without d_type

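When Docker runs on the overlay2 storage driver, the backing filesystem must support d_type. Docker 1.13 and later check for this; run docker info to see whether the filesystem supports d_type.

If the filesystem backing overlayfs does not support d_type, containers may hit odd errors when working with the filesystem, for example a Chown error during bootstrap or failures during rebuild.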

mkfs.xfs -f -l internal,lazy-count=1,size=128m -i attr=2 -d agcount=8 -i size=512 -n ftype=1 /dev/vdb
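Before reformatting a disk it is worth confirming the diagnosis. A small sketch that looks for the `Supports d_type` line in `docker info` output; the function name is hypothetical, and the canned input stands in for a real Docker host:

```shell
# Read `docker info` output on stdin; succeed only if it reports "Supports d_type: true".
supports_d_type() {
  grep -qi 'supports d_type: *true'
}

# On a Docker host:
#   docker info 2>/dev/null | supports_d_type || echo "backing filesystem lacks d_type"
# Checked here against canned lines so the sketch runs without Docker:
printf 'Storage Driver: overlay2\n Backing Filesystem: xfs\n Supports d_type: false\n' \
  | supports_d_type || echo "backing filesystem lacks d_type"
```

If the check fails on an XFS volume, recreating the filesystem with `-n ftype=1`, as in the mkfs.xfs command above, is the remedy this section describes.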

docker-runc not installed on system

journalctl -xeu docker

With Docker versions before 1.13, create a symlink for docker-runc:

    ln -s /usr/libexec/docker/docker-runc-current /usr/local/bin/docker-runc

no API token found for service account

Edit the KUBE_ADMISSION_CONTROL parameter in /etc/kubernetes/apiserver and remove the "ServiceAccount" option from:

    KUBE_ADMISSION_CONTROL="--admission_control=NamespaceLifecycle,NamespaceExists,LimitRanger,SecurityContextDeny,ServiceAccount,ResourceQuota"

Restart the kube-apiserver service:

    systemctl restart kube-apiserver

SecurityContext.RunAsUser is forbidden

Edit the KUBE_ADMISSION_CONTROL parameter in /etc/kubernetes/apiserver and remove the "SecurityContextDeny" option, leaving:

    KUBE_ADMISSION_CONTROL="--admission_control=NamespaceLifecycle,NamespaceExists,LimitRanger,ResourceQuota"

Restart the kube-apiserver service:

    systemctl restart kube-apiserver

localhost:8080 was refused

Note the kube-apiserver option --insecure-port=0: in a cluster initialized by kubeadm 1.6.0, kube-apiserver does not listen on the default HTTP port 8080.

So running kubectl get nodes reports:

The connection to the server localhost:8080 was refused - did you specify the right host or port?

Checking kube-apiserver's listening ports shows that it only listens on HTTPS port 6443.

To access the apiserver with kubectl, append the following environment variable to ~/.bash_profile and reload it:

    export KUBECONFIG=/etc/kubernetes/admin.conf
    source ~/.bash_profile

After that, kubectl works on the master node.

Pod stuck in ContainerCreating (first rule out blocked image downloads)

    kubectl get pod
    kubectl describe pod

The logs showed that rhsm was missing; try installing it with yum install rhsm. The problem does not occur when the Red Hat pause image is not used.

User cannot create resource "certificatesigningrequests" in API group "certificates.k8s.io" at the cluster scope

kubectl create clusterrolebinding kubelet-bootstrap --clusterrole=system:node-bootstrapper --group=system:bootstrappers

    kubectl delete clusterrolebinding kubelet-bootstrap
    [root@k8s-m1 kubernetes]# kubectl get csr -o wide --all-namespaces
    NAME                                                   AGE   REQUESTOR                 CONDITION
    node-csr-TgFxbCj01ebWz-JQEwrMT5_IAa76Mg5GqgYdPvp2dT8   84s   system:bootstrap:c7i6gd   Pending

User cannot list resource "nodes" in API group "" at the cluster scope

A role binding is missing; add it:

    kubectl create clusterrolebinding kubelet-nodes --clusterrole=system:node --group=system:nodes

CSR status stays Pending

For security reasons, the CSR approving controllers do not automatically approve kubelet server certificate signing requests; they must be approved manually:

kubectl certificate approve node-csr-TgFxbCj01ebWz-JQEwrMT5_IAa76Mg5GqgYdPvp2dT8
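When several nodes join at once, copying each request name by hand gets tedious. A sketch that extracts the pending names from `kubectl get csr` output; the function name is hypothetical, and the second sample row is made up:

```shell
# Read `kubectl get csr` output on stdin and print the names of requests
# whose last column (CONDITION) is exactly "Pending".
pending_csrs() {
  awk 'NR > 1 && $NF == "Pending" { print $1 }'
}

# Canned sample in the column layout of `kubectl get csr`.
pending_csrs <<'EOF'
NAME                                                   AGE   REQUESTOR                 CONDITION
node-csr-TgFxbCj01ebWz-JQEwrMT5_IAa76Mg5GqgYdPvp2dT8   84s   system:bootstrap:c7i6gd   Pending
node-csr-example-already-issued                        18m   kubelet-bootstrap         Approved,Issued
EOF
```

On a live cluster the names could be fed to the approve command, for example `kubectl get csr | pending_csrs | xargs kubectl certificate approve`, after checking that every listed requestor is expected.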

kubectl node01 notready

Check /etc/resolv.conf, and check whether the master is reachable.

Unable to update cni config: No networks found

    journalctl -xeu kubelet

    wget https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml
    kubectl apply -f kube-flannel.yml

Or run kube-router:

    kubectl apply -f https://raw.githubusercontent.com/cloudnativelabs/kube-router/master/daemonset/kube-router-all-service-daemonset.yaml

network: no IP ranges specified

Failed to get pod CIDR from CNI conf file

    kubectl get pods -o wide --all-namespaces
    NAMESPACE     NAME                READY   STATUS             RESTARTS   AGE     IP               NODE     NOMINATED NODE   READINESS GATES
    kube-system   kube-router-pn5mn   0/1     CrashLoopBackOff   3          2m34s   192.168.13.136   k8s-n2   <none>           <none>
    [root@k8s-m1 kube-router]# kubectl -n kube-system logs kube-router-pn5mn
    Failed to parse kube-router config: Failed to build configuration from CLI: Error loading config file "/var/lib/kube-router/kubeconfig": read /var/lib/kube-router/kubeconfig: is a directory

    rm -rf /var/lib/kube-router/kubeconfig && touch /var/lib/kube-router/kubeconfig

unknown container "/system.slice/kubelet.service"

Append the following flags to the kubelet:

    --runtime-cgroups=/systemd/system.slice --kubelet-cgroups=/systemd/system.slice

Failed to get system container stats for

failed to get cgroup stats for

failed to get container info for

    --runtime-cgroups=/systemd/system.slice --kubelet-cgroups=/systemd/system.slice

Kubernetes 1.9 and CentOS 7.3 kernel compatibility issue

Kubernetes 1.9 and kernel compatibility issue

crictl not found in system path

Install crictl manually.

kubectl get node No resources found

Failed to create ["kubepods"] cgroup

Upgrade systemd:

    yum update systemd

How to delete nodes in Kubernetes

    kubectl delete node 10.31.31.29

pod status is Completed but not Succeeded

kubectl describe pod istio-cleanup-secrets -n istio-system

Unable to authenticate ... invalid bearer token

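Check that the token in token.csv (referenced by the kube-apiserver startup flags) matches the token in bootstrap.kubeconfig, the bootstrap file specified in the kubelet startup flags. The token must also be a literal value; a variable cannot be used in its place.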
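The comparison can be scripted. The sketch below assumes the usual layouts, with the token as the first comma-separated field of token.csv and as a `token:` entry in the kubeconfig; the function name and the example paths are assumptions, since file locations differ between installs:

```shell
# Succeed when the first field of token.csv equals the token in the kubeconfig.
# $1 = path to token.csv, $2 = path to bootstrap.kubeconfig
bootstrap_tokens_match() {
  csv_token=$(head -n 1 "$1" | cut -d, -f1)
  kubeconfig_token=$(awk '$1 == "token:" { print $2; exit }' "$2")
  [ -n "$csv_token" ] && [ "$csv_token" = "$kubeconfig_token" ]
}

# Example (paths are assumptions):
#   bootstrap_tokens_match /etc/kubernetes/token.csv /etc/kubernetes/bootstrap.kubeconfig \
#     && echo "tokens match" || echo "tokens differ"
```

A kubeconfig whose token still reads like `${BOOTSTRAP_TOKEN}` fails this check, which is the unexpanded-variable case described above.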

unable to do port forwarding: socat not found

yum install -y socat

How to install a newer Docker version

    yum-config-manager \
      --add-repo \
      https://download.docker.com/linux/centos/docker-ce.repo
    yum list docker-ce.x86_64 --showduplicates | sort -r
    yum install -y --setopt=obsoletes=0 \
      docker-ce-17.03.2.ce-1.el7.centos \
      docker-ce-selinux-17.03.2.ce-1.el7.centos

Temporarily disabling a node

    kubectl delete node xxx

Adjusting a node's scheduling weight

    kubectl label node k8s-m1 cpu=high
    kubectl label node k8s-n2 cpu=mid
    kubectl label node k8s-n1 cpu=low
    ......
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
              - matchExpressions:
                  - key: cpu
                    operator: In
                    values:
                      - high
                      - mid

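Migrating services to other nodes

Prevent pods from being scheduled onto the node:

    kubectl cordon <node>

Evict all pods from the node:

    kubectl drain <node>

This command deletes all pods on the node (except DaemonSet pods) and restarts them on other nodes. Once maintenance on the node is finished and the kubelet has been started, run

    kubectl uncordon <node>

to bring the node back into the Kubernetes cluster.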
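The three steps can be wrapped into a pair of helpers. This is a sketch, not a hardened script: the function names are hypothetical, and the `KUBECTL` variable exists only so the sequence can be dry-run with `echo`:

```shell
# kubectl binary; override (for example KUBECTL=echo) for a dry run.
KUBECTL="${KUBECTL:-kubectl}"

# Stop scheduling onto the node, then evict its pods.
# --ignore-daemonsets lets drain proceed when DaemonSet-managed pods are present.
node_maintenance_start() {
  "$KUBECTL" cordon "$1" &&
    "$KUBECTL" drain "$1" --ignore-daemonsets
}

# Allow scheduling again once maintenance is done and the kubelet is running.
node_maintenance_end() {
  "$KUBECTL" uncordon "$1"
}
```

Setting `KUBECTL=echo` and then calling `node_maintenance_start k8s-n1` prints the commands instead of executing them, which is a cheap way to check the sequence first.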

Setting the time zone for all pods

When writing the Kubernetes YAML, add the following to set every container's time zone to the desired one:

    ......
    volumeMounts:
      - name: tz-config
        mountPath: /etc/localtime
    ......
    volumes:
      - name: tz-config
        hostPath:
          path: /usr/share/zoneinfo/Asia/Shanghai

Helm deployment permission problems

Create the tiller service account and role binding

    apiVersion: v1
    kind: ServiceAccount
    metadata:
      name: tiller
      namespace: kube-system
    ---
    apiVersion: rbac.authorization.k8s.io/v1   # v1beta1 was removed in Kubernetes 1.22
    kind: ClusterRoleBinding
    metadata:
      name: tiller
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: cluster-admin
    subjects:
      - kind: ServiceAccount
        name: tiller
        namespace: kube-system

Delete the old tiller-deploy

    kubectl delete deployment tiller-deploy --namespace=kube-system
    kubectl delete svc tiller-deploy --namespace=kube-system

Re-initialize Helm and specify the service account to use

    $ helm init --service-account tiller

local-path not found

Local volume is a fairly new storage type; it is recommended for Kubernetes v1.10 and later, and is currently still beta.

A local volume lets users access a node's local storage through the standard PVC interface in a simple, portable way. The PV definition must include node-affinity information, which Kubernetes uses to schedule containers onto the correct node.

    ---
    apiVersion: storage.k8s.io/v1
    kind: StorageClass
    metadata:
      name: local-path
    provisioner: kubernetes.io/no-provisioner
    volumeBindingMode: WaitForFirstConsumer
    ---
    apiVersion: v1
    kind: PersistentVolume
    metadata:
      name: local-storage
    spec:
      capacity:
        storage: 1Gi
      # volumeMode field requires BlockVolume Alpha feature gate to be enabled.
      volumeMode: Filesystem
      accessModes:
        - ReadWriteOnce
      persistentVolumeReclaimPolicy: Delete
      # Changed from "local-storage" so that it matches the StorageClass defined above
      storageClassName: local-path
      local:
        path: /disks/ssd1
      nodeAffinity:
        required:
          nodeSelectorTerms:
            - matchExpressions:
                - key: kubernetes.io/hostname
                  operator: In
                  values:
                    - k8s-m1
                    - k8s-n2
                    - k8s-n3

RunAsUser is forbidden

The simplest fix:

- Edit the admission controllers so that SecurityContextDeny is not enabled, then restart the apiserver

--admission-control=NamespaceLifecycle,NamespaceExists,LimitRanger,ResourceQuota,ServiceAccount,NodeRestriction

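How do I change a Kubernetes node's IP address?

Notes on upgrading Kubernetes to 1.24

Docker Engine can still be used with Kubernetes 1.23, but when it is the runtime the kubelet prints a warning at startup. You will see this warning in every version up to 1.23. dockershim is removed in Kubernetes 1.24.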
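Before upgrading, it helps to know which nodes still report Docker Engine as their runtime. A sketch that filters "node runtime" pairs; the function name is hypothetical, and the version numbers in the sample are made up:

```shell
# Read "<node> <runtime>" pairs on stdin and print the nodes still on Docker Engine.
nodes_on_docker() {
  awk '$2 ~ /^docker:\/\// { print $1 }'
}

# Against a live cluster the pairs can come from the node status, for example:
#   kubectl get nodes -o jsonpath='{range .items[*]}{.metadata.name}{" "}{.status.nodeInfo.containerRuntimeVersion}{"\n"}{end}' | nodes_on_docker
# Canned sample so the sketch runs without a cluster:
nodes_on_docker <<'EOF'
k8s-m1 docker://20.10.7
k8s-n1 containerd://1.6.8
k8s-n2 docker://20.10.7
EOF
```

Every node it lists needs a CRI-compatible runtime in place before its kubelet moves to 1.24.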

unable to determine runtime API version docker

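containerd or CRI-O?

To track OCI more closely, the Kubernetes community also produced CRI-O, a lightweight container runtime. Now that Kubernetes has "abandoned" dockershim, the runtimes to choose from are containerd and CRI-O.

- Early: kubelet → docker-manager → docker

- Middle: kubelet → CRI → docker-shim → docker → containerd → runc

- Middle: kubelet → CRI → cri-containerd → containerd → runc

- Current: kubelet → CRI → containerd (CRI plugin) → runc

- Current: kubelet → CRI → cri-o → runc

Outlook for K8s 2.0

- Replace YAML with the more dependable HCL, like upgrading from an abacus to a calculator

- Allow etcd, the "dictator", to be swapped out, like a phone with a replaceable battery

- A built-in app store (enough of Helm, the hand-me-down scaffolding!)

- IPv6 support by default, solving the age-old problem of running out of IP addresses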